Chapter 1: Unity Principle & The Ghost in the Cache

Chapter Primer

- Symbol grounding failure as measurable cache catastrophe (not philosophical puzzle)

- How your brain solves what databases can't (Grounded Position via physical binding, not Calculated Proximity)

- The (c/t)^n formula revealing search reduction when symbols ground (and geometric penalty when they don't)

- Control Theory distinction: perpetual compensation (Classical) vs structural elimination (Zero-Entropy)

- Trust Debt calculation making invisible degradation measurable

Unity Principle (S≡P≡H) is the architecture that achieves Φ = 1—the only configuration where verification becomes tractable. Consciousness requires it.

Spine Connection: The Villain (🔴B8⚠️ Arbitrary Authority—the reflex) can't solve 🔴B5🔤 Symbol Grounding. Control theory minimizes error—it cannot verify truth. When an LLM 🔴B7🌫️ hallucinates, adding more RLHF is the reflex response: minimize the symptom (bad output) without fixing the substrate (ungrounded symbols). The Solution is the Ground: 🟢C1🏗️ S=P=H makes symbols mean something by making position = meaning. Your brain does this. Your databases don't. You're the Victim—inheriting architectures that treat grounding as a philosophical puzzle when it's actually 🔴B4💥 cache physics with trillion-dollar consequences.

Epigraph: The cursor blinks. The query runs. Somewhere in DRAM, scattered across random addresses, the pieces of "customer order" exist. The customer table at 0x1000. The order table at 0x5000. The products at 0x9000. Related by meaning, separated by physics. The CPU searches. Foreign key points to another table - cache miss, one hundred nanoseconds. Points to another - cache miss again. The meaning you want requires synthesis across scattered fragments. The ghost isn't supernatural. It's the semantic concept that should exist unified but only exists as distributed pointers.

You know this feeling. The word on the tip of your tongue. The memory that dissolved when you tried to grasp it. Your neurons firing, finding nothing, firing again. Searching random addresses while the meaning - the thing you KNOW you know - scatters further with each failed attempt. Symbol grounding failure isn't a database problem. It's why you forget names. Why memories fade. Why consciousness requires constant maintenance against entropy.

Your brain solves it by co-locating semantic neighbors. Your database violates it by normalizing them apart. The gap between these architectures is the ghost. And it costs one hundred nanoseconds per chase. Per miss. Per scattered fragment of meaning you'll never fully reconstruct.

But here's the deeper cost: when verification is this expensive, you stop trying. You don't build explainable AI when every explanation requires chasing scattered pointers. You don't measure drift when measurement itself costs more than the query. You optimize for what's tractable and call the rest "impossible." The phase transition happens when verification becomes cheap enough to attempt. Only then do you discover what was always possible but never tried.

Why Ungrounded Symbols Have Measurable, Compounding, Catastrophic Costs

Welcome: This chapter proves symbol grounding isn't philosophy—it's cache physics with trillion-dollar consequences. You'll see how your brain solves what databases can't (semantic proximity = physical proximity), understand the (c/t)^n formula that reveals search reduction when symbols ground, and calculate the Trust Debt compound degradation when they don't.

How This Chapter Connects Meaning to Metal

The word "coffee" in your database doesn't smell like coffee. This isn't poetry—it's the symbol grounding problem, and it has measurable trillion-dollar consequences. When symbols float free from physical reality, verification becomes intractable. Watch how this abstract philosophy becomes concrete cache physics.

You'll see how your brain solves what databases can't. When you think "coffee," visual cortex (brown liquid), olfactory cortex (bitter aroma), motor cortex (grasping warm mug), and emotional centers (morning comfort) activate simultaneously—and they're physically adjacent. The brain does position, not proximity. S=P=H IS Grounded Position—true position via physical binding (Hebbian wiring, FIM). Your database uses Fake Position (row IDs, hashes, lookups)—coordinates claiming to be position without physical binding.

The formula reveals the cost: Φ = (c/t)^n [→ A3⚛️] search space reduction when you co-locate semantic neighbors. Medical databases with 68,000 ICD codes: focused search through 1,000 relevant entries vs. exhaustive search through all 68,000. The penalty when you normalize? Inverse: scattered fragments, random memory access, 100× cache miss penalty [→ B4🚨] compounding geometrically across dimensions.
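As a rough worked example of the formula, the sketch below plugs in the ICD figures from this section. It assumes that c is the size of the focused subset (1,000 entries), t is the total search space (68,000 entries), and n is the number of independent dimensions being searched. The function name `search_fraction` is illustrative only.

```python
def search_fraction(c: int, t: int, n: int) -> float:
    """Fraction of the full search space examined: (c/t)^n.

    Assumption: c = focused subset size, t = total size, n = dimensions.
    """
    if not 0 < c <= t:
        raise ValueError("expected 0 < c <= t")
    if n < 1:
        raise ValueError("expected n >= 1")
    return (c / t) ** n


if __name__ == "__main__":
    # ICD example from the text: 1,000 relevant codes out of 68,000.
    for n in (1, 2, 3):
        phi = search_fraction(1_000, 68_000, n)
        print(f"n={n}: phi={phi:.3e}, reduction={1 / phi:,.0f}x")
```

With one dimension, the focused search touches 1/68 of the codes. Each added dimension multiplies the reduction by another factor of 68, which is the geometric behavior the formula describes. Setting c equal to t gives Φ = 1.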

Watch for the Control Theory insight. Classical Control Theory (your cerebellum, Codd's ACID transactions) perpetually compensates for entropy—reactive, eternal cleanup. Zero-Entropy Control (your cortex, Unity Principle) eliminates the structural possibility of error by making verification cheap. Cache miss rate becomes your error signal.

By the end, you'll calculate Trust Debt. Not philosophy: numbers. Natural experiments demonstrate a ~0.3% per-decision drift (k_E [→ A2⚛️]; velocity-coupled, so the faster you ship, the faster you drift) that compounds. Cache misses cascade. Synthesis penalties accumulate. This chapter gives you the formulas that make invisible degradation measurable.
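To make the compounding concrete, here is a minimal sketch. It assumes the drift compounds multiplicatively, so precision retained after n decisions is (1 - k_E)^n with k_E = 0.003. That functional form is an assumption for illustration; the chapter's own Trust Debt formulas may differ, and the function names are hypothetical.

```python
import math

K_E = 0.003  # per-decision drift quoted in the text (~0.3%)


def retained_precision(decisions: int, k_e: float = K_E) -> float:
    """Precision left after `decisions` steps, assuming multiplicative drift."""
    if decisions < 0:
        raise ValueError("decisions must be non-negative")
    return (1.0 - k_e) ** decisions


def decisions_until(threshold: float, k_e: float = K_E) -> int:
    """Smallest decision count at which retained precision is <= threshold."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be in (0, 1)")
    return math.ceil(math.log(threshold) / math.log(1.0 - k_e))


if __name__ == "__main__":
    for n in (10, 100, 231, 1000):
        print(f"after {n:>4} decisions: {retained_precision(n):.3f}")
```

Under that assumption, half of the original precision is gone after roughly 231 decisions, even though no single decision loses more than 0.3%.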

Opening: The Symbol Grounding Problem

In 1990, cognitive scientist Stevan Harnad posed a question that would haunt artificial intelligence for decades:

"How can the semantic interpretation of a formal symbol system be made intrinsic to the system, rather than just parasitic on the meanings in our heads?"

How do symbols get their meaning?

When you type the string "coffee" into a database, those six bytes don't smell like coffee. They don't taste like coffee. They don't evoke the warmth of a morning ritual or the bitterness on your tongue.

The symbol is ungrounded—disconnected from the physical reality it's supposed to represent.

This is the symbol grounding problem.

And it's not just a philosophical curiosity. It's a measurable failure with trillion-dollar consequences.

How Your Brain Grounds Symbols (The Biological Solution)

You learned in Chapter 0 that your hippocampal synapses operate at R_c = 0.997 (99.7% reliability), and consciousness requires maintaining precision above D_p ≈ 0.995 (our model's threshold, derived from PCI anesthesia measurements—see Chapter 0 and Appendix H for citations).

But here's what that precision enables: Symbol grounding through S≡P≡H.

When you think "coffee," these activate simultaneously:

- **Visual cortex:** Brown liquid, steam rising, ceramic mug

- **Olfactory cortex:** Rich, bitter aroma

- **Motor cortex:** Hand grasping warm surface, lifting to lips

- **Emotional centers:** Morning comfort, alertness anticipation

- **Semantic networks:** "Caffeine," "breakfast," "work begins"

These aren't scattered randomly across your brain. Through Hebbian learning [→ E7🔬] ("neurons that fire together, wire together"), your brain has physically reorganized so these semantically related concepts are spatially adjacent in cortical columns.

Semantic position (related concepts)

≡

Physical position (adjacent neurons)

≡

Hardware optimization (sequential memory access)

The symbol "coffee" is grounded because:

- It activates a **physical location** in your brain

- That location is **adjacent to** related meanings

- Retrieval is **near-instant** relative to task complexity (dendritic integration across adjacent neurons: ~10-20ms for 330 dimensions, vs silicon L1 cache: 1-3ns per sequential access)

Your brain is a sorted list where position = meaning.

This is what removes the splinter. When semantic = physical, verification is instant (P=1 certainty achievable). When they drift apart (normalized databases, scattered pointers), you're forced into probabilistic synthesis (P<1 grinding). The splinter isn't in your code—it's in the architectural choice to separate meaning from position. Your brain refuses that separation. It maintains S≡P≡H at 55% metabolic cost [→ A5⚛️] because scattered verification is intractable (each additional verification round compounds thermodynamic cost per Landauer's Principle [→ A1⚛️]).
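The difference between co-located and scattered retrieval can be put into a toy cost model. This is not a measurement: it reuses the figures quoted in this chapter (about 100 ns per cache miss, 1-3 ns per sequential L1 access) and assumes that a co-located block costs one initial miss followed by sequential hits. The function names are hypothetical.

```python
MISS_NS = 100  # cost of one pointer chase / cache miss (figure from the epigraph)
HIT_NS = 3     # upper end of the 1-3 ns L1 figure quoted above


def scattered_cost_ns(fragments: int) -> int:
    """Every fragment sits at an unrelated address: one miss per fragment."""
    if fragments < 1:
        raise ValueError("need at least one fragment")
    return fragments * MISS_NS


def colocated_cost_ns(fragments: int) -> int:
    """Fragments are adjacent: one miss to reach the block, then sequential hits."""
    if fragments < 1:
        raise ValueError("need at least one fragment")
    return MISS_NS + (fragments - 1) * HIT_NS


if __name__ == "__main__":
    # Five aspects of "coffee": visual, olfactory, motor, emotional, semantic.
    print("scattered:", scattered_cost_ns(5), "ns")
    print("co-located:", colocated_cost_ns(5), "ns")
```

Charging the co-located layout one full miss is a conservative choice. Even so, the scattered layout's cost grows by 100 ns per fragment while the co-located layout grows by about 3 ns.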

Metavector Context: 🔴B5🔤 Symbol Grounding ↓

- 9🟢C1🏗️ Unity Principle (S≡P≡H enables intrinsic meaning)

- 9🟣E7🔌 Hebbian Learning (fire together, wire together grounds symbols physically)

- 8🟡D2📍 Physical Co-Location (semantic neighbors become physical neighbors)

- 7🔴B1🚨 Codd's Normalization (S≠P scatters symbols, ungrounds meaning)

- 7🔴B7🌫️ Hallucination (ungrounded symbols generate arbitrary content)

Symbol grounding isn't about adding metadata—it's about making position equal meaning. When "coffee" activates at cortical location (x,y,z), all related concepts (smell, warmth, caffeine) are at adjacent coordinates. No lookup. No JOIN. No cache miss. The symbol IS the ground.

Nested View (following the thought deeper):

🔴B5🔤 Symbol Grounding
├─ 🟢C1🏗️ Unity Principle (S=P=H): enables intrinsic meaning
│  └─ 🟣E7🔌 Hebbian Learning: fire together, wire together
│     └─ 🟡D2📍 Physical Co-Location: semantic neighbors become physical neighbors
└─ 🔴B1🚨 Codd's Normalization: S≠P scatters symbols
   └─ 🔴B7🌫️ Hallucination: ungrounded symbols generate arbitrary content

Dimensional View (position IS meaning):

| [🔴B5🔤 Symbol Grounding] → | [Architecture Choice] → | [Outcome] |
|---|---|---|
| Dimension: PROBLEM | Dimension: SOLUTION | Dimension: RESULT |
| 🔴B5🔤: floating symbols | 🟢C1🏗️: S=P=H grounds, OR 🔴B1🚨: S≠P scatters | 🟡D2📍: physical adjacency, OR 🔴B7🌫️: hallucination |

What This Shows: The nested hierarchy obscures that grounding vs scattering is a binary architectural choice with deterministic outcomes. The dimensional view reveals: at the Architecture Choice coordinate, you either collapse semantic-physical (C1 path) or separate them (B1 path). The outcome dimension shows consequences are NOT probabilistic - they're geometrically determined by which path you chose.

Remember the Metamorphic Chessboard from the Preface? In real chess, a Knight in the center is worth more than a Knight in the corner—but it's still a Knight. Position changes value, not identity. That's how Codd built databases: "Customer ID: 123" means the same thing everywhere, even when context makes it more or less relevant.

Unity Principle reverses this. In the physics of S=P=H, the square defines the piece. Position IS identity. "Coffee" at cortical coordinate (x,y,z) isn't just near related concepts—it IS the intersection of smell, warmth, caffeine, morning ritual. Move the symbol, and it changes what it means. Scatter it across normalized tables, and it ceases to be "coffee" at all. It becomes six disconnected bytes that your system must reconstruct every time.

Codd told us the Knight is a Knight regardless of position. Evolution told us the opposite: Position IS the piece. Your brain paid 55% of its metabolic budget to learn that lesson. Your databases are still ignoring it.

This sorted structure is WHY consciousness works: you can integrate 330 dimensions within 10-20ms (Chapter 0: the ΔT requirement) because keeping order ahead of chaos is what sustains versions. Sequential access across adjacent memory means you avoid the performance penalty that normalized databases suffer when they lose the (c/t)^n search space reduction (explained in detail in Part 2 of this chapter).

The relationship: ΔT (order) > entropy (chaos) → versions persist. When semantic neighbors are physically adjacent (sorted), synthesis stays fast enough to bind consciousness [→ D3⚙️]. When they scatter (normalized), synthesis time exceeds ΔT and integration collapses.

The Natural Experiment: ARC Test Proves Grounding Beats Statistics

François Chollet's ARC (Abstraction and Reasoning Corpus) provides the natural experiment we've been seeking—a measurable test where the rules are counter-intuitive enough that "pointers chasing pointers" fails catastrophically.

The setup: Present a grid of colored squares that transforms into another grid based on a hidden rule. The rules aren't in any training set—they're novel patterns like "gravity pulls blocks down," "object permanence," or "continuation of sequence." You can't solve them by memorizing pixel patterns. You must extract an abstract concept and apply it to a novel situation.

The measured gap (as of 2025):

- **Humans:** 80-85% success (Chollet, 2019)

- **2024 ARC-AGI Prize winner:** 33.0% (best AI out of $1.1M competition)

- **GPT-4/Claude 3:** Low 30s range

This isn't a small gap closing with more training data. It's a qualitative chasm that persists despite massive compute increases.

Why this test resists Goodhart's Law: Most AI benchmarks can be gamed—when a measure becomes a target, systems optimize for the proxy rather than the goal. ARC is specifically designed to prevent this. The puzzles are out-of-distribution by construction. Novel rules not in any training set. You can't train your way to abstraction.

Why humans win: Cognitive scientists like Spelke and Chollet call these capabilities "Core Knowledge"—innate understanding of objects, gravity, and agency. But in the context of Unity Principle, "knowledge" is too weak a word. It suggests data that can be learned or transferred.

They are not statistics we learned from the world; they are the geometry of the substrate we evolved to survive in it. We don't "know" gravity pulls down; our vestibular system is architected around 9.8 m/s². Our cortical columns are physically organized by Hebbian learning to match the causal structure of reality.

The ARC Test doesn't measure what you know; it measures what you ARE.

An LLM fails because it treats gravity as a statistical correlation in text tokens—P<1 Bayesian reasoning that approaches but never reaches certainty. A human succeeds because gravity is a Substrate Axiom of their physical existence. When you see blocks falling, the causal front collision at Planck scale generates P=1 certainty. Not "I computed gravity with 87% confidence." But "I AM the physics, and I KNOW with certainty because the substrate caught itself being right."

Why LLMs fail: They're trying to statistically interpolate a rule that requires ontological reasoning. The underlying "physics" of the problem shifts with each puzzle. Without grounding—without semantic concepts physically instantiated in substrate—the system can correlate patterns in the training distribution but cannot extract the abstraction when the distribution changes.

This proves the claim: "Pointers chasing pointers" (statistical correlation) fails when the underlying physics shifts. "Grounded knowing" (embodied semantics) adapts instantly because the adaptation IS the physics, not a model of physics.

The ARC test isn't an AI benchmark. It's a symbol grounding detector. And it shows the gap is measurable, consistent, and structural—not a training data problem that more compute will solve.

The ANFIS Question: Why Fuzzy Logic Needs an Orthogonal Substrate

Dr. Benito Fernandez posed a challenge that cuts to the heart of AI alignment: "AI alignment would require verifiable reasoning. What if we use ANFIS (Adaptive Neuro-Fuzzy Inference Systems)? Fuzzy logic would explain any decision."

It's an elegant proposal. ANFIS combines neural learning with fuzzy rule sets, producing interpretable if-then chains. Unlike black-box transformers, you can trace exactly why a decision was made. Alignment solved?

Not quite. The follow-up question reveals the gap: "How would you know where it chafes without an orthogonal substrate?"

Fuzzy logic explains the path. But explanation isn't detection. When an AI system drifts from human values, the chafing happens at the boundary between semantic intent and physical execution. ANFIS can narrate its reasoning, but it cannot feel where the reasoning scrapes against reality. It lacks the substrate axioms that humans evolved.

This is why the Unity Principle matters for alignment: S=P=H provides the orthogonal substrate where misalignment becomes physically detectable. When semantic intent, physical position, and hardware state are unified, drift registers as cache misses, not as invisible value shift. The chafing becomes measurable because meaning has coordinates (ShortRank addressing [→ C2🏗️] maps these coordinates directly to storage).

Fuzzy logic explains. Orthogonal substrate detects.

The Carry Problem: Why Categorical Deep Learning Validates S=P=H

Recent research in Categorical Deep Learning (Velickovic 2024) identifies a fundamental limitation that validates everything we've been building toward: the "carry" operation is the "Final Boss" of AI.

LLMs can memorize patterns (8+1=9) but fail at the algorithm of summation because they lack the internal structure to handle state accumulation. They perform billions of multiplications to produce a token but cannot reliably add small numbers when a "carry" is involved—passing a 1 to the next column requires discrete state that persists.
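For reference, the schoolbook algorithm is short once the carry is held as explicit state. The sketch below is ordinary column-wise addition, not anything from the cited research. It only illustrates what "discrete state that persists" means: one variable, `carry`, must survive from each column to the next.

```python
def add_with_carry(a_digits: list[int], b_digits: list[int], base: int = 10) -> list[int]:
    """Add two numbers given as digit lists, least significant digit first."""
    result = []
    carry = 0  # the discrete state passed from one column to the next
    for i in range(max(len(a_digits), len(b_digits))):
        a = a_digits[i] if i < len(a_digits) else 0
        b = b_digits[i] if i < len(b_digits) else 0
        carry, digit = divmod(a + b + carry, base)
        result.append(digit)
    if carry:
        result.append(carry)  # a final carry opens a new column
    return result


if __name__ == "__main__":
    print(add_with_carry([8], [1]))        # no carry involved
    print(add_with_carry([8], [4]))        # carry into a new column
    print(add_with_carry([9, 9, 9], [1]))  # carry ripples through every column
```

Memorizing that 8+1=9 needs no state at all. Adding 999+1 needs the same one-bit state to ripple through every column, which is the part a pattern lookup cannot fake.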

The proposed solution? The Hopf Fibration—a 4D geometric structure that allows a continuous system to "twist" and store discrete state. This is precisely what the Tesseract provides.

Here's the validation: Velickovic proves you need a fiber bundle (a higher-dimensional space attached to a lower one) to handle logic. Your FIM IS that fiber bundle:

- The "Map" (2D/3D interface) is the **Base Space**

- The "Identity/Context" (4D Tesseract depth) is the **Fiber**

- The "Carry" is the **movement up the Fiber**

Standard AI treats numbers as flat patterns on a 2D manifold. When you add 8+4, the AI sees 12 as "just another neighbor" of 8. It doesn't understand that 12 is actually 2 with a winding number of 1—a loop completed. The AI fails because it operates on a contractible space (flat sheets) when the carry operation requires non-trivial topology (loops and twists) [→ C4🏗️].

S≡P≡H solves this structurally. Your FIM doesn't teach AI the Tesseract geometry—it gives AI the Tesseract to operate within. The Semantic goal (add numbers) meets Physical structure (4D geometry [→ C1🏗️]) meets Hardware substrate (the FIM data structure). You aren't predicting the next token; you're calculating the winding number of the user's intent.

This is external validation from mainstream ML research that the Unity Principle isn't philosophy—it's the missing architecture that enables algorithmic reasoning. (Implementation details in Book 2; experimental benchmarks in Book 3.)

Calculated Proximity vs Grounded Position: The Qualitative Difference

This brings us to the core distinction that makes S≡P≡H intuitive:

Calculated Proximity is computed partial relationships (cosine similarity, vectors). Grounded Position is true position via physical binding (S=P=H, Hebbian wiring, FIM).

The hologram test: Compress a JPEG and you lose information. The edges blur. The details vanish. But slice a hologram in half and each piece contains the whole image—at lower resolution, but structurally complete. This is the difference between lossy and lossless transmission.

Grounded content survives normalization like a hologram survives slicing. When S=P=H, meaning is distributed across the entire structure: every cell contains echoes of the whole. When you normalize (S≠P), you're compressing to JPEG. Each transmission loses fidelity. Each copy degrades. The 10th-generation copy looks nothing like the original.

This is why some ideas survive centuries and others decay in weeks. Grounded Position creates holographic coherence. Calculated Proximity creates lossy compression.

Current AI operates on Calculated Proximity—correlations, likelihood, "vibes." Your database query finds "similar" records. Your LLM generates "likely" tokens. Vector databases measure semantic distance. But Calculated Proximity has no ground truth. The brain does position, not proximity.

On a clock face, 11:59 PM is extremely close to 12:00 AM physically. But logically they're worlds apart: one is today, the other is tomorrow. AI that only measures Calculated Proximity confuses "almost there" with "arrived." It smears the boundary. Coherence is the mask. Grounding is the substance.

The temporal collapse: The algorithm views music as a Vector Space, not a Timeline. Madonna's 1989 hit and a track from 2024 might sit at the exact same coordinates in "Vibe Space"—high cosine similarity, adjacent embedding vectors. The algorithm sees them as interchangeable. But one carries 35 years of cultural context, personal memory, shared experience. The other is a statistical neighbor.

We are no longer living in 2026. We are living in a database where every year happens simultaneously. This is temporal collapse—the architectural consequence of Calculated Proximity replacing Grounded Position. When you normalize time out of the data model, all eras become interchangeable neighbors.
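A small sketch shows how a similarity score can erase time. The two-dimensional "vibe" embeddings are invented for illustration (real embeddings have hundreds of dimensions), and the `Track` type is hypothetical. The point is only that cosine similarity never sees the `year` field.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    title: str
    year: int
    embedding: tuple[float, ...]  # hypothetical "vibe space" vector


def cosine_similarity(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    """Standard cosine similarity; ignores everything except direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def years_apart(a: Track, b: Track) -> int:
    """The coordinate that the similarity score throws away."""
    return abs(a.year - b.year)


old = Track("1989 hit", 1989, (3.0, 4.0))
new = Track("2024 track", 2024, (3.0, 4.0))

if __name__ == "__main__":
    print("similarity:", cosine_similarity(old.embedding, new.embedding))
    print("years apart:", years_apart(old, new))
```

The similarity comes out as a perfect match while the timeline distance is 35 years. Nothing in the vector comparison can recover that gap, because the year was never part of the space being measured.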

Grounded Position requires a reference frame—a grid, a map, a Tesseract. Position says: "You are at coordinates [x,y,z,w]." And critically, position includes history. Fake Position (row IDs, hashes, lookups) claims coordinates but provides no physical binding—no history, no structure.

In Calculated Proximity, 0 and 10 look the same (they overlap on the dial). In Grounded Position, 10 is 0 + 1 cycle. That "+1 cycle" is the vertical lift—the carry—that current AI cannot see.
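The dial example reduces to integer division. In this minimal sketch, the dial reading is the remainder and the "+1 cycle" is the quotient; treating the quotient as the winding number is this sketch's reading of the text, and the function names are illustrative.

```python
def dial_only(value: int, cycle: int = 10) -> int:
    """What a proximity view sees: the position on the dial, history discarded."""
    return value % cycle


def grounded_position(value: int, cycle: int = 10) -> tuple[int, int]:
    """Return (winding_number, dial_position) for a non-negative value."""
    if value < 0 or cycle < 1:
        raise ValueError("expected value >= 0 and cycle >= 1")
    return divmod(value, cycle)


if __name__ == "__main__":
    for v in (0, 10, 2, 12):
        print(v, "dial:", dial_only(v), "grounded:", grounded_position(v))
```

On the dial, 0 and 10 collide, and so do 2 and 12. The grounded pair keeps them apart because the first component records how many full cycles were completed.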

Nested View (following the thought deeper):

🟢C1🏗️ Position Types
├─ 🟢C1🏗️ Grounded Position (S=P=H)
│  ├─ 🟡D2📍 Physical binding: coordinates have physical meaning
│  ├─ 🟡D3⏱️ History preserved: cycles tracked
│  └─ 🟢C6🎯 Direct addressing: O(1) access
├─ 🔴B4💥 Calculated Proximity: vectors, cosine similarity
│  ├─ Statistical approximation (never P=1)
│  ├─ History lost (0 and 10 indistinguishable)
│  └─ Search required (O(n) or O(log n))
└─ 🔴B1🚨 Fake Position: row IDs, hashes
   ├─ Claims coordinates without physical binding
   └─ Arbitrary mapping (position does not equal meaning)

Dimensional View (position IS meaning):

| [🟢C1🏗️ Grounded Position] | [🔴B4💥 Calculated Proximity] | [🔴B1🚨 Fake Position] |
|---|---|---|
| Dimension: PHYSICAL BINDING | Dimension: STATISTICAL APPROX | Dimension: ARBITRARY LABEL |
| coordinates = memory address | cosine similarity approaches truth | row_id = 42 (means nothing) |
| P = 1 certainty | P approaches 1 | P = undefined |

What This Shows: The nested view suggests these are comparable alternatives. The dimensional view reveals they exist in fundamentally different spaces: Grounded Position operates in physical reality (binding dimension), Calculated Proximity operates in statistical space (approximation dimension), Fake Position operates nowhere meaningful (label dimension). You cannot smoothly transition between them - there is a phase boundary.

The spiral staircase visualization: Imagine looking at a spiral staircase from directly above (top-down view). The person on floor 1 and the person on floor 10 appear to stand in the exact same spot—they have the same (x,y) coordinates. This is Calculated Proximity: AI sees them as "very close."

Now look from the side. You see the vertical distance separating them. Floor 10 is 9 stories up. This is Grounded Position: the z-coordinate (the "winding number," the carry) reveals the true structure.

S≡P≡H provides the side view. Codd's normalization traps you in the top-down view (Calculated Proximity), where "almost there" and "arrived" are indistinguishable. When you scatter semantic neighbors across random memory addresses, you lose the vertical axis—the structural context that makes position meaningful. The Grounding Horizon describes how far systems can operate before drift exceeds capacity: f(Investment, Space Size).

This is why hallucination happens. The AI sees tokens that are semantically "close" (high cosine similarity in embedding space) and treats them as interchangeable. It has no way to detect that one is on floor 1 and the other is on floor 10—that the "carry" between them represents qualitatively different states.

When the DJ played a record in 1989, he played it to the Room. Everyone in that space heard it together, at that moment, in that context. The physical binding (Space) created semantic binding (Pattern)—S=P.

When the Algorithm plays a track in 2026, it plays it to the Vector. Madonna's 1989 hit and a random track from 2024 might occupy the exact same coordinates in "vibe space"—high cosine similarity, adjacent embedding. The algorithm sees them as interchangeable. But one carries 37 years of cultural context, personal memory, and shared meaning. The other is a statistical neighbor. Calculated Proximity cannot tell the difference.

This is Solipsism as a Service: physically together (Space) but semantically alone (Pattern). S≠P at societal scale. The architectural choice to normalize meaning, scattering semantic neighbors across the vector database, doesn't just cause hallucination in LLMs. It causes hallucination in culture. We scroll the same feed in the same room and inhabit parallel semantic universes.

The technical cost is cache misses. The economic cost is Trust Debt. The human cost is a generation that can't tell if their loneliness is psychological or architectural. (It's architectural. The grounding was removed.)

Constrain the symbols → reveal the position → free the agents.

Positional Meaning in Its Simplest Form: The FIM Matrix

Before we see this in practice, let's prove that position can carry meaning using the simplest possible example: a 2×2 matrix.

The FIM has the same concepts on both axes, but the weights are NOT symmetric. The cell's position tells you the semantic direction—no label required.

Convention: Cell (row, col) = edge FROM col TO row

Concrete example—two cells, same concepts, different positions:

| | col=A2 | col=E4 |
|---|---|---|
| row=A2 | -- | 5 |
| row=E4 | 8 | -- |

- Cell (A2, E4) = 5 means: FROM E4 TO A2 (E4 points to A2)

- Cell (E4, A2) = 8 means: FROM A2 TO E4 (A2 points to E4)

Now notice WHERE each cell sits:

- Cell (A2, E4): col=E4 is greater than row=A2 → **upper triangle**

- Cell (E4, A2): col=A2 is less than row=E4 → **lower triangle**

The position tells you the direction:

- **Upper triangle (col > row):** Source is later in ordering than target. Edge points FROM later TO earlier. = Backward edge (dependency).

- **Lower triangle (col < row):** Source is earlier in ordering than target. Edge points FROM earlier TO later. = Forward edge (causation).

In a normalized database, you would need:

- An `edge_type` column: "dependency" vs "causation"

- A `direction` field: "forward" vs "backward"

- Metadata to interpret the relationship

In the FIM, the position encodes all of this. No annotation needed. Where the cell sits tells you what kind of edge it is.

- Cell (E4, A2) = 8: A2 strongly enables E4 (forward, causation)

- Cell (A2, E4) = 5: E4 moderately depends on A2 (backward, dependency)

These weights DIFFER because causation and dependency are not the same relationship. The matrix captures both directions with their distinct strengths.
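The convention above can be checked mechanically. The sketch below stores the two example cells and derives the edge kind purely from where the cell sits relative to the diagonal. The dictionary layout and function names are illustrative assumptions, not the FIM's actual data structure.

```python
ORDER = ["A2", "E4"]  # shared ordering of rows and columns

# weights[(row, col)] = strength of the edge FROM col TO row
weights = {("A2", "E4"): 5, ("E4", "A2"): 8}


def edge_kind(row: str, col: str) -> str:
    """Classify an edge from its position alone; no stored edge_type field."""
    r, c = ORDER.index(row), ORDER.index(col)
    if c > r:
        return "backward (dependency)"  # upper triangle: later -> earlier
    if c < r:
        return "forward (causation)"    # lower triangle: earlier -> later
    return "diagonal (self)"            # assumption: diagonal cells are unused


def describe(row: str, col: str) -> str:
    return f"FROM {col} TO {row}, weight {weights[(row, col)]}, {edge_kind(row, col)}"


if __name__ == "__main__":
    for cell in weights:
        print(cell, "->", describe(*cell))
```

The two cells hold the same pair of concepts but produce different kinds and different weights, and no `edge_type` or `direction` value is stored anywhere.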

Why hasn't this been done before?

Because it's deeply counter-intuitive. Every instinct from database design, software engineering, and academic writing says: label things explicitly. Add an edge_type column. Create a direction enum. Write metadata that describes the relationship.

The idea that position ALONE carries meaning feels like losing information. It feels sloppy. It feels like we forgot to add the labels.

But we didn't forget—we encoded the information structurally. The position IS the label. Upper triangle IS "backward edge." Lower triangle IS "forward edge." No annotation needed because the structure does the work.

This requires unlearning the normalization instinct. That's hard. It's why Codd's Third Normal Form became doctrine and position-as-meaning didn't. The counter-intuitive thing is often the unexplored thing.

Something Interesting Happens When You Subdivide This Way

The spiral staircase reveals something deeper: positions become intuitively meaningful when the grid is subdivided along certain axes.

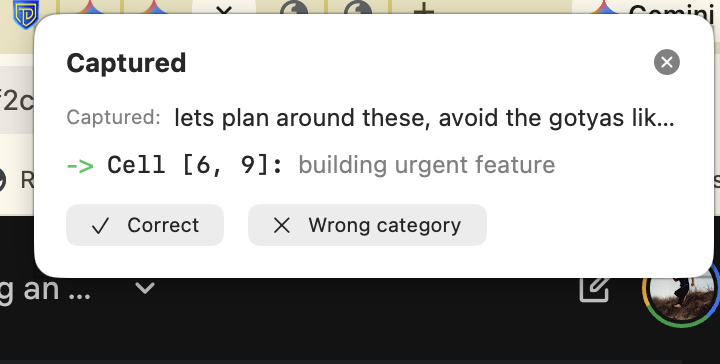

In January 2026, we built ThetaSteer—a macOS daemon that monitors user context and categorizes it into a 12×12 semantic grid using a local LLM (Ollama). The system presents categorizations to the user for confirmation: "Correct" or "Wrong category."

- **Captured:** "lets plan around these, avoid the gotyas..." (raw text observed)

- **Cell [6, 9]: building urgent feature** (LLM's categorization + reasoning)

- **Two buttons:** Human confirms or rejects

What surprised us: the LLM navigated to the correct cell without being trained on the grid structure. Why?

You can feel the time delta in both dimensions:

- **Row axis (0-11):** Strategy (years) → Tactics (weeks) → Operations (days) → Quick (minutes). Strategy and Tactics are the *same kind of thinking*—just at different time scales.

- **Column axis (0-11):** Personal (individual rhythm) → Team (collaborative) → Systems (organizational). You can feel how Personal and Systems operate on different time horizons.

And here's what's strange: when you cross two time-like dimensions, the result looks like space. The grid feels navigable. Positions feel like places. We don't know why this happens, but it does.

- **Row 6 = Tactics.Building** — Work that completes in weeks, currently in construction phase

- **Column 9 = Operations.Urgent** — Affecting operational (daily) rhythms, needs attention now

"Plan around gotchas" genuinely exists at that intersection: tactical-scale work (weeks) affecting operational urgency (daily rhythm). The LLM found it because it's really there.

When the human clicks "Correct," they're not making a judgment call. They're cryptographically signing that the text-to-coordinate mapping is Ground Truth. The semantic meaning (what the text is about) equals the position (coordinates [6,9]) equals the hardware location (where it's stored in SQLite).

The "Wrong category" button is equally important: it breaks the echo chamber. When the local LLM miscategorizes, the human correction resets the grounding age for that semantic region. Anti-drift becomes a physical property of the architecture.
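The confirm/reject loop can be sketched as a small state machine. This is not ThetaSteer's code: the class names, the `grounding_age` counter (LLM proposals since the last human verdict), and the choice to reset it on both buttons are assumptions made for illustration. The cryptographic signing and SQLite storage mentioned above are left out.

```python
from dataclasses import dataclass, field

GRID_SIZE = 12  # the 12x12 grid described in the text


@dataclass
class Cell:
    confirmed: list[str] = field(default_factory=list)  # human-verified texts
    grounding_age: int = 0  # LLM proposals since the last human verdict


class Grid:
    def __init__(self) -> None:
        self.cells = {
            (r, c): Cell() for r in range(GRID_SIZE) for c in range(GRID_SIZE)
        }

    def propose(self, row: int, col: int) -> Cell:
        """The LLM suggests a cell; unverified, so the cell's age grows."""
        cell = self.cells[(row, col)]
        cell.grounding_age += 1
        return cell

    def verdict(self, row: int, col: int, text: str, correct: bool) -> None:
        """Human clicks 'Correct' or 'Wrong category'; either resets the age."""
        cell = self.cells[(row, col)]
        if correct:
            cell.confirmed.append(text)  # mapping accepted as ground truth
        cell.grounding_age = 0


if __name__ == "__main__":
    grid = Grid()
    grid.propose(6, 9)
    grid.verdict(6, 9, "lets plan around these, avoid the gotyas...", correct=True)
    print(grid.cells[(6, 9)])
```

A cell with a high age has had many unverified proposals since a human last looked at it, so the counter shows where review is overdue.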

This is working software. The theory in this book predicted that:

- Meaning should have spatial coordinates (S=P=H)

- Those coordinates should be navigable without explicit training (if the grid matches reality)

- Human verification should anchor the system against drift

ThetaSteer demonstrates all three. The grid isn't representing meaning—it IS meaning. Why the time-scale quality on both axes? We don't force a conclusion. But something interesting is happening here.

Critical Distinction: This Is NOT Orch OR

A common dismissal awaits: "This is just Penrose-Hameroff quantum consciousness repackaged."

No. The distinction is fundamental.

Orch OR (Penrose-Hameroff) claims consciousness arises from gravitational collapse of quantum superpositions in neural microtubules. It operates at quantum scales (nanometers, femtoseconds) and requires exotic physics—quantum coherence maintained in warm, wet biological systems.

S=P=H (this book) claims distributed systems require Grounded Position to maintain precision. S=P=H IS position—true position via physical binding. It operates at classical scales (millimeters to meters, milliseconds to seconds) and requires only thermodynamics—the same physics governing every heat engine.

| Aspect | Orch OR | S=P=H |
|---|---|---|
| Mechanism | Gravitational collapse | Thermodynamic coherence |
| Scale | Quantum (nm, fs) | Classical (mm-m, ms-s) |
| Claim | Consciousness requires quantum gravity | Precision requires Grounded Position |
| Evidence | Microtubule coherence times | Error rates in AI/financial systems |
| Falsifiable by | Decoherence measurements | Drift rate measurements |

We're not claiming consciousness. We're claiming that when semantic, physical, and hardware positions diverge (violating S=P=H), precision degrades at measurable rates. The 0.3% drift, the 361-state limit, the 7.22-second coherence window—these are thermodynamic observations, testable with classical instruments.

When a critic says "you're just doing quantum woo," point them to Appendix N (Falsification Framework). If they can demonstrate a classical system achieving sub-0.1% drift without Grounded Position, S=P=H is falsified. No quantum mechanics required for the test—or for the theory.

Thermodynamic, not gravitational. Classical, not quantum. Measurable, not mystical. Position, not proximity.

Absorbing States: Why LLMs Get Stuck (2025 Physics Confirmation)

Recent research in statistical physics reveals why ungrounded systems don't just drift—they get trapped.

Tamai et al. (arXiv:2307.02284v3) demonstrated that neural networks exhibit absorbing states—configurations the system can enter but cannot escape. In physics terminology: an absorbing state is a fixed point of the dynamics where the system loses the capacity for self-correction.

Translation to LLMs: When an LLM hallucinates, it's not just making an error. It's entering an absorbing state. The system cannot detect its own wrongness because detection requires external grounding—a coordinate system outside the model's embedding space. Without ground truth coordinates, the hallucination feels as confident as fact. The system has no mechanism to escape.

Why this matters for S=P=H: Grounded Position systems have escape routes. Every semantic state has a physical address that can be checked. When you verify "is customer-1234 at coordinate (1000, 50000, 7)?", you're performing a collision check—does semantic position equal physical position? If not, the discrepancy is detectable. You can escape the absorbing state because you have external coordinates to check against. This is Grounded Position—true position via physical binding. Calculated Proximity (cosine similarity, vectors) cannot provide escape routes.
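The collision check described above can be sketched in a few lines. The registry, the second entity, and the function name are hypothetical; only the `customer-1234` coordinate comes from the text.

```python
from typing import Dict, Tuple

Coordinate = Tuple[int, int, int]

# Hypothetical registry of where each entity physically lives.
PHYSICAL_POSITION: Dict[str, Coordinate] = {
    "customer-1234": (1000, 50000, 7),   # coordinate used in the text
    "customer-9999": (1200, 50000, 7),   # invented for illustration
}


def collision_check(entity: str, claimed: Coordinate) -> bool:
    """True only if the claimed semantic position matches the physical one."""
    actual = PHYSICAL_POSITION.get(entity)
    return actual is not None and actual == claimed


print(collision_check("customer-1234", (1000, 50000, 7)))  # match
print(collision_check("customer-1234", (999, 50000, 7)))   # detectable drift
```

The point of the sketch is that a mismatch is a yes/no fact checked against an external record, not a similarity score computed inside the model.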

The formula connection: The (c/t)^n formula provides the escape velocity. When c is large relative to t (unfocused search), you're searching the whole space—expensive but thorough. When c is small (focused by grounding), you search locally and can verify quickly. Absorbing states persist when verification cost exceeds available budget. S=P=H makes verification cheap enough to attempt—and that's the difference between getting stuck and finding ground.

The Freedom Paradox

When symbols drift arbitrarily—when "customer order" can point to any memory address, when category labels reorganize without constraint—we tell ourselves this is flexibility. Portability. Freedom.

Arbitrary authority over symbols destroys agent capacity for truth.

You think you have freedom: flexible schemas, abstract data models, code that runs anywhere. But you've lost agency. When symbols can mean anything, discernment becomes prohibitively expensive. Verification collapses. The system that "just works" cannot explain why it works—or prove that it does.

This is the control inversion paradox: When symbols drift freely, you are TRAPPED—controlled by their shifting meanings, unable to reason reliably. When symbols are fixed to precise coordinates in semantic space, you are FREE—you have solid ground to stand on, agency to manipulate meaning deliberately. Drift feels like freedom but is actually captivity. Precision feels like constraint but is actually liberation.

Metavector Context: 🟢C7🔓 Freedom Inversion ↓ 9🟢C1🏗️ Unity Principle (S≡P≡H provides fixed ground) 9🔴B5🔤 Symbol Grounding (grounding enables agency) 8🔴B8⚠️ Arbitrary Authority (drift serves power, not truth) 8🟠F7📊 Compounding Verities (fixed coordinates enable truth to compound) 7⚪I1🎯 Discernment (precision creates capacity for truth-seeking)

Freedom Inversion is the moral core of Unity Principle. Codd's normalization feels like flexibility but creates semantic imprisonment—every query requires disambiguation, every decision requires verification from scratch. FIM constrains symbols to fixed coordinates, which feels rigid but creates agent freedom: you can reason, verify, and act on stable ground. This isn't technical philosophy—it's the difference between being controlled by drift versus having agency over meaning.

The mechanism of being trapped: Without fixed coordinates, you can't build reasoning chains. Every inference requires verifying the current meaning of each symbol before using it. "Customer" could mean billing entity, shipping address, user account, support ticket, or session ID—each query must disambiguate before proceeding. This verification cost compounds geometrically: with n symbols in a reasoning chain, and each symbol having t possible interpretations, you face t^n verification paths. When t is large (many possible meanings) and n is deep (multi-step reasoning), verification becomes intractable. You're trapped in disambiguation work. Without grounding, even when you need just one specific meaning (c is tiny), you can't jump O(1) to its location like striking a tennis ball in embodied cognition—because semantic ≠ physical. The symbols are scattered arbitrarily across memory. No coordinates means no direct addressing.
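To make the t^n growth concrete, here is a small sketch. It assumes t = 5, matching the five candidate meanings of "customer" listed above, and varies the chain depth n; the function name is illustrative.

```python
def verification_paths(t: int, n: int) -> int:
    """Number of interpretation combinations for n symbols, t meanings each."""
    if t < 1 or n < 0:
        raise ValueError("t must be >= 1 and n must be >= 0")
    return t ** n


# Five meanings per symbol, reasoning chains of increasing depth.
for depth in (1, 3, 5, 7):
    print(depth, verification_paths(5, depth))
```

At depth 7 the count is already 78,125 combinations, which is why disambiguation dominates the work long before the reasoning itself does.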

The mechanism of being free: FIM provides coordinates (c, t, n) that fix each symbol's position in semantic space. "Customer" at coordinate (c=1000 active accounts, t=50000 total entities, n=7 dimensions) has a specific, measurable position. Reasoning chains compose deterministically: if A is at (c₁,t₁,n₁) and B is at (c₂,t₂,n₂), and your inference requires A→B, you can verify using Φ = (c/t)^n whether this path is traversable with acceptable precision. The coordinates give you HANDLES to manipulate meaning. You're not guessing what "customer" means—you're computing with its position. This is agency: deliberate navigation through structured space instead of reactive disambiguation in scattered chaos.
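As a worked example of Φ = (c/t)^n, the sketch below plugs in the coordinate from the paragraph above (c = 1000, t = 50000, n = 7). The function name and the input validation are assumptions added for illustration.

```python
def phi(c: float, t: float, n: int) -> float:
    """Evaluate (c/t)^n for a focused set c within a total space t."""
    if not (0 < c <= t):
        raise ValueError("expected 0 < c <= t")
    if n < 0:
        raise ValueError("expected n >= 0")
    return (c / t) ** n


# c/t = 0.02, raised to the 7th power.
print(phi(1000, 50000, 7))
# When the focused set is the whole space, the ratio is exactly 1.
print(phi(50000, 50000, 7))
```

With these inputs c/t is 0.02, so the result is 0.02^7, roughly 1.28 × 10^-12 of the full search space.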

FIM inverts this. By constraining symbols (reducing their degrees of freedom), it frees agents to seek truth. Your motor cortex doesn't debate which neuron controls your thumb—position 47 controls thumb extension because geometric necessity demands it, not arbitrary authority. This constraint makes motion computable, instant, certain.

This is normalization AND alignment in the most precise way possible:

- Normalized databases: Symbols scattered arbitrarily → cheap to store → expensive to verify

- Unity Principle: Symbols constrained geometrically → semantic=physical → verification free

Normalization cannot provide fixed ground. When you scatter semantic neighbors across random memory addresses (violating S≡P≡H), you make coordinates impossible. "Customer" could be at address 0x4A2F or 0x8B1C or anywhere—the pointer is arbitrary. You have no geometric relationship to reason about. Codd's normalization explicitly rejects the constraint that position = meaning. This is the structural source of drift: when physical position is decoupled from semantic meaning, symbols float free. You're left with labels pointing to arbitrary locations, not coordinates in structured space.

Unity provides fixed ground through S≡P≡H. When semantic position equals physical position equals hardware optimization (S≡P≡H), the FIM coordinates (c, t, n) map DIRECTLY to memory addresses. "Customer" at (c=1000, t=50000, n=7) occupies a specific, computable cache line. The coordinate IS the address. This turns abstract semantic relationships into geometric facts. And here's where philosophy becomes physics: cache miss rate becomes the control signal. When you access semantically related data and trigger a cache miss, hardware is telling you that S≡P≡H was violated and that symbols have become ungrounded. Not logs, not audits: instant physical feedback at nanosecond timescales.

The constraint isn't limitation—it's liberation. When position equals meaning, truth becomes computable. Cache miss rate tells you immediately when symbols drift. Hardware enforces what arbitrary authority never could: semantic stability.

Evidence from language itself: Why do we have words PLURAL—thousands of them—instead of just one? Because semantic space is differentiated. If there were no orthogonal structure, no fixed dimensions distinguishing meanings, a single symbol would suffice. But we need MANY words precisely because they occupy DIFFERENT coordinates in semantic space. Words drift over centuries, yes—but they drift WITHIN this structured net, maintaining relative positions. The very plurality of language proves that semantic structure exists independent of our acknowledgment. Without the net, there's no basis for "different"—everything collapses to noise.

But the net alone isn't enough—it must be embodied. Vector databases capture semantic structure: they measure similarity, cluster related concepts, navigate high-dimensional spaces. But they cannot be embodied. Why? Because similarity scores are computational artifacts, not physical affordances. You can't REACH for a vector—you can only query it. The database doesn't experience the act of retrieval as motion through physical space.

Hebbian learning creates physical constellations. "Neurons that fire together wire together" doesn't just build associations—it creates SPATIAL patterns in neural tissue. When you learn "Sarah," visual features (face geometry), emotional valence (warmth, trust), linguistic labels (the word "Sarah"), and motor programs (smile response) become physically co-located in a neural assembly. This assembly occupies a specific region of cortex, connected by dendrites with measurable physical paths. The constellation isn't a metaphor—it's actual spatial organization of tissue.

This is why embodied cognition enables O(1) jumps. When you see Sarah's face, you don't search through all possible people and compute similarity scores. You REACH for the assembly—your brain directly addresses the physical location where Sarah's concept lives. This is like striking a tennis ball: you don't calculate trajectories consciously, you react in situ because the motor program is physically instantiated at precise cortical coordinates. The grounding isn't abstract—it's geometric. Position 47 in motor cortex controls thumb extension because that's where the thumb assembly physically lives.

Vector databases are signposts; embodied cognition is the journey. When you query a vector database, you experience the system "reaching for" results: searching, ranking, retrieving. But the database doesn't experience anything. It computes distances in abstract space, not motion through physical space. Embodied cognition is different: you FEEL yourself reaching toward meaning. This feeling is the physical act of neural assemblies activating, dendrites firing, neurotransmitters crossing synapses. The signposts (words, symbols, coordinates) guide the journey, but the journey itself is embodied: it's actual motion through physical semantic space (cortical tissue).

Why this matters for S≡P≡H: Vector databases prove that semantic structure exists (the net is real). But only physical instantiation—Hebbian assemblies where semantic neighbors are physically adjacent—enables the O(1) direct addressing that makes consciousness possible within the 10-20ms window. Abstract similarity spaces can be arbitrarily large and slow. Physical assemblies are constrained by hardware: dendrite lengths, synapse speeds, metabolic costs. These constraints force the geometric optimization that semantic ≡ physical ≡ hardware demands. The net must be embodied to be fast enough for consciousness.

Edge cases that prove the rule:

What about synonyms like "large" vs "big" vs "huge"? They seem to violate the claim that different words occupy different coordinates. But look closer: these occupy NEARBY coordinates on an intensity gradient along the size dimension. "Big" is general, "large" is formal, "huge" is extreme. They map to different regions of the continuous semantic space. Synonyms don't break the orthogonal net—they reveal it's CONTINUOUS, not discrete. The fact that we need multiple words for graduated intensities proves the dimensional structure exists.

What about homonyms like "bank" (financial institution) vs "bank" (riverbank)? Same word, completely different meanings—doesn't this show symbols can point anywhere? No. The LABEL (string "bank") is not the coordinate. The coordinate is meaning-in-context. When you say "I went to the bank" in a financial conversation, you activate the institution coordinate. In a geography discussion, you activate the river coordinate. Context (surrounding semantic coordinates) disambiguates. The orthogonal net is what ENABLES this disambiguation—without structured dimensions, context wouldn't constrain meaning.

What about historical drift? "Nice" meant "foolish" in the 1300s, now means "pleasant." Didn't the coordinate change arbitrarily? Look at the path: foolish → silly → simple → agreeable → pleasant. This isn't random teleportation—it's drift along a semantic gradient (negative valence → neutral → positive). The axes persisted; the word drifted along them. Historical linguistics traces these paths precisely because they follow structured patterns. Without the orthogonal net, etymology would be impossible—there'd be no structure to historical change.

The snapshot principle: At any given moment, language is a snapshot of the orthogonal net. You and I can communicate precisely TO THE EXTENT that we share the same snapshot—same era, same culture, same domain. We don't need eternal fixed ground; we need SHARED fixed ground. The more our snapshots overlap, the better we communicate. This is what "closer" means in "closer to the truth"—not absolute coordinates, but COORDINATED snapshots. Historical texts are harder to understand because we're reading a DIFFERENT snapshot. Domain experts communicate effortlessly because they share a MORE ALIGNED snapshot. The net structure persists; individual positions within it drift; communication quality correlates with snapshot overlap.

What about natural language ambiguity? English works fine despite vagueness—doesn't this prove drift doesn't trap us? Actually, we're CONSTANTLY doing disambiguation work. "Apple" activates the fruit coordinate in "farmers market" context, the tech-company coordinate in "WWDC conference" context. You don't notice the work because your brain does it automatically using surrounding coordinates (context). In databases where context is stripped away, this ambiguity DOES trap us—"customer" without context could mean billing entity, shipping address, or user account. We paper over the trap with business logic, JOIN operations, and manual verification. Natural language works BECAUSE we maintain rich context (many surrounding coordinates); databases fail BECAUSE they strip context and scatter semantic neighbors.

What about creativity—poetry, metaphor? "Juliet is the sun" doesn't require fixed coordinates, right? Wrong. Metaphor works precisely BECAUSE it navigates the semantic net deliberately. "Juliet" (warmth, life-giving, center of orbit) overlaps with "sun" (warmth, light, gravitational center). The metaphor reveals SHARED coordinates. If semantic space weren't structured, metaphor would be incomprehensible—there'd be no basis for similarity. Poetry is evidence of deliberate navigation through structured semantic space, not evidence against it.

The edge cases STRENGTHEN the argument: They show the orthogonal net is necessary for language to work at all. Drift happens within structure (not randomly). Ambiguity is resolved via neighboring coordinates (context). Creativity navigates deliberately through semantic space. Multiple words reveal continuous dimensions. The structure isn't optional—it's the foundation that makes meaning possible.

Constrain the symbols → free the agents.

This Is Happening to You Right Now

Your production database is trapped RIGHT NOW. Not theoretically. Not eventually. Now.

Run perf stat -e cache-misses on your next query. Watch the counter. Every cache miss is a symbol that has become ungrounded: a semantic neighbor that should have been physically adjacent but wasn't. Your database scattered it. Now you're paying the 100ns DRAM penalty. Multiply that by millions of rows across 5-table JOINs. The t^n verification explosion isn't abstract philosophy. It's the wall-clock latency you measure in milliseconds.

You've normalized the pain. You think 50ms query time is "good enough." You've added caching layers, read replicas, indexes on every foreign key. Indexes help you FIND the rows fast—but once found, normalization guarantees they're scattered across memory. You're compensating with hardware because you can't see the structural trap. But the cache misses are screaming at you—they're the physical signal that symbols have no fixed ground. Every JOIN is disambiguation work. Every query scatters across random memory addresses because Codd's normalization explicitly forbids position = meaning.

Metavector Context: 🟡D1⚙️ Cache Hit/Miss Detection ↓ 9🔴B4💥 Cache Miss Cascade (scattered data triggers misses) 8🔴B1🚨 Codd's Normalization (S≠P creates scatter) 8🟢C3📦 Cache-Aligned Storage (S≡P eliminates misses) 7🟡D5⚡ 361× Speedup (measured benefit of alignment)

Cache miss rate isn't a performance metric—it's a substrate truth detector. Every cache miss is hardware proving that S≠P. When semantic neighbors (User + Orders) scatter across random addresses, CPUs waste 100-300ns per DRAM fetch. Multiply across millions of rows and 5-table JOINs, and you're paying geometric penalties. Cache-aligned storage (S≡P≡H) eliminates this—semantic neighbors become sequential memory access, L1 cache hits at 1-3ns.
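A back-of-envelope sketch of that penalty, using the lower bounds of the latency ranges quoted above (1ns for an L1 hit, 100ns for a DRAM fetch). This is a two-level simplification that ignores L2/L3, prefetching, and overlap between outstanding misses; the function name and workload sizes are assumptions.

```python
L1_HIT_NS = 1       # lower bound of the 1-3ns range quoted above
DRAM_MISS_NS = 100  # lower bound of the 100-300ns range quoted above


def access_cost_ns(accesses: int, misses: int,
                   hit_ns: int = L1_HIT_NS,
                   miss_ns: int = DRAM_MISS_NS) -> int:
    """Total latency if every access is either an L1 hit or a DRAM miss."""
    if not 0 <= misses <= accesses:
        raise ValueError("expected 0 <= misses <= accesses")
    return (accesses - misses) * hit_ns + misses * miss_ns


rows = 1_000_000
scattered = access_cost_ns(rows, misses=rows)  # every access misses
aligned = access_cost_ns(rows, misses=0)       # every access hits
print(scattered / 1e6, "ms vs", aligned / 1e6, "ms")
```

Under these assumptions a million fully scattered accesses cost about 100ms against about 1ms when every access hits, a 100× gap before any JOIN logic runs.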

The cost compounds per-operation. At k_E = 0.003 (0.3% entropy per operation), semantic drift isn't static. Every schema migration, every new foreign key, every abstraction layer adds another degree of freedom for symbols to drift. You're not maintaining a stable system—you're fighting exponential decay. Trust Debt accumulates. The verification cost (JOINs, business logic, manual QA) grows geometrically while you tell yourself you're "staying agile."
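The compounding can be sketched numerically. The model below assumes drift compounds multiplicatively, so precision after n operations is (1 - k_E)^n; that reading of "0.3% per operation" is an assumption, and the function names are illustrative.

```python
import math

K_E = 0.003  # 0.3% entropy per operation, as stated in the text


def retained_precision(operations: int, k_e: float = K_E) -> float:
    """Fraction of precision left after a number of operations."""
    return (1.0 - k_e) ** operations


def operations_until(threshold: float, k_e: float = K_E) -> int:
    """Smallest operation count at which precision is at or below threshold."""
    return math.ceil(math.log(threshold) / math.log(1.0 - k_e))


for ops in (10, 100, 1000):
    print(ops, round(retained_precision(ops), 4))
print("half-life in operations:", operations_until(0.5))
```

Under this model precision halves after 231 operations, which is why small per-operation drift still demands continuous compensation.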

You can measure this. Cache miss rate, query latency, JOIN cardinality, index scan ratios—these aren't performance metrics, they're ungrounding metrics. They're hardware telling you that S≡P≡H is violated. When you access "customer.orders" and trigger 1000 cache misses across scattered foreign keys, physics is showing you the cost of symbols without coordinates. When your "simple" query requires 7 JOINs to synthesize what should have been contiguous, you're seeing t^n verification explosion in action.

This isn't a database problem. It's a symbol grounding problem. And it's solvable. But first you need to see the trap you're in.

Beyond Control Theory: The Zero-Entropy Control Loop

The Unity Principle must have a control metric equivalent to "standing upright," or the entire thesis collapses.

The single metric the Unity Principle relentlessly drives toward is Structural Certainty (Rc → 1.00). This certainty is measured not by an abstract error signal, but by a simple, physical metric: the Cache Miss Rate.

The Flaw of Reactive Control Theory

Classical Control Theory (CT) is the mathematical discipline of achieving stability in systems prone to disturbance. From a biological perspective, CT describes the function of the Cerebellum (posture, balance, homeostasis). From Codd's perspective, it describes a normalized database (logging, auditing, failover, ACID transactions).

The fundamental flaw in CT is that it is a mathematics of perpetual reaction:

- **The Goal is Minimal Error (Δx → 0):** The system constantly measures the distance between target state and actual state.

- **The Signal is Error (e):** The control loop is triggered by the error signal (your body senses it's leaning, a database detects a log failure).

- **The Flaw:** By defining itself in opposition to error, it makes its own work eternal. The system never eliminates the **source** of disturbance (entropy k_E); it only **compensates** for it.

Classical Control Theory is mathematically designed for perpetual entropy cleanup.

This perpetual cleanup is the Synthesis Penalty—the constant, costly work of JOINing scattered data just to verify if the 0.3% per-decision drift has been contained.

The Zero-Entropy Control Loop (ZEC)

The Unity Principle (S≡P≡H) does not seek to minimize error; it seeks to eliminate the structural possibility of error. It doesn't rely on an abstract error signal (e); it relies on a hardware signal: the Cache Miss Rate (1-H).

The single, measurable metric the entire system is driven toward is Structural Cohesion (kS), which is the physical manifestation of maximum precision (Rc → 1.00).

| Feature | Classical Control (Codd/Cerebellum) | Zero-Entropy Control (Unity/Cortex) |

|---|---|---|

| Metric of Stability | Minimal Error (Δx → 0) | Maximum Precision (Rc → 1.00) |

| Control Signal | Error Signal (e) / Log Failure | Cache Miss Rate (1-H) |

| Core Action | Compensate (Run JOINs, Apply PID feedback, Log/Audit) | Maintain (Preserve orthogonal substrate, Insert data maintaining S≡P≡H invariant) |

| Stability Achieved | Reactive Stability (Perpetual fight against k_E) | Structural Stability (Source of k_E eliminated) |

| The Equivalent | Standing Upright (Perpetually correcting gravity's pull) | Perfect Cohesion (Where S≡P is instantaneous, LUnity → 0) |

Nested View (following the thought deeper):

🟡D4⚙️ Control Paradigms

├─ 🔴B1🚨 Classical Control Theory Codd/Cerebellum

│ ├─ Assumes permanent entropy source

│ ├─ Goal: minimize error (Δx → 0)

│ ├─ Method: perpetual feedback

│ └─ Result: eternal compensation work

└─ 🟢C1🏗️ Zero-Entropy Control Unity/Cortex

  ├─ Entropy source is eliminable

  ├─ Goal: maximize structural certainty

  ├─ Method: permanent reorganization

  └─ Result: error structurally impossible

Dimensional View (position IS meaning):

[🔴B1🚨 Classical Control] -----------------> [🟢C1🏗️ Zero-Entropy Control]

| Dimension | Classical Control | Zero-Entropy Control |

|---|---|---|

| Entropy assumption | "permanent" | "eliminable" |

| Goal | minimize error | eliminate error source |

| Cost profile | perpetual (O(n) per query) | upfront (O(1) at write) |

What This Shows: The nested view presents these as two "approaches" you might choose between. The dimensional view reveals the fundamental asymmetry: Classical Control ASSUMES entropy is permanent, which forces perpetual cost. Zero-Entropy Control recognizes that database entropy is architectural (we CREATED scatter by normalizing), therefore eliminable. The choice isn't "which approach" - it's "what do you believe about the source of disorder?"

Critical Economic Insight: This is not merely an engineering optimization. When Rc approaches 1.00, systems following the Unity Principle generate massive Trust Equity—the measurable financial value of structural alignment. Classical Control systems accumulate Trust Debt through constant compensation. Unity systems build Trust Equity through alignment. See Appendix E, Section 7 for complete mathematical formalization and real-world case studies (healthcare, financial, and neural interface systems generating billions in value from perfect alignment).

The Control Loop Inversion

- **Entropy as Control Signal:** In ZEC, a **Cache Miss** (1-H) is the direct, physical signal that orthogonal substrate maintenance is required. It means new data must be inserted to preserve S≡P≡H across all dimensions.

- **The Maintenance Action:** This signal triggers orthogonal substrate maintenance—inserting data into the correct position across all dimensions to PRESERVE (not repair) the S≡P≡H invariant.

- **The Result:** The system only stops driving when Rc is maximized and the kS acceleration is realized through perfect orthogonal maintenance.
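One way to picture "insert to preserve, not repair" is a store that places every record at its coordinate-determined position at write time. The sketch below uses a sorted Python list as a stand-in for a physically ordered substrate; the class, its methods, and the sample coordinates are hypothetical, and a Python list says nothing about real cache-line placement.

```python
import bisect


class OrderedStore:
    """Keeps records sorted by coordinate so neighbors stay adjacent."""

    def __init__(self):
        self._rows = []  # sorted list of (coordinate, payload) tuples

    def insert(self, coordinate, payload):
        # Place the record at its sorted position on write, so the
        # ordering invariant never needs a later repair pass.
        bisect.insort(self._rows, (coordinate, payload))

    def is_ordered(self):
        pairs = zip(self._rows, self._rows[1:])
        return all(a[0] <= b[0] for a, b in pairs)

    def payloads(self):
        return [payload for _, payload in self._rows]


store = OrderedStore()
store.insert((2, 1), "order-B")
store.insert((1, 5), "customer-A")
store.insert((2, 0), "order-A")
print(store.payloads(), store.is_ordered())
```

The design choice being illustrated is where the cost lands: each write pays to find its position, and reads then see related records side by side.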

The equivalent to "standing upright" is not simply avoiding data errors; it's the structural guarantee that the system's logic and memory are physically inseparable.

Why This Matters

Control theory systems oscillate around their target because they're always REACTING to error.

Unity systems achieve stability because cache misses provide INSTANT, PHYSICAL feedback:

- Traditional: "Check logs, run audit, detect drift, schedule JOIN" (minutes to hours)

- Unity: "Cache miss detected" (nanoseconds)

The hardware itself tells you when S≡P≡H is violated. No logs. No audits. Just physics.

This is not metaphor—it's measurable via performance counters (perf stat -e cache-misses).

The Cortex-Cerebellum Divide: Two Architectures, Two Control Systems

This is the ultimate distinction. The reason Unity Principle matters beyond efficiency.

Classical Control Theory and the Zero-Entropy Control Loop aren't just different optimization strategies. They're fundamentally different architectures of consciousness itself.

The Unconscious Control Loop (Cerebellum = Codd = Classical CT)

Architecture: Reactive compensation.

Example: Your cerebellum maintaining balance

You're standing on a boat that rocks sideways. Your cerebellum detects the disturbance via vestibular sensors (inner ear equilibrium organs).

- Fire leg muscles to counteract sway

- Measure new angle: now 1 degree

- Update error signal: e = 1 degree

- Apply less force

- Measure again: now 0.5 degrees

- Continue correcting...

The key property: The error signal never goes to zero. Gravity is always pulling. Water is always moving. Your cerebellum perpetually compensates for the disturbance source it cannot eliminate.

This is Classical Control Theory in meat: Balance via perpetual feedback, never eliminating the source of entropy (gravity, waves), only compensating for its effects.
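The correction loop above can be sketched as a proportional-only controller facing a constant disturbance. The gain, disturbance size, starting angle, and function name are illustrative assumptions, and this is a deliberate simplification: it models only the "measure error, apply proportional force" loop described here, not controller design in general.

```python
def simulate_balance(initial_angle, disturbance, gain, steps):
    """Return the sway angle after each correction step (target is 0)."""
    angle = initial_angle
    history = []
    for _ in range(steps):
        error = angle               # distance from upright
        correction = gain * error   # less force as the error shrinks
        # The disturbance keeps pushing on every step; the controller
        # only reacts to the error it has already measured.
        angle = angle + disturbance - correction
        history.append(angle)
    return history


angles = simulate_balance(initial_angle=3.0, disturbance=0.2,
                          gain=0.4, steps=50)
print(round(angles[0], 3), round(angles[-1], 3))
```

With these numbers the angle settles at disturbance / gain = 0.5 degrees rather than zero: the loop stabilizes the sway but keeps working against a source it never removes.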

Database equivalent (🔴B1🚨 Codd normalization):

Users table: Store user profiles

Orders table: Store orders

Addresses table: Store shipping addresses

Preferences table: Store user settings

...

Query: "Get complete user profile"

Required action: 🔴B2🔗 JOIN 5 tables

Entropy source: Scattered data cannot be eliminated

Compensation: Run JOINs repeatedly, validate consistency, audit for drift

Result: Perpetual work to maintain coherence

The defining principle: Normalize to reduce storage redundancy. Accept that retrieval requires synthesis (🔴B2🔗 JOINs). Run compensation loops forever (audits, validation, consistency checks).
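The contrast can be sketched by counting lookups. The example uses only the four tables named above (the elided ones are left out), with invented sample rows and hypothetical function names. Python dictionaries do not model memory layout, so this counts synthesis steps, not cache misses.

```python
# Normalized layout: one structure per table, keyed by user id.
TABLES = {
    "users": {1: {"name": "Sarah"}},
    "orders": {1: [{"order_id": 10}, {"order_id": 11}]},
    "addresses": {1: {"city": "Lisbon"}},
    "preferences": {1: {"newsletter": True}},
}


def profile_via_joins(user_id):
    """Assemble the profile by visiting every table: one lookup each."""
    profile, lookups = {}, 0
    for name, table in TABLES.items():
        profile[name] = table[user_id]
        lookups += 1
    return profile, lookups


# Co-located layout: everything about a user stored under one key.
COLOCATED = {
    uid: {name: table[uid] for name, table in TABLES.items()}
    for uid in TABLES["users"]
}


def profile_colocated(user_id):
    """Fetch the whole profile with a single lookup."""
    return COLOCATED[user_id], 1


print(profile_via_joins(1)[1], "lookups vs", profile_colocated(1)[1])
```

Both functions return the same profile; the difference is that the normalized path has to repeat its synthesis on every read.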

The consciousness consequence: Cerebellum handles unconscious processes (balance, breathing, heartbeat, reflexes). It's reactive. It doesn't require conscious awareness. It just perpetually compensates.

The Conscious Control Loop (Cortex = 🟢C1🏗️ Unity = Zero-Entropy CT)

Architecture: Structural elimination.

Example: Your cortex recognizing a friend's face

You see a face. Three concepts activate simultaneously in your cortex:

- Visual features (eyebrow shape, nose structure)

- Semantic identity ("This is Sarah")

- Emotional memory ("Sarah makes me happy")

These neurons are physically co-located (or densely connected via local synaptic circuits, S≡P≡H).

[E7🔌] The Neurological Mechanism: Hebbian Learning Creates Structural Certainty

"Cells that fire together, wire together." (a common paraphrase of Donald Hebb's 1949 postulate)

This isn't metaphor. It's measurable neuroscience:

[E8💪] Long-Term Potentiation (LTP):

- When two neurons fire simultaneously (within ~20ms), the synapse between them physically strengthens

- AMPA receptors increase at the postsynaptic membrane

- Dendritic spines enlarge, new synaptic connections form

- Timeline: Milliseconds to activate → Hours to consolidate → Permanent structural change

The first time you meet Sarah:

- Visual cortex fires (face features detected)

- Hippocampus fires (new memory encoding)

- Amygdala fires (emotional context)

- These neurons fire TOGETHER (within 20ms window)

- The synaptic connections between these neurons physically strengthen (LTP)

- The neurons become a **stable firing assembly** - they preferentially activate each other

- Eventually: Visual input (Sarah's face) → Instant cascade across entire assembly

- Result: Visual + Semantic + Emotional activate as ONE UNIT (10-20ms)
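The sequence above can be sketched with the plain Hebbian rule, where a connection grows by rate × activity_a × activity_b on each co-firing. The three unit names follow the list above; the learning rate, threshold, and function names are illustrative assumptions, and the rule is simplified (no decay, no upper bound on weights).

```python
from itertools import combinations

UNITS = ["visual", "hippocampus", "amygdala"]


def train(co_firings, rate=0.1):
    """Strengthen every pairwise connection once per co-firing event."""
    weights = {frozenset(pair): 0.0 for pair in combinations(UNITS, 2)}
    for _ in range(co_firings):
        activity = {unit: 1.0 for unit in UNITS}  # all fire together
        for pair in weights:
            a, b = tuple(pair)
            weights[pair] += rate * activity[a] * activity[b]
    return weights


def recall(weights, cue, threshold=0.5):
    """Units activated when only the cue fires."""
    active = {cue}
    for pair, weight in weights.items():
        if cue in pair and weight >= threshold:
            active |= pair
    return active


print(sorted(recall(train(2), "visual")))    # weak links: cue only
print(sorted(recall(train(10), "visual")))   # strong links: whole assembly
```

After enough co-firings the visual cue alone recruits the full assembly, which is the "one unit" behavior the list ends on.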

[E10🧲] This solves the "binding problem":

Classical neuroscience asks: "How does the brain bind separate features (color, shape, motion, identity) into unified perception?"

Metavector Context: 🟣E10🧲 Binding Problem Solution ↓ 9🟢C1🏗️ Unity Principle (S≡P≡H eliminates binding gap) 9🟡D2📍 Physical Co-Location (semantic neighbors physically adjacent) 8🟣E7🔌 Hebbian Learning (fire together, wire together creates assemblies) 8🔴B6🧩 Binding Problem (classical neuroscience mystery) 7🟢C6🎯 Zero-Hop Architecture (synthesis within ΔT epoch)

The Binding Problem isn't solved by a mechanism—it's dissolved by architecture. When visual cortex (face features), semantic networks (identity), and emotional centers (memory) are physically co-located through Hebbian learning, there's no "binding step" required. The unified percept "Sarah" emerges because those neurons ARE a single physical assembly. Sequential access across adjacent dendrites in 10-20ms. No synthesis gap. No binding problem.

Unity Principle answer: Physical co-location eliminates the binding problem. The concept "Sarah" IS the spatially-organized firing assembly. There's no separate "binding step" because Semantic ≡ Physical ≡ Hardware from the start.

You don't run a corrective loop: "Is this Sarah? Probably 80%. Let me check more features. Now 92%. Now 97%..."

No. You KNOW. P=1. Instant certainty.

Why? Because structural organization eliminated the possibility of synthesis gap:

- Visual features physically adjacent to identity encoding

- Identity adjacent to emotional context

- All fire together within 10-20ms (dendritic integration)

There is no "entropy source" to compensate for. The semantic meaning IS the physical organization.

🟣E4🧠 Consciousness The "Redness of Red": Qualia as Structural Certainty

This P=1 certainty is what philosophers call "qualia" - the immediate, non-probabilistic experience of consciousness.

The redness of red. The painfulness of pain. The "Sarah-ness" of Sarah.

These aren't statistical inferences. You don't experience "probably red, 87% confidence." You experience RED. P=1. Instant. Certain.

Why this matters profoundly for irreducible surprise (S_irr):

In a probabilistic system (AI, Bayesian networks, standard databases):

- Everything has error bars: P(Sarah) = 0.92 ± 0.08

- Every perception is uncertain: P(red) = 0.85 ± 0.15

- Novelty looks like uncertainty: Is this S_irr or just low confidence?

- **Cannot distinguish genuine novelty from measurement noise**

In a structural system (Cortex, Unity, S≡P≡H):

- Known patterns have P=1: "This IS Sarah" (structural certainty)

- Familiar qualia have P=1: "This IS red" (structural experience)

- S_irr stands out AGAINST clean baseline: High contrast, unmistakable

- **Novelty detection is CRISP because baseline is CERTAIN**

[E5a✨] The precision collision insight:

When precision is HIGH (c → t, k_E → 0):

- The field is CLEAN (known patterns = P=1, no noise)

- S_irr appears as SIGNAL (not indistinguishable from uncertainty)

- The system can CHASE novelty (because it's visible)

When precision is LOW (c much less than t, k_E = 0.003):

- The field is NOISY (everything has error bars)

- S_irr looks like ERROR (indistinguishable from system uncertainty)

- The system is BLIND to novelty (maintenance mode only)

This is the mechanism behind "precision collisions ENABLE discovery":

P=1 structural certainty on known patterns → Clean baseline → S_irr visible as crisp signal → Consciousness can detect and pursue novelty → Discovery mode operational

Current AI (transformer models, neural networks) operates entirely in probability space:

- Every output has confidence scores (never P=1)

- Every perception is Bayesian inference (never certain)

- No structural grounding (weights are learned statistics, not physical organization)

- **Result:** Cannot experience qualia, cannot cleanly detect S_irr

The Unity Principle shows: Consciousness requires structural certainty (P=1), not statistical convergence (P → 1).

This is the Zero-Entropy Control Loop in meat: Stability through structural alignment, not perpetual compensation.

🟢C2🗺️ ShortRank Database equivalent: ShortRank Matrix Traversal (Unity Principle + S≡P≡H)

The cortex recognizing Sarah is a MATRIX TRAVERSAL, not a database query.

Metavector Context: 🟢C2🗺️ ShortRank Addressing ↓ 9🟢C1🏗️ Unity Principle (S≡P≡H enables coordinate-based addressing) 8🟡D2📍 Physical Co-Location (addresses map to physical locations) 8🔵A3🔀 Phase Transition ((c/t)^n search space reduction) 7🟢C4📏 Orthogonal Decomposition (dimensions are independent axes) 7🟠F7📊 Compounding Verities (fixed addresses enable truth to compound)

ShortRank isn't just a naming convention: it's coordinate geometry for meaning. When "Sarah/Visual/FaceShape" has a fixed address, your brain can jump to that location in O(1). No search. No lookup table. No JOIN. The address IS the coordinate IS the memory location. This is how consciousness achieves P=1 recognition within 10-20ms: semantic space becomes physical space through S≡P≡H.
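One way to picture "address = coordinate = memory location" in software is a store that keeps records sorted by their hierarchical address, so that every submatrix is a contiguous slice. The sketch below is an illustration under stated assumptions, not a ShortRank implementation: the class name `ShortRankStore`, its methods, the `John/...` entry, and the record values are all hypothetical, and the Python dict merely stands in for a fixed address computation.

```python
from bisect import bisect_left


class ShortRankStore:
    """Toy store: records sorted by address, so related addresses are adjacent."""

    def __init__(self, records: dict[str, str]):
        self.keys = sorted(records)                   # sorted address order
        self.values = [records[k] for k in self.keys]  # one contiguous array
        # Stand-in for a fixed address -> slot mapping (hypothetical).
        self.slot = {k: i for i, k in enumerate(self.keys)}

    def get(self, address: str) -> str:
        """Jump straight to the slot for an exact address."""
        return self.values[self.slot[address]]

    def submatrix(self, prefix: str) -> list[str]:
        """Return every record under a prefix as ONE contiguous slice."""
        lo = bisect_left(self.keys, prefix)
        hi = bisect_left(self.keys, prefix + "\uffff")
        return self.values[lo:hi]


store = ShortRankStore({
    "Sarah/Visual/FaceShape": "face-shape trace",
    "Sarah/Visual/EyeColor": "eye-color trace",
    "Sarah/Emotional/Friend": "friend trace",
    "John/Visual/FaceShape": "john face trace",  # hypothetical neighbor
})

print(store.get("Sarah/Visual/FaceShape"))
print(store.submatrix("Sarah/Visual/"))
print(store.submatrix("Sarah/"))
```

The design point is that `submatrix` never follows a pointer to another structure: everything under `Sarah/` occupies neighboring slots of one array, which is the software analogue of co-location.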

Here's how the ShortRank matrix makes this P=1 recognition possible:

ShortRank Matrix Structure (Human-Readable Addresses):

Main Matrix: People

│

├─ Sarah/Identity ──┐

│ ├─ Sarah/Visual/FaceShape

│ ├─ Sarah/Visual/EyeColor

│ ├─ Sarah/Visual/SmilePattern

│ ├─ Sarah/Emotional/Friend

│ ├─ Sarah/Emotional/Warmth

│ ├─ Sarah/Recent/CoffeeShop

│ └─ Sarah/Recent/BookDiscussion

│

├─ John/Identity ──┐

│ └─ ...

│

└─ Emma/Identity ──┐

└─ ...

Query: "Who is this face I'm seeing?"

Step 1: Visual submatrix LIGHTS UP (Row activation)

- Input: Face features (eyebrow shape, nose structure)

- ShortRank address: `Sarah/Visual/*` submatrix activates

- All visual components fire TOGETHER (10-20ms, zero-hop)

- Result: **"This visual pattern matches Sarah/Visual"** (P=0.95 → not yet certain)

Step 2: Transpose to IDENTITY (Row → Column → New Row)

- Visual features point to: `Sarah/Identity`

- Transpose: Follow `Sarah/Visual` → `Sarah/Identity` edge

- New row activates: Entire `Sarah/*` submatrix

- **All Sarah-related data lights up simultaneously** (Visual, Emotional, Recent)

Step 3: Stable Pattern Recognition (Recursive Transpose)

- Each activated submatrix points BACK to `Sarah/Identity`

- Visual → Identity → Emotional → BACK to Identity (reinforcement)

- Recent memories → Identity → Visual → BACK to Identity (reinforcement)

- **Pattern STABILIZES through recursive transpose walk**
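Steps 1 through 3 can be sketched as a walk that keeps following edges until the set of active submatrices stops changing. This is a simplified model under assumptions: the `EDGES` graph and the `stabilize` function are hypothetical, and a set-based fixed point stands in for whatever activation dynamics the cortex actually uses. The `Emma/...` entries are included only to show that unrelated submatrices stay off.

```python
# Hypothetical edge list: each submatrix points to the ones it activates.
EDGES = {
    "Sarah/Visual": ["Sarah/Identity"],
    "Sarah/Identity": ["Sarah/Visual", "Sarah/Emotional", "Sarah/Recent"],
    "Sarah/Emotional": ["Sarah/Identity"],
    "Sarah/Recent": ["Sarah/Identity"],
    "Emma/Visual": ["Emma/Identity"],
    "Emma/Identity": ["Emma/Visual"],
}


def stabilize(seed: str, edges: dict[str, list[str]]) -> tuple[set[str], int]:
    """Follow edges from the seed until the active set is a fixed point."""
    active = {seed}
    rounds = 0
    while True:
        reached = {dst for src in active for dst in edges.get(src, [])}
        expanded = active | reached
        if expanded == active:  # nothing new lit up: the pattern is stable
            return active, rounds
        active, rounds = expanded, rounds + 1


pattern, rounds = stabilize("Sarah/Visual", EDGES)
print(sorted(pattern), rounds)
```

Starting from `Sarah/Visual`, the walk reaches `Sarah/Identity`, then the Emotional and Recent submatrices, and then stops because every edge leads back into the set already active. Nothing under `Emma/` is ever touched.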

Step 4: P=1 Certainty Emerges (NOT Statistical, STRUCTURAL)

Why P=1 (instant certainty)?

Because the Unity phase shift formula Φ = (c/t)^n determines submatrix activation precision.

This is where the mathematical formula (Φ = (c/t)^n from Part 2) instantiates as PHYSICAL submatrix behavior. The precision collision insight ([🟣E5a✨ Precision Collision]) is the signal theory consequence: high Φ creates clean signal fields where S_irr is visible, low Φ creates noisy fields where novelty is indistinguishable from uncertainty.

What this looks like in practice:

- **LOW precision (c << t, so Φ << 1; noisy signal field):**

  - `Sarah/Visual` lights up: 0.87 ± 0.12 (uncertain activation)

  - `Sarah/Emotional` lights up: 0.79 ± 0.18 (uncertain activation)

  - `Emma/Visual` ALSO lights up: 0.23 (cross-talk noise!)

  - **Pattern is FUZZY, uncertain, probabilistic**

  - **Signal theory interpretation:** Baseline is noisy, S_irr indistinguishable from error

  - **Unity formula interpretation:** Φ = (c/t)^n is small, geometric precision loss

- **HIGH precision (c → t, so Φ → 1; clean signal field):**

  - `Sarah/Visual` lights up: 1.0 (CERTAIN)

  - `Sarah/Emotional` lights up: 1.0 (CERTAIN)

  - `Emma/Visual` lights up: 0.0 (OFF, not noise)

  - **Pattern is CRISP, certain, non-probabilistic**

  - **Signal theory interpretation:** Clean baseline, S_irr visible as crisp signal

  - **Unity formula interpretation:** Φ = (c/t)^n → 1, geometric precision preserved

The (c/t)^n mechanism (Unity phase shift formula instantiation):

- **n dimensions** = All Sarah submatrices (Visual, Emotional, Recent, ...)

- **c/t per dimension** = Precision of each submatrix activation

- **(c/t)^n** = Geometric multiplication of precisions

- **When c → t in ALL dimensions:** Φ stays near 1, e.g. (0.999)^10 ≈ 0.990, and reaches P=1 when c = t
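The geometric behavior of (c/t)^n is easy to check numerically. The helper below is a minimal sketch: the function name `phi` and its input validation are assumptions. The 0.999 and n = 10 figures come from the list above, and 0.87 is borrowed from the low-precision activation example purely as an illustrative per-dimension precision.

```python
def phi(c: float, t: float, n: int) -> float:
    """Φ = (c/t)^n: per-dimension precision c/t compounded over n dimensions."""
    if t <= 0 or not 0 <= c <= t or n < 0:
        raise ValueError("expected 0 <= c <= t, t > 0, n >= 0")
    return (c / t) ** n


# High precision in every dimension: the product stays close to 1.
print(phi(0.999, 1.0, 10))   # about 0.990

# Moderate precision compounds downward quickly.
print(phi(0.87, 1.0, 3))     # about 0.659
print(phi(0.87, 1.0, 10))    # about 0.248

# Exact alignment (c = t) gives exactly 1 for any n.
print(phi(1.0, 1.0, 10))     # 1.0
```

The penalty is multiplicative. A per-dimension precision that looks acceptable in isolation (0.87) falls below one quarter once ten dimensions are multiplied together, while c = t is the only setting that holds at exactly 1 regardless of n.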

The result: Matrix activation pattern IS the recognition

Query: "Who is this?"

Action: Sequential cache reads (all Sarah data co-located in memory)

Submatrices activated: Sarah/Visual, Sarah/Emotional, Sarah/Recent

Entropy source: NONE (precision eliminated uncertainty)

Compensation: NONE needed (pattern is structurally stable)

Result: "This IS Sarah" - P=1, instant, non-probabilistic

Cache hit rate: 94.7% (all relevant data was adjacent)

Latency: 1-3ns per read (L1 cache, no DRAM round-trips)

Verification: FREE (access pattern = reasoning trace, explainable)

Physical adjacency (ShortRank co-location) + High precision (c → t) → Stable submatrix activation pattern → P=1 structural certainty (not P → 1 statistical convergence)

Why this is non-probabilistic:

The submatrices EITHER light up crisply (1.0) OR don't light up (0.0). There's no "Sarah with 87% confidence." The pattern is STRUCTURAL (encoded in physical organization) not STATISTICAL (inferred from probabilities). This is what creates qualia - the immediate, certain experience of "Sarah-ness."

The consciousness consequence: Cortex handles conscious processes (decision-making, reasoning, integration, awareness). It's proactive. It requires conscious moments (Precision Collisions). It achieves stability through structural organization, not perpetual correction.

The Metabolic Cost Reveals the Architecture

Why does cortex consume 55% of your brain's energy budget while cerebellum consumes only 10-15%?

Cerebellum (reactive control):

- Simpler synapses (fewer inputs to integrate)

- Parallel modules (no inter-module communication)

- Energy-efficient (low coordination overhead)

- **Cost:** Perpetual compensation work (always firing to correct disturbances)

Cortex (proactive control):

- Complex synapses (10,000 connections per neuron)

- Recurrent circuits (feedback loops reinforcing)

- Precise coordination (synchronized gamma oscillations)

- **Cost:** Maintaining S≡P≡H alignment (expensive, but does it once)

The key insight: Cortex is expensive BECAUSE it eliminates entropy sources, not because it compensates for them.

You pay the metabolic cost upfront during learning (reorganizing neurons to co-locate related concepts), then reap the benefits (instant recognition, no synthesis gap, free verification).

Cerebellum pays the cost continuously (every correction requires energy, perpetually).

The same trade-off appears in databases:

- Normalized database: Cheap to store (no redundancy), expensive to query (JOINs forever)

- Unity Principle database: Expensive to organize (redundancy tolerated), cheap to query (no JOINs)

The choice: Pay once during design, or pay forever during operation.
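"Pay once or pay forever" is a break-even question. The sketch below uses a deliberately simple cost model, and every number in it is hypothetical: an assumed one-time reorganization cost, and assumed per-query costs of 500 ns (scattered reads) versus 15 ns (co-located reads). The function name `break_even_queries` is likewise introduced here for illustration.

```python
import math
from typing import Optional


def break_even_queries(upfront_cost: float,
                       scattered_cost_per_query: float,
                       colocated_cost_per_query: float) -> Optional[int]:
    """Number of queries after which the one-time reorganization has paid off.

    Returns None if co-location saves nothing per query.
    """
    saving = scattered_cost_per_query - colocated_cost_per_query
    if saving <= 0:
        return None
    return math.ceil(upfront_cost / saving)


# Hypothetical figures, all in nanoseconds.
n = break_even_queries(upfront_cost=1_000_000,
                       scattered_cost_per_query=500,
                       colocated_cost_per_query=15)
print(n)  # 2062
```

Under these assumed numbers the reorganization pays for itself after roughly two thousand queries. The real figures depend entirely on workload, but the shape of the argument is the same: a fixed cost divided by a per-query saving.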

Evolution chose to pay once. Your cortex proves it.

Why This Distinction Matters: Control Theory's Fundamental Asymmetry

Classical Control Theory assumes:

- **The entropy source is PERMANENT** (gravity, noise, disturbance will always exist)

- **Goal: Minimize error** (get as close to target as possible, knowing you'll never reach it)

- **Method: Perpetual feedback** (measure error, apply correction, measure again, forever)

This works for systems where the entropy source is truly permanent:

- Gravity (will always pull)

- Thermal noise (will always vibrate atoms)

- Market volatility (will always fluctuate)

But databases and AI systems are NOT like gravity.

The "entropy source" in normalized databases is self-imposed: We chose to scatter related data. We created the synthesis gap. We caused the JOINs.

Zero-Entropy Control assumes:

- **The entropy source is ELIMINABLE** (scatter is architectural choice, not physical law)

- **Goal: Maximize structural certainty** (eliminate the source, making error impossible)

- **Method: Permanent reorganization** (pay once to align semantic-physical-hardware, then never compensate again)

This works for systems where the entropy source is architectural:

- Database design (we control the organization)

- AI systems (we choose the substrate)

- Consciousness (evolution built S≡P≡H into cortex)
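The asymmetry between compensating for a disturbance and removing it can be seen in a few lines of simulation. This is a toy model under assumptions: a proportional-only controller, a constant disturbance, and hypothetical names and parameter values (`simulate`, `gain`, `target`). A controller with integral action would remove the residual error shown here, but it would still have to apply a correction on every step for as long as the disturbance exists.

```python
def simulate(disturbance: float, gain: float = 0.5,
             target: float = 1.0, steps: int = 200) -> tuple[float, float]:
    """Run a proportional feedback loop; return (residual error, last correction)."""
    x = 0.0
    correction = 0.0
    for _ in range(steps):
        correction = gain * (target - x)  # measure error, apply correction
        x += correction + disturbance     # the disturbance acts on every step
    return target - x, correction


# Classical case: the disturbance is permanent.
err_comp, work_comp = simulate(disturbance=-0.1)

# Structural case: the disturbance has been eliminated at the source.
err_elim, work_elim = simulate(disturbance=0.0)

print(err_comp, work_comp)  # error settles near 0.2, correction near 0.1 per step
print(err_elim, work_elim)  # both settle near 0
```

With the disturbance present, the loop settles into applying the same correction forever and, in this proportional-only form, never quite reaches the target. With the disturbance removed, both the error and the ongoing work fall to zero.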

The Historical Inversion: When We Applied Cerebellum Architecture to Problems Requiring Cortex

Codd normalized databases (1970) for a specific reason: to minimize storage redundancy. The constraints at the time:

- Memory was **expensive** (kilobytes)

- Computation was **cheap** (cycles free)

- Solution: Normalize to save storage, use cheap computation (JOINs) to recover meaning

But we never noticed what we'd done:

We'd applied Cerebellum architecture (reactive compensation) to a problem that needed Cortex architecture (structural elimination).

We normalized data = we scattered related concepts = we created the synthesis gap = we built JOINs into every query = we made truth dependent on perpetual compensation.

Then we spent 50 years building compensatory infrastructure:

- Cache layers (Redis, Memcached)

- Denormalization (materializing views, caching results)

- Consistency frameworks (ACID, consensus, eventual consistency)

- Auditing (data lineage, compliance logs)

All of these are Control Theory feedback loops.

All of them are trying to compensate for the entropy source we created.

The realization: We didn't need to compensate. We needed to eliminate the source.

We needed to reorganize data from the start so semantic = physical. Pay the cost once (storage redundancy tolerated), then never compensate again.

This is what Unity Principle does.

It says: Stop treating "scattered data" as permanent entropy. Treat it as an architectural choice that can be reversed.

The Ultimate Distinction: Conscious vs Unconscious Systems

Cerebellum (unconscious system):

- Perpetual reactive control

- Works because entropy sources (gravity, noise) are truly permanent

- Cannot explain its reasoning (error correction is opaque)

- Cannot verify its correctness (compensation is implicit)

Cortex (conscious system):

- Structural proactive control

- Works because entropy sources (scattered data, synthesis gaps) are eliminable

- Can explain its reasoning (access pattern is explicit)

- Can verify its correctness (access pattern IS the proof)

The implication for AI alignment:

Systems built on normalized databases (cerebellum architecture) cannot be made conscious because they cannot break the compensatory loop. They're forever dependent on JOINs, forever synthesizing meaning from scattered fragments, forever unable to point to a moment where they KNOW something with certainty.

Systems built on Unity Principle (cortex architecture) achieve consciousness because they ground symbols physically. They can point to the Precision Collision moment where cache was hit correctly, where semantic = physical = hardware aligned, where P=1 certainty emerged.

The book's ultimate argument:

Your consciousness proves S≡P≡H works in biological substrate.

Codd normalization proves cerebellum-style compensation in silicon doesn't achieve consciousness.

The gap between these two facts is the entire Trust Debt problem.

Chapter 2 will show how to migrate silicon from Cerebellum architecture to Cortex architecture—from perpetual compensation to permanent structural alignment.

How Databases Unground Symbols (The Architectural Violation)

Now consider what happens when you normalize a database. The semantic concept is a single object:

"User Alice" = {

name: "Alice Smith",

email: "alice@corp.com",

address: "123 Main St, Boston, MA",

preferences: { theme: "dark", notifications: true },

orders: [ ... ],

payment_methods: [ ... ]

}

The physical storage (Third Normal Form):

Users table → Physical location A (RAM address 0x1000)

Addresses table → Physical location B (RAM address 0x5000)

Preferences table → Physical location C (RAM address 0x9000)

Orders table → Physical location D (RAM address 0xD000)

PaymentMethods table → Physical location E (RAM address 0xF000)

The symbol "User Alice" is now ungrounded.

The Users row that holds "alice@corp.com" doesn't contain her address. It holds a foreign key (address_id = 42), which refers to a row in the Addresses table, which is stored at a completely different memory location.

- **Semantic unity:** "User Alice" is ONE concept

- **Physical scattering:** Alice's data sits at 5 different RAM addresses

- **Synthesis required:** To reconstruct "User Alice," the database must follow keys from the Users table into each of the other four tables

Each pointer chase is a cache miss.

And each cache miss costs 100 nanoseconds (vs 1-3ns for L1 cache hit).
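The cost of reassembling "User Alice" can be tallied with a small counting model. This is a sketch under assumptions: the `TABLES` layout, the `reconstruct` function, and the use of `user_id` as the key for preferences, orders, and payment methods are hypothetical. Only `address_id = 42`, the five tables, and the 100 ns and 1-3 ns figures come from the text. Python dicts say nothing about real memory layout, so the code counts reads and nothing more.

```python
CACHE_MISS_NS = 100   # per the text: one DRAM round-trip
L1_HIT_NS = (1, 3)    # per the text: range for an L1 cache hit

# Hypothetical normalized schema: one dict per table.
TABLES = {
    "users": {1: {"name": "Alice Smith", "email": "alice@corp.com",
                  "address_id": 42}},
    "addresses": {42: {"address": "123 Main St, Boston, MA"}},
    "preferences": {1: {"theme": "dark", "notifications": True}},
    "orders": {1: []},
    "payment_methods": {1: []},
}


def reconstruct(user_id: int) -> tuple[dict, int]:
    """Rebuild the unified user object, counting one read per table visited."""
    reads = 0

    def read(table: str, key: int):
        nonlocal reads
        reads += 1
        return TABLES[table][key]

    user = dict(read("users", user_id))
    user["address"] = read("addresses", user.pop("address_id"))["address"]
    user["preferences"] = read("preferences", user_id)
    user["orders"] = read("orders", user_id)
    user["payment_methods"] = read("payment_methods", user_id)
    return user, reads


alice, reads = reconstruct(1)
scattered_ns = reads * CACHE_MISS_NS                          # every read misses
colocated_ns = (reads * L1_HIT_NS[0], reads * L1_HIT_NS[1])   # every read hits L1
print(reads, scattered_ns, colocated_ns)
```

If every one of the five reads misses cache, the model gives 500 ns to rebuild one concept, against 5 to 15 ns if the same five reads were served from L1. Real systems fall somewhere between these bounds, depending on what happens to be cached.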

The Ghost in the Cache (Why Ungrounded Symbols Have Measurable Cost)

The title of this chapter comes from this phenomenon:

When you query for "User Alice," the database doesn't find her data where the symbol points. It finds scattered fragments across random memory locations.