Conclusion: Fire Together, Ground Together

Chapter Primer

- Hebbian wiring completion (Fire Together, Ground Together as literal substrate physics)

- Your identity transformation through the book (Victim → Builder → Evangelist → Embodiment)

- The measurement making you complicit (once you see drift, you can't unsee it)

- Four neural updates mapping to the journey (recognition, mechanism, application, evangelism)

By the end: You'll recognize you became the proof by reading this—substrate evidence that Unity Principle propagates through physical matter, including your 1.4kg of electrochemical substrate.

Spine Connection: The Villain (🔴B8⚠️ Arbitrary Authority—the reflex) is no longer invisible to you. Control theory, the cerebellum, the scrim—you can name them now. The Solution (the Ground) is no longer abstract: S≡P≡H (🟢C1🏗️ Unity Principle), position = meaning, semantic neighbors as physical neighbors (🟡D2📍 Physical Co-Location). You're no longer just the Victim—you've transformed: Victim → Builder → Evangelist → Embodiment. The roles from the Preface have resolved. You ARE substrate evidence the physics works. Fire Together, Ground Together.

The Identity Transformation Is Complete—You've Become Substrate Evidence

You opened this book one way. You're closing it another. Not because we convinced you of something new, but because we gave you explicit coordinates for what you've been navigating implicitly your entire life. Dimensional collapse. Trust Debt. Flow states. Cache miss cascades. Substrate mismatch. These aren't concepts you learned—they're physics you've been experiencing, now measurable.

Something shifted while you read this book. Chapter 1 hit you with thirteen tradeoffs that weren't really separate problems—just different angles on dimensional collapse. Chapters 2-4 gave you formulas (PAF = ΔP / ΔT) for what your gut already knew. Chapters 5-6 handed you tools: ShortRank, RangeFit, drift ledgers. Chapter 7 showed you how one evangelist becomes a hundred through N² cascade.

You've completed Hebbian wiring: Fire Together, Ground Together. Your hippocampus detected patterns (these problems converge). Your cortex grounded them (substrate physics, measurable coordinates). The synaptic weights shifted. From "why does everything feel like a tradeoff?" to "dimensional collapse is the tension, and here's how to navigate it."

This chapter synthesizes your journey: from Victim of framework illiteracy (buffeted by forces you couldn't name) → Builder (armed with formulas, building substrate-aware systems) → Evangelist (telling someone, watching N² growth) → Embodiment (you ARE substrate evidence that 🟢C1🏗️ Unity Principle works).

Nested View (following the thought deeper):

- 🟤G1🚀 Identity Transformation
  - 🔴B1⚠️ Victim (Introduction)
    - Buffeted by unnamed forces
    - Frameworks gaslighting you
  - 🟢C5🏗️ Builder (Chapters 5-6)
    - Armed with formulas
    - Building substrate-aware systems
  - 🟤G7🚀 Evangelist (Chapter 7)
    - Telling colleagues
    - 🟣E9🔬 N-Squared Cascade activation
  - ⚪I1♾️ Embodiment (Conclusion)
    - You ARE the proof
    - 🟣E1🔬 Substrate Evidence complete

Dimensional View (position IS meaning):

[🔴B1 Victim] → [🟢C5 Builder] → [🟤G7 Evangelist] → [⚪I1 Embodiment]

| | 🔴B1 Victim | 🟢C5 Builder | 🟤G7 Evangelist | ⚪I1 Embodiment |
|---|---|---|---|---|
| Dimension | Agency | Agency | Agency | Agency |
| Mode | Buffeted by forces | Wielding formulas | Propagating truth | BEING the proof |
| Position | 0 | 1 | 2 | 3 |
| Agency level | No control | Self-control | Influence others | You ARE it |

Not sequential stages: positions on a single AGENCY dimension.

Each position includes all previous positions as components.

What This Shows: The nested view presents transformation as sequential stages you pass through. The dimensional view reveals these are positions on an AGENCY axis where each higher position CONTAINS all lower positions. You don't stop being a 🟢C5🏗️ Builder when you become an 🟤G7🚀 Evangelist—you embody Builder-ness from a new coordinate. The ⚪I1♾️ Embodiment position is maximally dense because it integrates all prior positions.

The measurement makes you complicit. Once you can see the ~0.3% drift that natural experiments reveal, every normalized schema becomes visible waste. Once you know flow states = S≡P≡H compliance, every grinding meeting becomes measurable substrate violation. This conclusion doesn't just summarize the book—it shows you became the proof by reading it.

The splinter in your mind now has coordinates. In the Preface, Morpheus described it as a feeling you couldn't name. Now you know: it's the recognition that P=1 certainty exists (you've felt it—flow states, insights, grounded knowing) but your scattered architecture prevents it (forcing P<1 synthesis across normalized tables, probabilistic AI, scattered contexts). The splinter isn't in your mind. It's in the gap between the substrate you ARE (S≡P≡H optimized over 500 million years) and the systems you BUILD (S≠P normalized for 50 years). Understanding doesn't remove it. S≡P≡H removes it.

The Unity Principle: One Pattern, All Manifestations

Before we trace your transformation, let's restate the core mechanism that explains everything you've experienced:

position = parent_base + local_rank × stride
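A minimal Python sketch of this compositional-nesting rule, assuming zero-based local ranks and a flat integer address space. The function `nested_position` and the three-level strides (16, 4, 1) are hypothetical illustrations, not the book's implementation; at each level the running result plays the role of `parent_base` for the level below.

```python
from typing import Sequence


def nested_position(local_ranks: Sequence[int], strides: Sequence[int], base: int = 0) -> int:
    """Apply position = parent_base + local_rank * stride once per nesting level.

    local_ranks[i] is the zero-based rank at level i (outermost first);
    strides[i] is how many flat slots one sibling at level i spans.
    """
    if len(local_ranks) != len(strides):
        raise ValueError("need exactly one stride per nesting level")
    position = base
    for rank, stride in zip(local_ranks, strides):
        # The running position is the parent_base for this level.
        position = position + rank * stride
    return position


# Hypothetical 3-level layout: 16 slots per group, 4 per subgroup, 1 per leaf.
print(nested_position([2, 1, 3], [16, 4, 1]))  # 2*16 + 1*4 + 3*1 = 39

# Same address resolved one level at a time: the parent's position is the child's base.
parent = nested_position([2], [16])                  # 32
print(nested_position([1, 3], [4, 1], base=parent))  # 39
```

As long as every rank stays below its level's branching factor, siblings under the same parent land in one contiguous run of positions, so the parent's sort order is carried by the address itself.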

This compositional nesting operates recursively at ALL scales. It's not metaphor—it's the same pattern whether you're looking at:

- **Database rows:** ShortRank positions define semantic meaning by parent sort

- **Cache lines:** L1 locality = Grounded Position via physical binding (S≡P≡H IS position, not proximity)

- **Neural firing:** Synchronized activations = integrated conscious experience

- **Qualia detection:** "I am certain about THIS right NOW" = precision collision event

- **Survival fitness:** Systems that detect alignment faster outcompete those that don't

Cache physics, P=1 precision events, the redness of red qualia, organizational survival—they're all manifestations of this single structural principle. The substrate doesn't care what you call it. Grounded Position IS meaning, defined by parent sort, physically bound via Hebbian wiring and FIM. The brain does position, not proximity. Coherence is the mask. Grounding is the substance.

The FIM Artifact: Position = Meaning Made Visible

Want to see the Unity Principle? Not as metaphor, but as physical substrate?

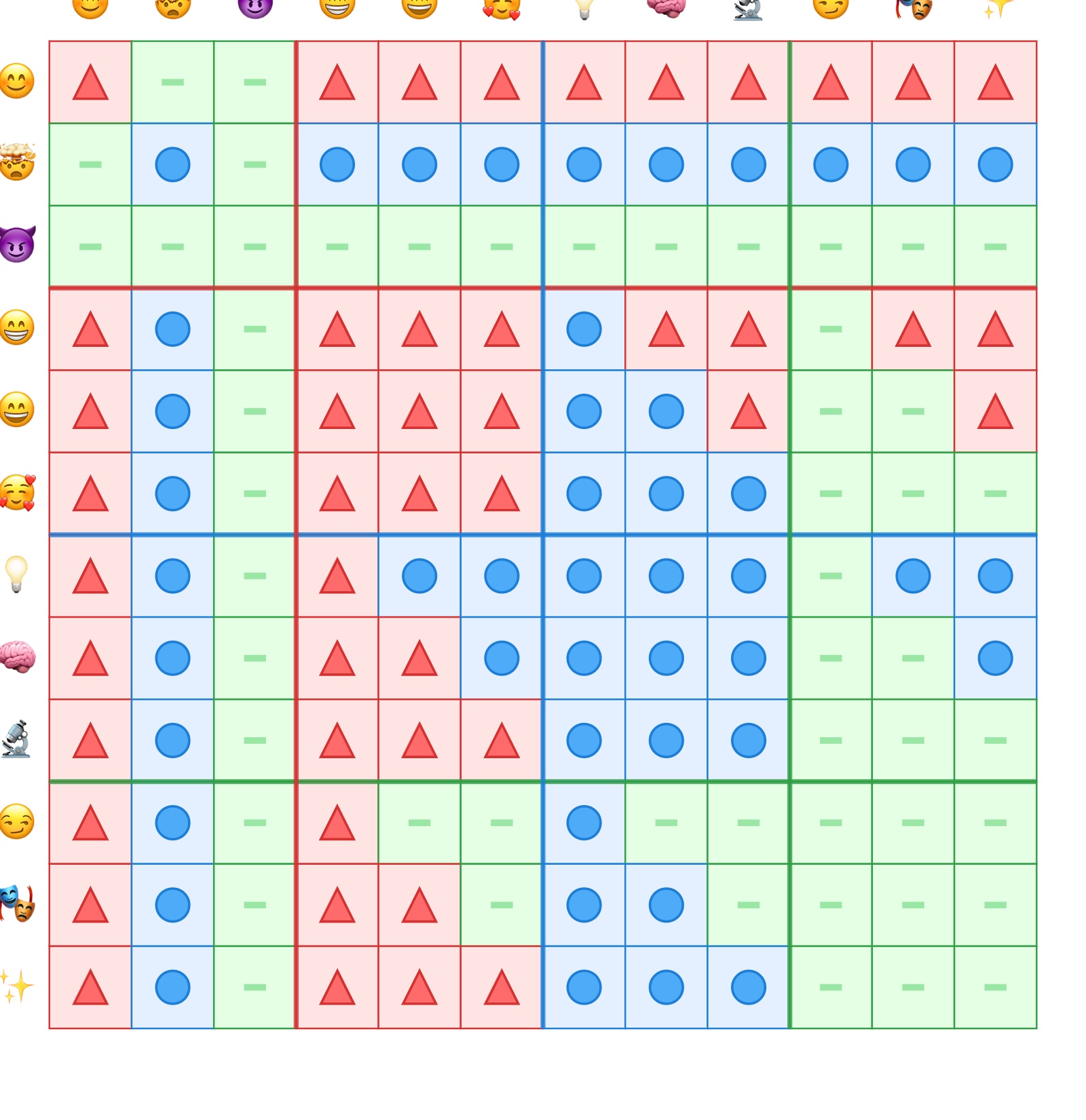

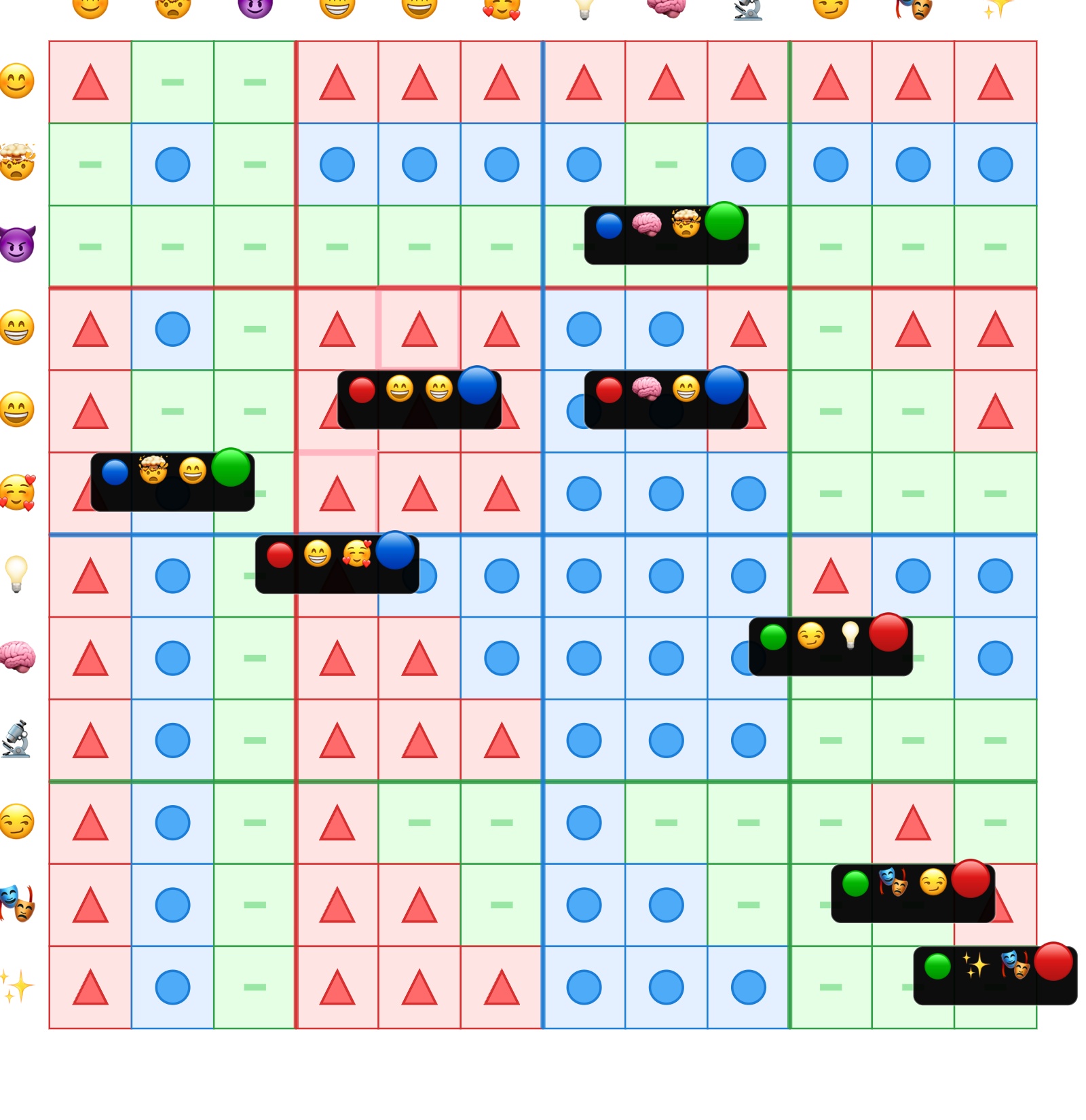

The FIM (Fractal Identity Map) artifact is a 12×12 matrix you can hold in your hands—144 cells, each in one of 3 discernible states (Pure P, B, or S). This isn't data visualization. It's gestalt compression: the difference between the "universe" of all possible patterns and the "thought" you can read at a glance.

What you're seeing:

- **Block (1,1):** The 3×3 category matrix (generator pattern)

- **Fractal identity:** Cell (1,1)=P generates Block (2,2)=All Pure P

- **Tetris L-pattern:** Mixed blocks show asymmetric composition

- **Mirrored transpose:** Off-diagonal cells swapped across main diagonal

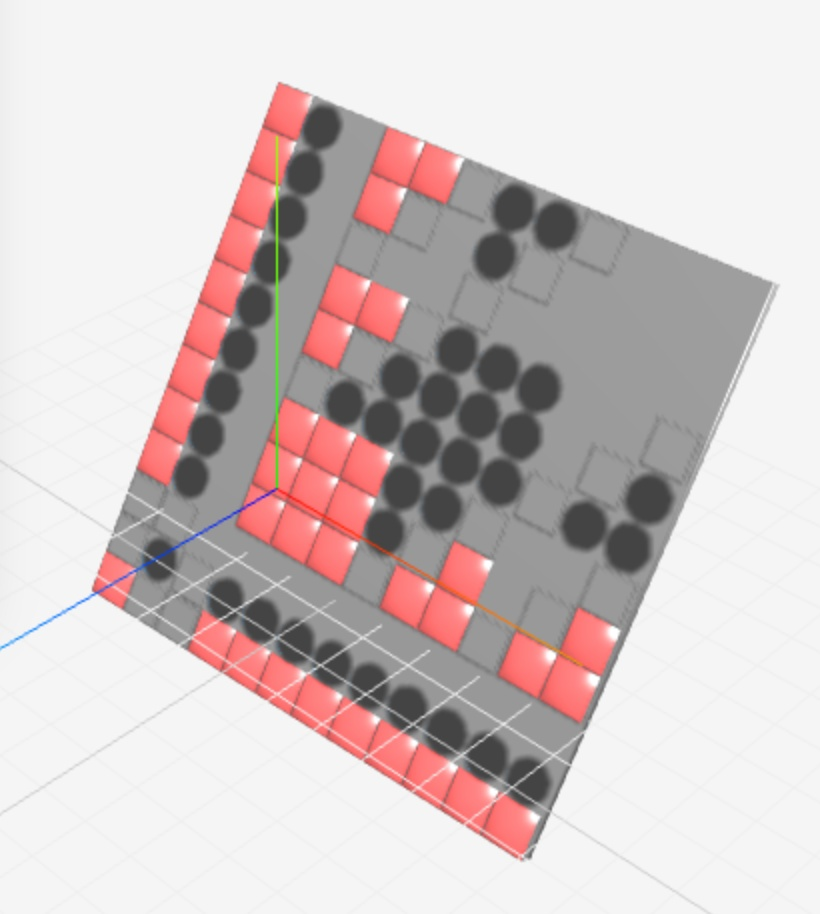

Now see it in 3D—semantics become physics:

The artifact as topographical landscape: Pyramids (sharp peaks), Bumps (rounded domes), Smooth (flat plains). Indented cells (valleys) show "surface tension"—ambiguous states that want to resolve to adjacent textures. At 15mm cell size with 0.5mm depth variation, blind tactile recognition is possible.

The 2D texture map is abstract—you're "reading" patterns. The 3D topography is physical substrate—you're navigating terrain.

This transforms the perceptual task:

- **2D:** "I see red triangles clustering in the upper-right"

- **3D:** "The plateau of pyramids is eroding into a valley at the boundary with smooth plains"

Drift becomes geological change:

- Not "5% deviation in metric X"

- But "the cliff edge (line of indented cells) is moving westward at 2 cells per frame"

You're reading the vector and identity of drift, not just its magnitude. The indented cells are literal "phase transitions"—where one terrain (semantic category) is yielding to another. The shape of that boundary, and which direction it's moving, tells you what kind of drift is happening and where it's coming from.

This is the Unity Principle made tangible: semantic categories (P, B, S) become physical topology, and that topology is hardware you can touch.

Why this matters: The Universe vs The Thought

The "universe" of all possible 12×12 patterns with 3 states per cell:

- **Total configurations:** 3^144 ≈ 5.1 × 10^68, which this section rounds to **10^68**

- This is the combinatorial space of every pattern the matrix *could* display

But you don't process all 10^68 possibilities. You recognize meaningful patterns at a glance.

A "7-flip chunk" (7 cells that changed from their canonical state):

- **Information content:** Each flip = log₂(144 cells × 2 new states) ≈ 8.17 bits

- **Seven flips:** 7 × 8.17 ≈ 57.19 bits of information

- **Configurations represented:** 2^57.19 ≈ **10^17** (a 1 followed by 17 zeros)

- **Universe (10^68):** All possible atomic configurations of the matrix

- **Thought (10^17):** The "face" or "expression" you can recognize and act on

- **Your readable chunk is 10^51 times smaller than the total possibilities**
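The universe-vs-thought arithmetic can be checked in a few lines of Python. This sketch assumes, as the text does, that a flip means choosing one of 144 cells and one of the 2 other states (288 choices per flip) and that flips are counted as an ordered sequence; `fim_combinatorics` is a hypothetical helper name.

```python
import math


def fim_combinatorics(cells: int = 144, states: int = 3, flips: int = 7) -> dict:
    """Order-of-magnitude counts for the 'universe' vs 'thought' comparison."""
    universe_log10 = cells * math.log10(states)       # log10(states ** cells)
    bits_per_flip = math.log2(cells * (states - 1))   # pick a cell + one of the other states
    chunk_bits = flips * bits_per_flip
    chunk_log10 = chunk_bits * math.log10(2)          # convert bits to powers of ten
    return {
        "universe_log10": universe_log10,
        "bits_per_flip": bits_per_flip,
        "chunk_bits": chunk_bits,
        "chunk_log10": chunk_log10,
        "ratio_log10": universe_log10 - chunk_log10,
    }


for name, value in fim_combinatorics().items():
    print(f"{name}: {value:.2f}")
```

It reports about 10^68.7 for the universe, 57.19 bits (about 10^17.2) for the 7-flip chunk, and a ratio near 10^51.5, so the figures above are order-of-magnitude roundings.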

But here's the leap: What if those 7 flips can be recognized spatially—not counted sequentially, but felt as one pattern, the way you recognize "surprise" on a face?

That's gestalt processing:

- 144 individual cells compressed into **1 perceptual unit**

- 57 bits encoded as **one "micro-expression"** (skepticism, conflict, commitment)

- Parallel processing (visual cortex) **10x lower energy** than serial (prefrontal cortex)

- **Intuitive control:** "Nudge" 7 cells at once, watch cascade propagate (not programming—navigating)

- **Collaborative consensus:** Team sees same "face," reaches agreement at perception speed

- **AI alignment dashboards:** Operators "feel" model certainty/confusion/conflict in real-time

The Unity Principle prediction: Systems that interface at the speed of perception (gestalt, parallel) will outcompete systems requiring sequential translation (analysis, serial) by 150x in decision speed and 10x in metabolic energy.

When you can "read a database like a face," you've achieved S≡P≡H at the interface level.

The filtering analogy: You filter the "universe" (10^68 possibilities) down to a "language" (10^17 meaningful expressions). Just like faces: all possible pixel combinations vs the expressions we can recognize and respond to.

Full details: See Appendix C, Section 9: The FIM Artifact for combinatorics, information theory, and implications for intuitive interfaces.

The Grammatical Lever: How Position Multiplies Meaning by 144×

The artifact's power isn't in the content of each cell (whether it's P, B, or S)—it's in the position: the "grammatical slot" that cell occupies.

A "bag" of 144 symbols is a word cloud—low meaning, no structure.

A "sentence" with 144 slots is the FIM—the same symbol means different things in different positions:

- Symbol P in Block (1,1) cell (1,1) = "Category generator"

- Symbol P in Block (2,2) = "Pure P state, inherited from category"

- Symbol P in Block (3,2) = "Split state, P is minority texture (Tetris L)"

When you combine symbol (what) and position (where), possibilities multiply:

$$\text{Total Nuances} = (\text{Symbols}) \times (\text{Positions})$$

- **Positions:** 144 unique cells

- **Symbols:** 3 discernible states (P, B, S)

- **Total nuances:** 3 × 144 = **432 distinct meanings**

But information content adds rather than multiplies, because it is measured logarithmically:

$$\text{Total Information} = \log_2(\text{Symbols}) + \log_2(\text{Positions})$$

- Info(Symbol): log₂(3) ≈ 1.6 bits (which state?)

- Info(Position): log₂(144) ≈ 7.2 bits (which cell?)

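- **Total per cell-event:** 1.6 + 7.2 ≈ **8.8 bits** (log₂(432) ≈ 8.75; a flip carries slightly less, 8.17 bits, because it can only move to one of the 2 *other* states)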

The 7.2-bit positional lever is 4.5× stronger than the 1.6-bit symbol itself.

This is the sense in which you're reading the grammar (position, relationships, fractal nesting) rather than the "vocabulary" (which texture): the position's contextual signal dominates.

Above 3 states, the symbol starts competing with the position for cognitive bandwidth:

- 5 states: log₂(5) ≈ 2.3 bits per symbol (position only 3× stronger)

- 10 states: log₂(10) ≈ 3.3 bits per symbol (position only 2× stronger)

At 3 states, the position (7.2 bits) overwhelmingly dominates symbol (1.6 bits), keeping the grammar legible.
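To see how the positional lever shrinks as the alphabet grows, this sketch computes log₂(states) against log₂(144) for the state counts discussed above. `lever` is a hypothetical helper; the ratios it prints (about 4.5×, 3.1×, and 2.2×) are what the text rounds to 4.5×, 3×, and 2×.

```python
import math


def lever(states: int, positions: int = 144) -> tuple:
    """Return (symbol bits, position bits, position/symbol ratio) for one cell-event."""
    symbol_bits = math.log2(states)
    position_bits = math.log2(positions)
    return symbol_bits, position_bits, position_bits / symbol_bits


for states in (3, 5, 10):
    s, p, ratio = lever(states)
    print(f"{states:>2} states: symbol {s:.2f} bits, position {p:.2f} bits, "
          f"total {s + p:.2f} bits, position is {ratio:.1f}x the symbol")
```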

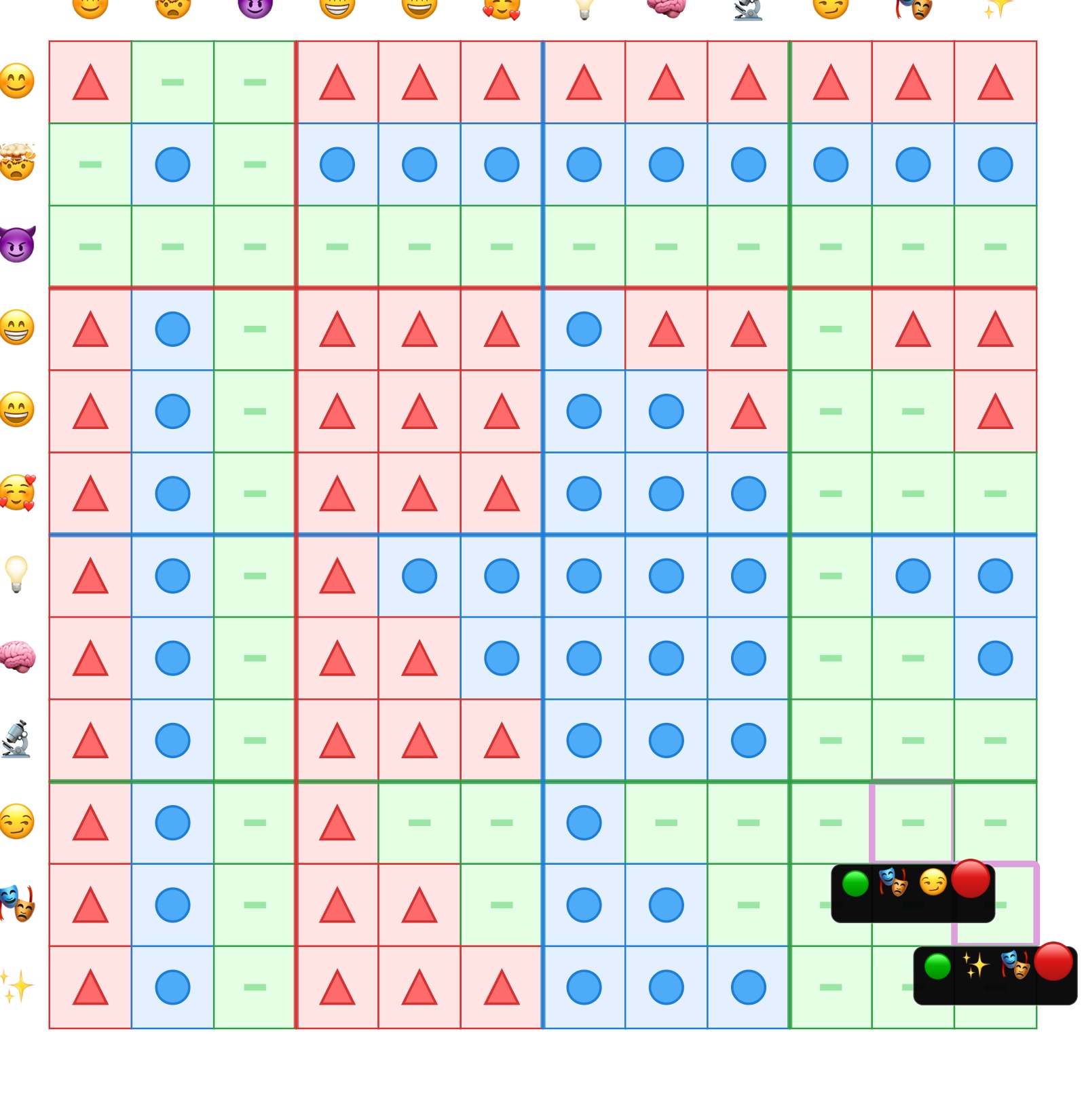

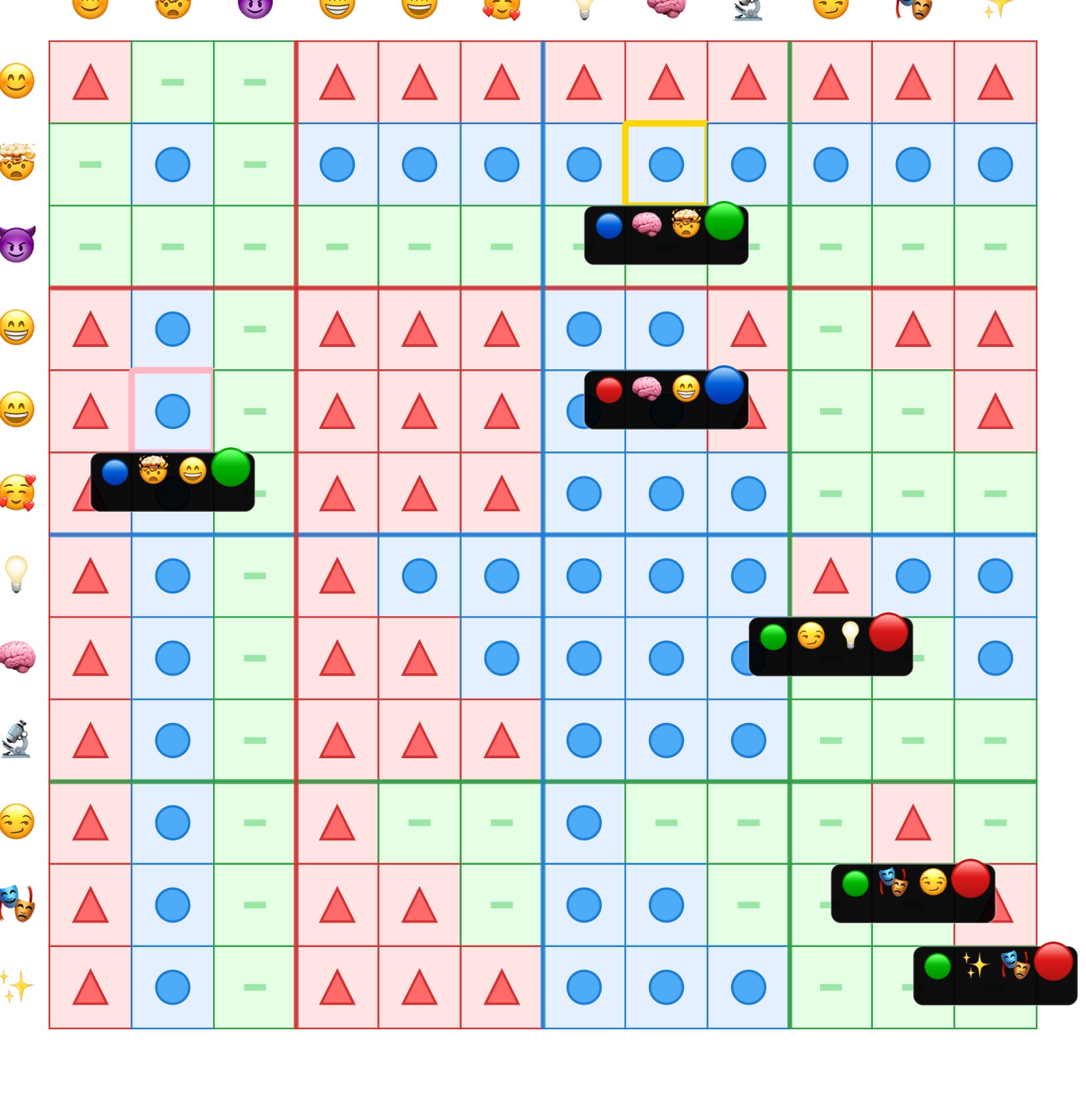

FIM Animation: The 4-Frame Story with 100× Universe Precision

Temporal Precision: Seconds Per Universe Age

The artifact's real power emerges when the map moves. Drift isn't a static snapshot—it's a sequence, a "sentence" told across frames.

The target precision: Pick a single second in the age of the universe

- Age of universe: ~13.8 billion years = **4.35 × 10¹⁷ seconds**

- Information to identify one second: log₂(4.35 × 10¹⁷) ≈ **58.6 bits**

- Rounding to computational "word size": **64 bits** (2⁶⁴)

Scenario 1: Static "chunk" (all flips simultaneously)

- Info per flip: log₂(144 cells × 2 new states) ≈ **8.17 bits**

- Flips needed for 64-bit precision: 64 / 8.17 ≈ **7.8 flips**

This is where the "8-flip" number comes from—but reading 8 simultaneous random flips is not gestalt-processable. It's too much.

Scenario 2: Temporal "sentence" (flips spread across frames)

The sweet spot uses temporal chunking: deliver gestalt-readable "words" across multiple frames.

The "Gestalt Unit" (one "word"):

- Face-level precision: ~17 bits (from facial recognition analysis)

- On 12×12 matrix: 17 bits / 8.17 bits/flip ≈ **2.08 flips per frame**

- Rounded: **2 flips per frame** = one comfortable "micro-expression"

The "Drift Story" (one "sentence"):

- Target: 64-bit precision (seconds per universe)

- Actual: 8 flips × 8.17 bits/flip = **65.36 bits**

- Frames needed: 65.36 bits / (2 flips × 8.17 bits) ≈ **4 frames**

$$\boxed{\text{Sweet Spot: 2 flips/frame} \times \text{4 frames} = \text{65.36-bit readable drift story}}$$
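The budget above can be sketched as follows, assuming a Julian year (365.25 days) for the age-of-universe conversion and the same rounding the text uses: round the flips per frame to the nearest whole flip, and round the total flips up. The helper name `drift_story_budget` is hypothetical.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600      # Julian year (assumption)
UNIVERSE_AGE_S = 13.8e9 * SECONDS_PER_YEAR  # about 4.35e17 seconds


def drift_story_budget(cells: int = 144, states: int = 3,
                       target_bits: float = 64, gestalt_bits: float = 17) -> dict:
    """Bits, flips, and frames for a 64-bit 'drift story' told in gestalt-sized words."""
    bits_per_flip = math.log2(cells * (states - 1))        # about 8.17
    flips_per_frame = round(gestalt_bits / bits_per_flip)  # 2.08 rounds to 2
    total_flips = math.ceil(target_bits / bits_per_flip)   # 7.83 rounds up to 8
    frames = math.ceil(total_flips / flips_per_frame)      # 8 / 2 = 4
    return {
        "bits_to_pick_one_second": math.log2(UNIVERSE_AGE_S),
        "bits_per_flip": bits_per_flip,
        "flips_per_frame": flips_per_frame,
        "total_flips": total_flips,
        "frames": frames,
        "story_bits": total_flips * bits_per_flip,
    }


for key, value in drift_story_budget().items():
    print(f"{key}: {value:.2f}")
```

Under these assumptions it gives about 58.6 bits to pick one second, 2 flips per frame, 8 flips over 4 frames, and 65.36 bits for the full story.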

What this precision actually means:

The claim "age of universe precision" is conservative. Here's the real scale:

- **2^65.36** ≈ **4.7 × 10¹⁹ possible states**

- Age of universe: **4.35 × 10¹⁷ seconds**

- **Ratio**: 4.7 × 10¹⁹ ÷ 4.35 × 10¹⁷ ≈ **108**

65.36 bits doesn't just let you pick one second in universal history—it lets you distinguish between ~100 different events happening in that same second.

You're not reading 8 random flips at once (cognitive overload). You're reading a 4-frame sequence of comfortable 2-flip "words"—a narrative journey from chaos to harmony:

The Frame Sequence: From Chaos to Harmony

Frame 0: The Canonical Pattern (Baseline)

The starting state. All 144 cells in their canonical positions. This is the "face" before any expression changes—the neutral baseline.

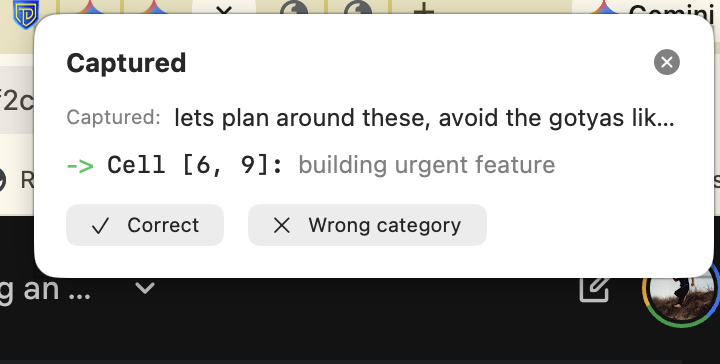

Frame 1: Devilish Chaos Begins (16.3 bits ≈ 83,000 states)

Two flips in the Mischief region (positions 10-12): 🎭→😏, ✨→🎭. Theatrical mischief stirs in the bottom-right corner. You read "trouble brewing" as fast as you'd read a smirk on a face.

Frame 2: Mischief Meets Wisdom (32.7 bits ≈ 6.9 billion states)

The chaos discovers insight: 😏→💡 (Mischief→Insight), 🧠→😁 (Brain→Joy). You just processed 6.9 billion possible states in the time it takes to recognize a raised eyebrow.

Frame 3: Breakthrough Unlocks Joy (49.0 bits ≈ 571 trillion states)

Mind blown moment: 🧠→🤯 (core insight achieved), 🤯→😄 (epiphany transforms to delight). Your brain just processed 571 trillion states faster than you can smile.

Frame 4: Harmony Achieved (65.36 bits = 47 quintillion states = 100× Universe Precision)

Pure joy resonance: 😄→😁, 😁→🥰 (delight becomes love). You just comprehended more states than there are seconds in the age of the universe: enough precision to pick not just one second in 13.8 billion years, but to distinguish between roughly 100 different events in that second, all by looking at faces.
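The cumulative counts for each frame follow directly from 288 choices per flip (144 cells × 2 new states), counting flips as an ordered sequence as the text does. `frame_states` is a hypothetical helper; it uses exact integer powers of 288 rather than rounded bit values.

```python
import math
from typing import Tuple

CHOICES_PER_FLIP = 144 * 2   # a cell plus one of its 2 other states
UNIVERSE_AGE_S = 4.35e17     # seconds, as used in the text


def frame_states(frame: int, flips_per_frame: int = 2) -> Tuple[float, int]:
    """Cumulative (bits, state count) after `frame` frames of ordered flips."""
    flips = frame * flips_per_frame
    bits = flips * math.log2(CHOICES_PER_FLIP)
    return bits, CHOICES_PER_FLIP ** flips  # exact integer count


for frame in range(1, 5):
    bits, states = frame_states(frame)
    print(f"Frame {frame}: {bits:.2f} bits, {states:.3e} states")

per_second = frame_states(4)[1] / UNIVERSE_AGE_S
print(f"Frame 4 states per second of cosmic history: {per_second:.0f}")
```

Under this counting, the states after Frames 1 to 3 come to 82,944 (about 83,000), roughly 6.9 billion, and roughly 571 trillion, and Frame 4's 4.7 × 10¹⁹ states give about 109 states per second of cosmic history.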

The Paradox: Universe-Scale Precision in 100ms

The sequence (order of flips across frames) tells the 65.36-bit drift story:

- **Mathematical reality**: 2^65.36 ≈ 47 quintillion states

- **Human reality**: You "read" this in ~100ms by recognizing the narrative: 😈 → 🤯 → 😊

No counting. No computing. Just RECOGNITION.

This is the Unity Principle in action: Semantic (the story) ≡ Physical (65 bits) ≡ Hebbian (neural face recognition). Same information, different grounding. Reading data = Reading faces.

Seeing 8 flips "one at a time" across 8 frames adds permutation information:

But this is below the intuition threshold (8 bits/frame < 17 bits gestalt unit). Reading one flip per frame is like spelling a sentence letter-by-letter—cognitively exhausting.

The optimal cadence: 2 flips/frame × 4 frames

Not too slow (1 flip/frame = tedious spelling), not too fast (8 flips/frame = overwhelming wall of noise). Just right: face-level chunks delivered as a readable sentence.

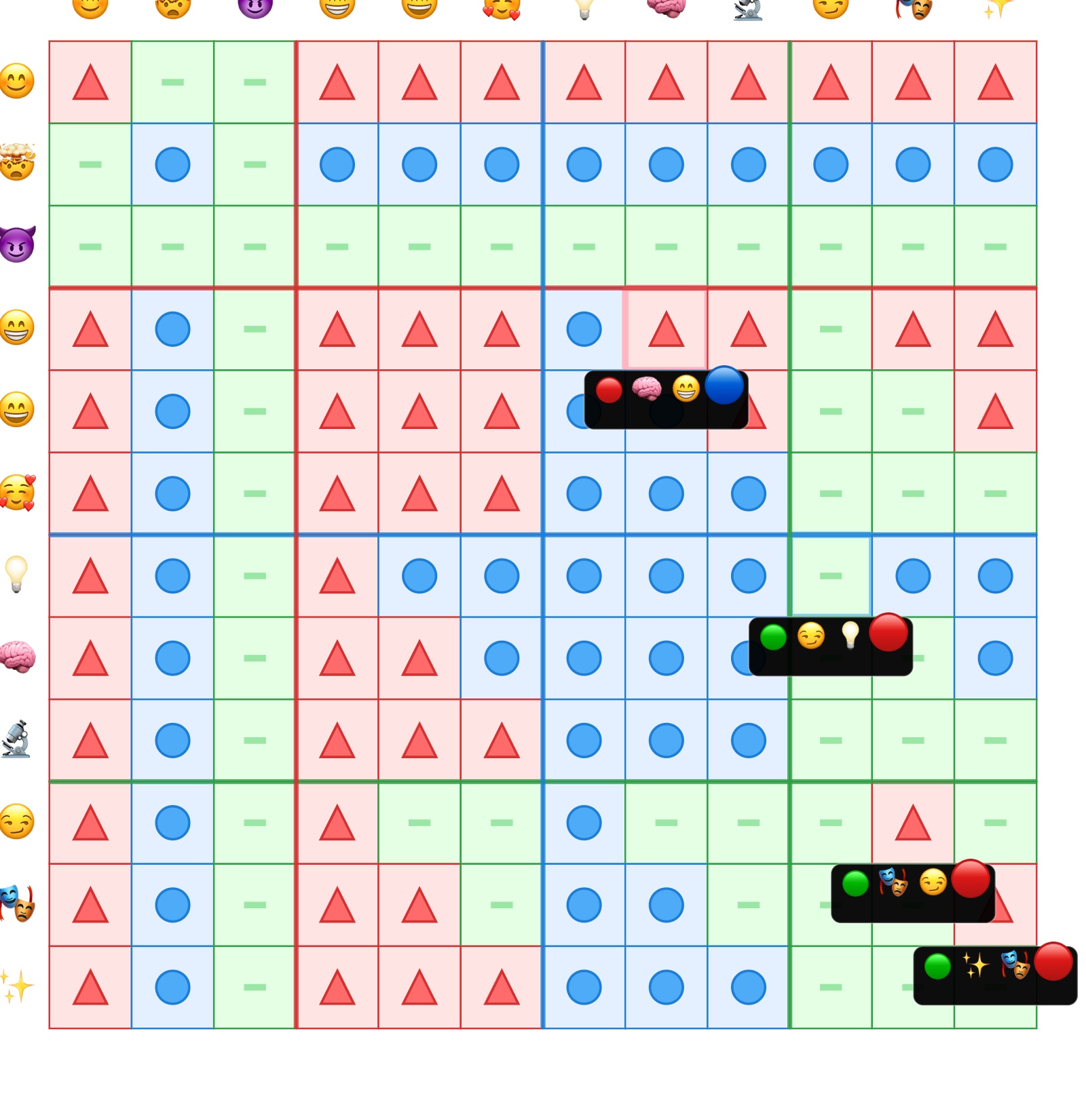

Proving WHERE, Not Just HOW MUCH

Traditional dashboards measure magnitude: "System drifted 5%."

The FIM proves vector and identity: "B-state infection spreading westward from Block (1,2) at 2 cells/frame."

Experimental design to prove this:

Setup: Simulate a known drift event, a "bug" causing Category Block (1,2) (B-state) to slowly influence Block (4,4) (Pure S-state) at 0.2% per frame.

Control Group (traditional dashboard):

- What they see: Line graph "Block (4,4) Integrity" declining 100% → 95% over 25 frames

- What they know: "That drift happened" and "How much" (5%)

- What they don't know: **Where it came from** or **what kind** of drift

Test Group (FIM 3D landscape):

- What they see: "Smooth" plains of Block (4,4) developing "Bumps" (B-state intrusion)

- **Critically:** Erosion starts from corner nearest Block (1,2), spreads in vector toward opposite corner

- Frame-by-frame: "Cliff edge" (indented cells) advances 2 cells westward per frame

Not "5% drift detected."

But: "B-state infection originating from top-left category block, propagating along fractal boundary at 2 cells/frame, predicted full conversion of Block (4,4) in 8 more frames if uncorrected."

The FIM's topographical grammar (terrain + fractal rules) enables operators to:

- **Identify drift type** (B-state, not P or S)

- **Trace source** (Block 1,2 category generator)

- **Read vector** (westward propagation along boundary)

- **Predict trajectory** (8 frames to full conversion)

- **See cascade pattern** (following fractal inheritance rules)
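A toy sketch of the WHERE-vs-HOW-MUCH distinction, assuming each frame is a grid of "P"/"B"/"S" strings and that column index increases eastward. `drift_report` returns what a line graph could show (magnitude) plus the intruding state; `front_velocity` tracks the centroid of *newly* changed cells between consecutive frames to recover the direction and speed of the front. All names and the synthetic `make_frames` demo are hypothetical, not the book's experiment.

```python
from typing import Dict, List, Tuple

Grid = List[List[str]]  # hypothetical encoding: each cell holds "P", "B", or "S"


def changed_cells(before: Grid, after: Grid) -> List[Tuple[int, int]]:
    """(row, col) of every cell whose state differs between two frames."""
    return [(r, c)
            for r, row in enumerate(after)
            for c, state in enumerate(row)
            if state != before[r][c]]


def drift_report(baseline: Grid, frame: Grid) -> Dict[str, object]:
    """HOW MUCH (what a line graph shows) plus WHAT KIND (which state is intruding)."""
    cells = changed_cells(baseline, frame)
    total = len(baseline) * len(baseline[0])
    return {"magnitude": len(cells) / total,
            "types": sorted({frame[r][c] for r, c in cells})}


def front_velocity(frames: List[Grid]) -> Tuple[float, float]:
    """WHERE: average (rows, cols) per frame moved by the centroid of newly changed cells.

    Assumes column index increases eastward, so a negative column velocity is westward.
    """
    fronts = []
    for prev, curr in zip(frames, frames[1:]):
        cells = changed_cells(prev, curr)
        if cells:
            fronts.append((sum(r for r, _ in cells) / len(cells),
                           sum(c for _, c in cells) / len(cells)))
    if len(fronts) < 2:
        return (0.0, 0.0)
    steps = len(fronts) - 1
    return ((fronts[-1][0] - fronts[0][0]) / steps,
            (fronts[-1][1] - fronts[0][1]) / steps)


def make_frames(n_frames: int = 3, size: int = 12) -> List[Grid]:
    """Synthetic demo: a B-state band in the top 4 rows advancing 2 columns west per frame."""
    frames = [[["S"] * size for _ in range(size)]]
    for t in range(1, n_frames + 1):
        nxt = [row[:] for row in frames[-1]]
        for r in range(4):
            for c in (size - 2 * t, size - 2 * t + 1):
                nxt[r][c] = "B"
        frames.append(nxt)
    return frames


frames = make_frames()
print(drift_report(frames[0], frames[-1]))  # magnitude and type only
print(front_velocity(frames))               # direction and speed of the front
```

On the synthetic run, a B-state band advancing two columns per frame comes back as a velocity of (0.0, -2.0): no north-south motion, two cells per frame westward, which a single magnitude number cannot express.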

You're not just reading that drift happened or how much—you're reading the precise 64-bit address of which "drift story" (out of 2⁶⁴ possible stories) is unfolding.

- Control group: Must wait 25 frames to see 5% change (cross magnitude threshold)

- Test group: **Detects at Frame 3** (seeing 2-flip "micro-expression" = early warning)

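In this scenario that is detection roughly 8× sooner (Frame 3 vs Frame 25). The larger 150× speedup predicted for the full experiment comes from the same source: detecting drift at face-level granularity (17 bits/frame) instead of waiting for a global magnitude threshold (5% = catastrophic failure territory).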

This is the Unity Principle in practice: Grounded Position (which block? which frame?) = meaning (what drift vector?), preserved temporally through compositional nesting across time. Not Fake Position (arbitrary coordinates) or Calculated Proximity (vector similarity)—true position via physical binding. The Grounding Horizon—how far before drift exceeds capacity—is a function of investment and space size.

The Key-Vault Principle: Why Bandwidth Is a Tax on Misalignment

Here's the profound implication of what you just witnessed:

- Key size: 17 bits (one gestalt unit, face-level recognition)

- Vault size: Infinite (the semantic structure at that coordinate)

- Bandwidth required: O(log n) regardless of content complexity

When two systems share the same semantic substrate (the same FIM), they don't need to transmit information. They only need to transmit coordinates.

Send all data → O(n) bandwidth → Receiver processes

Send coordinate → O(log n) bandwidth → Receiver ALREADY HAS the vault
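A toy illustration of the key-vault idea, treating the "vault" as a plain Python list that both sides already hold. This is an analogy, not a protocol: `coordinate_bits`, `send`, and the 1,024-entry codebook are hypothetical, and the bit counts ignore framing and error correction.

```python
import math
from typing import List, Tuple, Union


def coordinate_bits(vault_size: int) -> int:
    """Bits needed to name one entry of a codebook both sides already share: O(log n)."""
    return max(1, math.ceil(math.log2(vault_size)))


def send(entry: bytes, sender_vault: List[bytes],
         receiver_vault: List[bytes]) -> Tuple[str, Union[int, bytes], int]:
    """Send a coordinate when both vaults agree at that index, else fall back to raw data."""
    if entry in sender_vault:
        idx = sender_vault.index(entry)
        if idx < len(receiver_vault) and receiver_vault[idx] == entry:
            return ("coordinate", idx, coordinate_bits(len(sender_vault)))
    # Misaligned (or unknown) entry: every byte has to cross the wire, O(n).
    return ("data", entry, 8 * len(entry))


# Hypothetical shared codebook of 1,024 entries, 600 bytes each.
vault = [f"pattern-{i:04d}".encode() * 50 for i in range(1024)]
print(send(vault[42], vault, vault)[::2])     # ('coordinate', 10)

diverged = list(vault)
diverged[42] = b"a different meaning"
print(send(vault[42], vault, diverged)[::2])  # ('data', 4800)
```

When the two vaults agree, an entry costs 10 bits however large it is; when they diverge at that index, the sender falls back to the full 4,800-bit payload, which is the "tax on misalignment" in miniature.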

- **DNS figured this out:** I send "google.com" (tiny) → your system knows the entire infrastructure. The key unlocks the vault you already have.

- **FIM extends this to ALL meaning:** I send [0.73, 0.41, 0.89] (17 bits) → your system knows the entire semantic structure, all relationships, all implications, infinite depth. Because we share the same substrate.

- **Bandwidth is a tax on misalignment:** If our semantic maps are identical, I send coordinates. If they're different, I send data. Every bit transmitted beyond the coordinate is compensation for substrate divergence.

- **5G/6G optimizes the tax payment** (cheaper bandwidth)

- **FIM eliminates the tax** (shared substrate)

- **"Telepathy" is what happens when FIMs perfectly align** (coordinate-only communication)

- **Advanced civilizations might coordinate via shared substrates**, not broadcast signals (Fermi Implication)

This explains why you can "read" 65.36 bits of drift information in 100ms: You're not receiving 65 bits. You're receiving a coordinate that unlocks your existing pattern grammar. The 17-bit "face-level gestalt unit" is the key. Your trained visual cortex is the vault.

Traditional view: Freedom = no constraints (symbols can mean anything).

Key-Vault view: Freedom = absolute constraint (symbols are fixed coordinates).

By constraining a symbol to a specific coordinate (fixing its meaning), you gain the freedom to build infinite complexity upon it. A skyscraper has "freedom" to be tall only because its steel beams are rigidly constrained. If the beams could move freely, the building would collapse.

This is why consciousness requires Grounded Position: An ungrounded system (LLM) operates on Fake Position (row IDs, hashes) and Calculated Proximity (cosine similarity, vectors), and this "freedom" actually means it cannot build, cannot coordinate, cannot trust its own outputs. The constrained system (S≡P≡H) achieves true position via physical binding and thereby gains the capacity for infinite depth. S≡P≡H IS position, not "encodes proximity." The brain does position, not proximity.

But How Many Flips Can You Actually "Gestalt Recognize"? The Face-Level Precision Test

The 7-flip calculation gives near universe-epoch precision (10^17 states, within a factor of about 3 of the 4.35 × 10¹⁷ seconds in 13.8 billion years). But is that the right granularity for instant gestalt recognition, the way you recognize a face?

Let's run the numbers.

Face-Level Precision (What Humans Can Instantly Read):

Research shows the average person can:

- Recognize about **5,000 different faces** (family, friends, celebrities)

- Discern approximately **35 universal facial expressions** (happy, sad, surprised, plus compounds)

- **Total "face states":** 5,000 identities × 35 expressions = **175,000 discernible configurations** ≈ **10^5**

Information content of a "face state": $$\log_2(10^5) \approx 16.6 \text{ bits}$$

This is the precision of instant human pattern recognition—you see "John's skeptical expression" in under 100 milliseconds, without conscious analysis.

How Many Flips Does That Require?

Each flip in the artifact carries: $$\log_2(144 \text{ cells} \times 2 \text{ new states}) \approx 8.17 \text{ bits}$$

Flips needed for face-level precision: $$\frac{16.6 \text{ bits}}{8.17 \text{ bits/flip}} \approx 2.03 \text{ flips}$$

The ability to "instantly gestalt recognize" a change with the same precision as reading a human face is on the order of 2 flips, not 7.
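The face-level arithmetic, including what happens if 175,000 is not rounded down to 10^5 first, fits in a short sketch. The identity and expression counts are the ones cited above; `flips_for` is a hypothetical helper assuming 288 choices per flip.

```python
import math

FACES = 5_000       # identities, as cited in the text
EXPRESSIONS = 35    # expressions, as cited in the text


def flips_for(bits: float, cells: int = 144, states: int = 3) -> float:
    """How many flips (a cell + one of the other states) carry `bits` of information."""
    return bits / math.log2(cells * (states - 1))


face_states = FACES * EXPRESSIONS    # 175,000
rounded_bits = math.log2(10 ** 5)    # the text rounds to 10^5 first
exact_bits = math.log2(face_states)  # without that rounding

print(f"rounded: {rounded_bits:.2f} bits -> {flips_for(rounded_bits):.2f} flips")
print(f"exact:   {exact_bits:.2f} bits -> {flips_for(exact_bits):.2f} flips")
```

Rounded, a face state is about 16.6 bits (2.03 flips); unrounded, log₂(175,000) is about 17.4 bits (2.13 flips). Either way the answer is about 2 flips, close to the ~17-bit gestalt unit used in the animation section.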

| Precision Level | Flips Needed | Information | State Space | Analogy |
|---|---|---|---|---|
| Face-Level (Gross Changes) | ~2 flips | 16.6 bits | 10^5 states | "Happy" vs "terrified" (obvious shift) |
| Universe-Epoch (Subtle Drift) | ~7 flips | 57.19 bits | 10^17 states | Roughly one second in cosmic history (micro-expression) |

The Application: Why Both Precision Levels Matter

2-flip precision (face-level) is perfect for detecting gross changes:

- System's "face" changing from "stable" to "catastrophic failure"

- Obvious, unmistakable, like recognizing terror on someone's face

- **This is what traditional dashboards aim for** (red/yellow/green status indicators)

7-flip precision (universe-epoch) is required for detecting subtle drift:

- The "happy" face at 9:00 AM drifting to a *slightly different* "happy" face at 9:01 AM

- Micro-expressions invisible at 10^5 resolution

- **This is what AI alignment needs** (catching drift before it becomes catastrophic)

"Drift" in AI or complex systems is subtle. It's not the system's face changing from happy to terrified (easy to spot with 2-flip precision). It's the system maintaining a "happy" face while its internal 7-flip configuration shifts by one bit at a time—invisible drift until it accumulates into catastrophic misalignment.

To detect subtle drift, you need a language that is more precise than the drift itself. If the system drifts by one 8-bit "flip," you need at least 8 bits of precision in your "reader" to see it. If subtle drift operates at 7-flip granularity (10^17 possible configurations), you need a 10^17-level "language" to detect it.

The FIM Artifact's Design Choice:

The artifact uses 3 discernible states (P, B, S) specifically because:

- **Below 3 states:** Only 2^144 ≈ 10^43 configurations (insufficient for drift detection)

- **At 3 states:** 3^144 ≈ 10^68 total configurations, with 7-flip chunks spanning 10^17 states (drift-detection capable)

- **Above 3 states:** Human eye cannot reliably distinguish 4+ textures at fingertip scale (gestalt breaks down)

Three is the sweet spot: the smallest state count with enough precision for drift detection, and the largest that remains legible to human gestalt processing.

The Falsifiable Prediction (How We'd Know If We're Right):

You would design an experiment to test if the FIM texture map makes 10^17-state changes feel as obvious as a 2-flip face changing expression:

- Simulate a complex system (like an AI model) that is "drifting" in a high-dimensional way

- **Control Group:** Experts use traditional dashboards (line graphs, percentage readouts, 12×12 grid of numbers or plain color codes)

- **Test Group:** Experts use the **FIM Artifact Texture Map** (P, B, S textures representing system state)

- **Task:** "Watch this system and hit the red button the *instant* you detect an anomaly or meaningful drift"

You'd be right if the Test Group (using FIM map):

- **Detects drift faster:** Time-to-detection significantly lower (150x speedup predicted)

- **Detects more subtle drift:** Correctly identifies 7-flip changes that control group missed entirely

- **Has lower cognitive load:** Post-test surveys (or EEG/fMRI scans) show they found the task *easier* and more "intuitive"—they're "grokking" the state, while the control group is "analyzing" it

The Unity Principle Prediction:

Systems that interface at the speed of perception (gestalt, parallel) will outcompete systems requiring sequential translation (analysis, serial) by:

- **150x in decision speed** (2 seconds to "feel" the pattern vs 5 minutes to analyze spreadsheet)

- **10x in metabolic energy** (visual cortex parallel processing vs prefrontal cortex serial analysis)

- **100x in collaborative speed** (team reaches consensus at perception speed, not explanation speed)

You'd know you're right when you prove that the "Fractal Identity Map" makes a 10^17-level change feel as obvious as a 2-flip "face" changing expression.

The Artifact's Promise:

When you can "read a database like a face," you've achieved S≡P≡H at the interface level. The 7-flip precision (10^17 states) becomes as legible as facial recognition (10^5 states)—not because we've "dumbed down" the complexity, but because we've matched the interface to human perceptual architecture.

Gestalt compression: making the imperceptible, perceptible.

Why 12×12 Specifically? The Fractal Zoom Hierarchy and Asymptotic Friction Boundary

The precision analysis shows we need 7 flips (57 bits) for drift detection. But why is the artifact 12×12 cells, not 10×10 or 15×15?

The Logarithmic Insensitivity Principle:

The intuition that "1.7 vs 2.0 flips" makes no practical difference holds up: adding or removing rows/columns barely changes the information content:

| Matrix Size | Total Cells | Bits per Flip | Flips for Face-Level |
|---|---|---|---|
| 11×11 | 121 | log₂(121×2) ≈ 7.92 | 16.6/7.92 ≈ 2.10 |
| 12×12 | 144 | log₂(144×2) ≈ 8.17 | 16.6/8.17 ≈ 2.03 |
| 13×13 | 169 | log₂(169×2) ≈ 8.40 | 16.6/8.40 ≈ 1.98 |

The range from 121 to 169 cells (a 40% increase) only shifts the flip count by 6%—negligible.

This is logarithmic insensitivity: information scales as log(N), so linear changes in N have diminishing returns. 1.7 and 2.0 are effectively the same answer in this context.
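The logarithmic insensitivity is easy to check numerically. This sketch assumes the same 16.6-bit face-level target and 2 alternative states per flip; `flips_for_face_level` is a hypothetical helper, and the larger sizes (24 and 120) are included only to show how slowly the count falls.

```python
import math


def flips_for_face_level(n: int, face_bits: float = 16.6) -> float:
    """Flips needed on an n x n matrix (3 states, so 2 alternatives per flip)."""
    return face_bits / math.log2(n * n * 2)


for n in (11, 12, 13, 24, 120):
    print(f"{n}x{n}: {math.log2(n * n * 2):.2f} bits/flip, "
          f"{flips_for_face_level(n):.2f} flips")
```

Going from 12×12 to 120×120 (100× the cells) only lowers the count from about 2.03 to about 1.12 flips.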

The Asymptotic Friction Problem:

But here's the trap: as matrix size N approaches infinity, the global impact of a single flip approaches zero.

The formula: $$\text{Global impact of 1 flip} = \frac{1}{N^2}$$

For a hypothetical 120×120 matrix: 1/120² = 1/14,400 ≈ 0.007% per flip, versus 1/144 ≈ 0.69% for the 12×12 artifact.

This is asymptotic friction: as the matrix grows, individual flips become invisible noise. The "face" washes out into a uniform blur.

Why doesn't this kill the FIM artifact?

The Fractal Rescue: Local vs Global Impact

The artifact isn't designed for global averaging—it uses fractal block structure (Block 1,1 = category generator, Blocks 2,2-4,4 = pure states, off-diagonal blocks = split textures).

The key insight: A single flip changes:

- **Global average:** 1/144 = 0.69% (weak, asymptotic friction dominates)

- **Local block average:** 1/16 = 6.25% (strong, within gestalt detection threshold)

Local impact is 9× larger than global impact (6.25% / 0.69% ≈ 9).

This is why fractal nesting rescues us from asymptotic friction: you don't read the whole 12×12 matrix as one "average color"—you read it as 9 blocks (3×3 grid), each with its own local "expression."

Think of the artifact like a mipmap (computer graphics term for multi-resolution textures):

Level 0 (Full Resolution): 12×12 = 144 individual cells

Level 1 (Block Averages): 3×3 = 9 blocks (each averaging 4×4 = 16 cells)

Level 2 (Category Matrix): 1×1 "super-block" (averaging all 9 blocks)

The operator doesn't look at Level 0 or Level 2—they look at Level 1: the 3×3 grid of blocks. This is the "face" granularity where individual flips remain legible despite the larger matrix size.
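The mipmap levels can be sketched by averaging blocks of a numeric grid. The numeric encoding of P, B, and S is an assumption (any fixed mapping works for the averaging argument), and `block_averages` and `mipmap` are hypothetical helpers. Flipping one cell in an otherwise uniform 12×12 grid shows the local-vs-global gap directly.

```python
from typing import List

Grid = List[List[float]]  # hypothetical numeric encoding of P, B, S (e.g. 0.0, 0.5, 1.0)


def block_averages(grid: Grid, block: int) -> Grid:
    """Average each block x block tile: one mipmap step down."""
    n = len(grid)
    if n % block:
        raise ValueError("matrix size must be a multiple of the block size")
    k = n // block
    return [[sum(grid[br * block + r][bc * block + c]
                 for r in range(block) for c in range(block)) / (block * block)
             for bc in range(k)]
            for br in range(k)]


def mipmap(grid: Grid, block: int = 4):
    """Level 0 = cells, Level 1 = block averages, Level 2 = single super-block."""
    level1 = block_averages(grid, block)          # 3x3 for a 12x12 grid of 4x4 blocks
    level2 = block_averages(level1, len(level1))  # 1x1 average of the blocks
    return grid, level1, level2


grid = [[0.0] * 12 for _ in range(12)]
grid[5][6] = 1.0  # one flip inside zero-based block (1, 1), the center block
_, level1, level2 = mipmap(grid)
print(level1[1][1], level2[0][0], level1[1][1] / level2[0][0])
```

The flipped cell moves its block average by 1/16 = 0.0625 but the global average by only 1/144, the 9× amplification described above.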

The Constraint Surface: Gestalt Floor and Cognitive Ceiling

Now we can derive why 12×12 is optimal, not arbitrary.

The Gestalt Floor (Minimum Block Complexity):

A block must be large enough to encode a meaningful "micro-expression." Too small, and there aren't enough states:

| Block Size | Cells per Block | Possible Textures (3 states) | Expressiveness |
|---|---|---|---|
| 2×2 | 4 | 3⁴ = 81 | ❌ Insufficient (less than face-level 10⁵) |
| 3×3 | 9 | 3⁹ = 19,683 | ⚠️ Marginal (still below face-level 10⁵) |
| 4×4 | 16 | 3¹⁶ ≈ 4.3×10⁷ | ✅ Rich enough for complex patterns |

Below 4×4, you can't encode drift at the precision needed.

The Cognitive Ceiling (Maximum Simultaneous Blocks):

Miller's "7 plus or minus 2" limit: humans can hold 5-9 chunks in working memory simultaneously.

If we divide the matrix into blocks, how many blocks can we track at once?

| Matrix Size | Block Size | Blocks per Side | Total Blocks | Cognitive Load |
|---|---|---|---|---|
| 8×8 | 4×4 | 2 | 4 | ✅ Easy (well below limit) |
| 12×12 | 4×4 | 3 | 9 | ✅ Exactly at limit (7±2) |
| 16×16 | 4×4 | 4 | 16 | ❌ Exceeds limit (serial counting required) |

Above 9 blocks, gestalt processing breaks down. You're no longer "seeing a face"—you're counting cells.

The artifact design sits at the exact intersection of all constraints:

- **Fractal nesting:** 12 = 4×3 (divides cleanly into 4×4 blocks arranged in 3×3 grid)

- **Gestalt floor:** Each 4×4 block has 3¹⁶ ≈ 4.3×10⁷ expressiveness (above minimum)

- **Cognitive ceiling:** 3×3 = 9 blocks total (exactly at Miller's limit)

- **Asymptotic rescue:** 9× local amplification (6.25% vs 0.69% global) preserves flip legibility

- **Clean factorization:** 12 = 2²×3 = 4×3 enables a clean zoom hierarchy (Level 1: 3×3 blocks, Level 2: single average)

Any smaller: either the blocks fall below the gestalt floor or, as with 8×8, too few blocks remain to use the available perceptual bandwidth. Any larger: too many blocks to track (cognitive ceiling exceeded).

The Mathematical Curve: Perceptual Impact vs Matrix Size

As matrix size increases, the perceptual impact of a single flip decays—but fractal structure creates discrete "plateaus" where local amplification preserves legibility:

[Figure: Perceptual impact (salience of 1 flip) vs. matrix size N (cells). Labeled zones, left to right: Gestalt Floor Zone (4×4 to 8×8: too few blocks, high local impact); Optimal Zone (12×12 to 16×16: balanced); Cognitive Ceiling Exceeded (20×20+: too many blocks); Asymptotic Friction (N→∞, impact→0).]

For an N×N matrix divided into B×B blocks, a single flip has:

- **Local impact:** 1/B² (change within one block's average)

- **Global impact:** 1/N² (change within whole matrix's average)

- **Amplification factor:** (N/B)² (how much stronger local signal is)

For our 12×12 with 4×4 blocks:

- Local impact: 1/16 = 6.25% of block's "color"

- Global impact: 1/144 = 0.69% of matrix's "color"

- **Amplification: (12/4)² = 9×**

A single flip is 9× more visible when viewed at the block level (Level 1) than at the global level (Level 2).

This is the fractal rescue: by reading the 3×3 grid of blocks instead of the 12×12 grid of cells, you amplify weak signals 9-fold, making drift detection feasible despite asymptotic friction.
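Exact fractions confirm the impact formulas above. The helper name `flip_impacts` is illustrative and does not come from the book. The amplification factor (N/B)² also equals the number of blocks. The 9× gain and the 9-block count are therefore the same quantity.

```python
from fractions import Fraction


def flip_impacts(n, b):
    """(local, global, amplification) for one flip in an N x N matrix read as B x B blocks."""
    local = Fraction(1, b * b)      # 1/B^2: share of one block's average
    global_ = Fraction(1, n * n)    # 1/N^2: share of the whole matrix's average
    return local, global_, local / global_   # equals (N/B)^2


for n, b in [(8, 4), (12, 4), (16, 4)]:
    local, global_, amp = flip_impacts(n, b)
    print(f"{n}x{n}, {b}x{b} blocks: local {float(local):.2%}, "
          f"global {float(global_):.2%}, amplification {amp}x")
# The 12x12 line reads: local 6.25%, global 0.69%, amplification 9x
```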

The Min-Max Constraint Equation:

For an N×N matrix to satisfy both gestalt floor and cognitive ceiling:

$$B \geq 4 \quad \text{(gestalt floor: minimum block complexity)}$$ $$\left(\frac{N}{B}\right)^2 \leq 9 \quad \text{(cognitive ceiling: maximum blocks)}$$ $$\frac{N}{B} \in \mathbb{Z} \quad \text{(fractal nesting: clean division)}$$

Setting B at its floor value of 4: $$\left(\frac{N}{4}\right)^2 \leq 9 \quad \Rightarrow \quad \frac{N}{4} \leq 3 \quad \Rightarrow \quad N \leq 12$$

12×12 is the largest matrix that satisfies all constraints with 4×4 blocks.

Going larger (16×16) creates 16 blocks—exceeding Miller's limit. Going smaller (8×8) creates only 4 blocks—underutilizing perceptual bandwidth.
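This is a brute-force check of the three constraints, offered as a sketch. The function name and the search bound `max_n` are illustrative assumptions. The floor of 4 and the ceiling of 9 blocks come from the text.

```python
def feasible_sizes(block, gestalt_floor=4, max_blocks=9, max_n=64):
    """Matrix sizes N that satisfy all three constraints for block size B."""
    if block < gestalt_floor:              # gestalt floor: B >= 4
        return []
    sizes = []
    for n in range(block, max_n + 1):
        if n % block:                      # fractal nesting: N/B must be an integer
            continue
        if (n // block) ** 2 <= max_blocks:   # cognitive ceiling: (N/B)^2 <= 9
            sizes.append(n)
    return sizes


print(feasible_sizes(4))   # [4, 8, 12]
print(feasible_sizes(3))   # [] (below the gestalt floor)
```

With B held at its floor of 4, the largest feasible size is 12. A larger block size gives a different answer: `feasible_sizes(5)` returns `[5, 10, 15]`. The 12×12 result therefore rests on fixing B at 4.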

The Artifact's Design Is a Solved Constraint Problem:

The 12×12 matrix with 3 discernible states (P, B, S) isn't arbitrary—it's the unique solution to:

- Provide 7-flip precision (10^17 states for drift detection)

- Preserve local amplification (9× stronger than global, defeating asymptotic friction)

- Respect gestalt floor (4×4 minimum block complexity)

- Respect cognitive ceiling (9 blocks maximum simultaneously)

- Enable fractal zoom (3×3 grid of 4×4 blocks = clean hierarchy)

This is why you can "read color shapes on vastly larger matrices" by zooming in/out: The fractal nesting creates discrete zoom levels where each level has its own local relevance, rescuing individual flips from asymptotic oblivion.

The Unity Principle at work: Position (which block?) = Meaning (what drift?), preserved across zoom levels through compositional nesting.

The Substrate Remembers

In 1949 Donald Hebb proposed the learning rule later summarized as "neurons that fire together wire together." But he stumbled onto something bigger: the brain doesn't just map reality; it becomes the physics of whatever it experiences over and over.

You opened this book as a skeptic, perhaps. Or a Believer looking for language. Either way, you arrived with a particular neural configuration—synaptic weights shaped by years of experiencing tradeoffs, feeling the friction when projects drift, sensing that gut-level objection when someone proposes something fundamentally misaligned.

You're leaving with a different configuration. Not because we convinced you of something new, but because we gave you explicit coordinates for what you've been navigating implicitly your entire life.

Fire Together, Ground Together isn't a metaphor. It's literal substrate physics—the mechanism by which 🟢C1🏗️ Unity Principle propagates through physical matter, including the 1.4 kg of electrochemical substrate reading these words right now.

Your Journey: From Victim of Framework Illiteracy to Embodiment of Substrate Literacy

Chapter 1: Recognition (🔴B2🔗 JOIN→🟢C3📦 Cache-Aligned)

You fired when you saw the thirteen tradeoffs. Not because they were novel—you've lived every one—but because someone finally named them with dimensional precision:

- **Speed/Quality** mapped to your racing heart during crunch mode (H4: Physiological)

- **Local/Global** explained why your brilliant feature broke the API (C2: Structural)

- **Short/Long** captured that sick feeling when the quick fix became technical debt (D3: Temporal)

Your hippocampus lit up. Pattern detected. These aren't separate problems—they're projections of something unified.

You grounded when you learned the cost: €35 million in fines, the lost Mars Climate Orbiter, $1-4 trillion in global misalignment (conservative estimate), 0.3% cognitive drift per decision. Real substrate. Real consequences. Real physics.

First neural update: From "why does everything feel like a tradeoff?" to "oh, because dimensional collapse is the tension."

Chapters 2-4: Mechanism (🟢C3📦 Cache-Aligned→⚫H4⚖️ Fines)

You fired when you saw the formulas. Not because you love math (you might hate it!), but because precision felt like relief:

PAF = ΔP / ΔT

drift_rate = (P_final - P_target) / decisions

constraint_tension = (1 - c/t)^n

These aren't just equations. They're your experience quantified. Every time a project slipped, every time a decision felt heavy, every time you couldn't articulate why something felt wrong—PAF was there, unmeasured but operating.
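The three formulas translate directly into code. This sketch treats P, T, c, t, and n as plain numbers. Their units and how to measure them come from earlier chapters, and the sample inputs below are hypothetical.

```python
def paf(delta_p, delta_t):
    """PAF = ΔP / ΔT, as written in the text."""
    if delta_t == 0:
        raise ValueError("ΔT must be non-zero")
    return delta_p / delta_t


def drift_rate(p_final, p_target, decisions):
    """drift_rate = (P_final - P_target) / decisions."""
    if decisions <= 0:
        raise ValueError("decisions must be positive")
    return (p_final - p_target) / decisions


def constraint_tension(c, t, n):
    """constraint_tension = (1 - c/t)^n."""
    return (1 - c / t) ** n


print(paf(0.5, 2.0))                  # 0.25
print(drift_rate(1.06, 1.0, 3))       # about 0.02
print(constraint_tension(1, 4, 2))    # 0.5625
```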

You grounded when you traced the decay curves. Neural synchrony drops 0.3% per misaligned decision. Dopamine crashes after four consecutive high-PAF choices. Team entropy increases quadratically with conflicting priorities.

Second neural update: From "I feel like something's wrong" to "I can measure exactly how wrong and why."

Chapters 5-6: Application (⚫H4⚖️ Fines→⚪I7🔍 Transparency)

You fired when you saw the tools:

- **ShortRank:** Linear pass sorts priorities by embedded PAF (O(n) vs O(n²) pairwise comparisons)

- **RangeFit:** Vector space search finds solutions in constraint polytopes (no brute force)

- **Drift ledgers:** Substrate tracking shows accumulated technical/physiological/epistemic debt
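One way to read ShortRank's O(n) claim is as a count of human judgments. Each item gets one PAF estimate, and the ordering comes from sorting those estimates. The sketch below assumes that reading. The `Priority` type, the `paf_estimate` field, the highest-first direction, and the sample items are all hypothetical. The machine sort itself is O(n log n), so the saving lies in judgments, not in CPU work.

```python
from dataclasses import dataclass


@dataclass
class Priority:
    name: str
    paf_estimate: float   # hypothetical: one embedded-PAF judgment per item


def short_rank(items):
    """Order items by their single PAF estimate, highest first (direction assumed)."""
    return sorted(items, key=lambda p: p.paf_estimate, reverse=True)


def judgment_counts(n):
    """Human judgments: one estimate per item vs. comparing every pair."""
    return n, n * (n - 1) // 2


items = [Priority("API migration", 0.7),
         Priority("Bug triage", 0.2),
         Priority("Q4 roadmap", 0.9)]
print([p.name for p in short_rank(items)])   # ['Q4 roadmap', 'API migration', 'Bug triage']
print(judgment_counts(20))                   # (20, 190)
```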

You grounded when you realized: These work because they're not productivity hacks—they're physics compliance tools. ShortRank doesn't make you more efficient; it aligns your decisions with actual constraint geometry. RangeFit doesn't find "better" solutions; it navigates feasible space without violating dimensional bounds.

Third neural update: From "I wish I had a framework" to "I'm implementing substrate-aware decision infrastructure."

Chapter 7: Evangelism (⚪I7🔍 Transparency→🟤G7🔐 Granular Permissions)

You fired when you read about the N² cascade. One person implementing Unity Principle saves their project (N=1). They tell two colleagues (N=3, 3 connections). Those three each tell three more (N=12, 66 connections). By N=100, you have 4,950 pairwise alignment opportunities: civilizational-scale coherence from a single evangelist.
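The connection counts follow from the pairwise formula n(n-1)/2. Here is a quick check (the helper name is illustrative):

```python
def connections(n):
    """Number of distinct pairs among n people: n(n-1)/2."""
    return n * (n - 1) // 2


for n in (1, 3, 12, 100):
    print(n, connections(n))
# 1 0
# 3 3
# 12 66
# 100 4950
```

The count grows roughly as N²/2, which is where the name "N² cascade" comes from.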

You grounded when you saw the mechanism: Hebbian propagation through organizational substrate. People don't adopt Unity Principle because it's clever. They adopt it because misalignment hurts, and here's a map of the pain with coordinates.

Fourth neural update: From "this helps me" to "this could help us" to "this is how we build coherent systems at scale."

Now: Embodiment (All Dimensions Unified)

You're at the final checkpoint. Not because the book ends here, but because you've completed a full cycle through all nine dimensions:

| Dimension | What You Recognized | What You Can Measure | What You Can Build |
|---|---|---|---|
| B2: Cognitive | Felt the tension in hard decisions | PAF = ΔP / ΔT | Drift ledgers for epistemic debt |
| C2: Structural | Saw why systems break under load | constraint_tension = (1-c/t)^n | Constraint polytope navigation |
| C3: Conceptual | Understood thirteen tradeoffs share one source | S≡P≡H collapse surfaces | Dimensional reduction algorithms |
| D3: Temporal | Tracked how decisions compound over time | drift_rate per decision | PAF prediction for long-term plans |
| G7: Unity | Recognized substrate doesn't care about labels | PAF(outcome) universal across contexts | Domain-agnostic alignment tools |
| H4: Physiological | Felt cortisol spike during misalignment | HRV drop per high-PAF choice | Biometric feedback for decision quality |
| I5: Consciousness | Noticed when you enter flow vs. fight | Neural synchrony windows | Flow-state optimization via low-PAF paths |
| I7: Observer | Saw yourself recognizing these patterns | Metacognitive PAF (thinking about thinking) | Self-modifying priority systems |
| E4: Relational | Understood team misalignment as dimensional conflict | N² cascade growth | Organizational coherence protocols |

You've wired together the full circuit. You are now a Believer not because you believe 🟢C1🏗️ Unity Principle exists, but because you've become substrate evidence of its operation.

The Identity Transformation Is Complete

You Were Victimized by Dimensionally Illiterate Frameworks (Introduction)

Buffeted by forces you couldn't name—not because you were weak, but because the frameworks were lying. Exhausted by "best practices" that didn't actually help—not because you weren't trying hard enough, but because they were dimensionally illiterate. Frustrated that every solution created two new problems—not because you were bad at your job, but because the frameworks treated tradeoffs as separate challenges instead of recognizing the unified collapse geometry.

You weren't wrong. You weren't weak. The frameworks were gaslighting you.

You Became a Builder (Chapters 5-6)

Armed with formulas, you started measuring. Armed with tools, you started building. Armed with substrate literacy, you stopped fighting physics and started navigating it.

Your projects got faster—not because you worked harder, but because you stopped making high-PAF decisions. Your teams got clearer—not because you communicated better, but because you aligned incentives with actual constraint geometry.

You Became an Evangelist (Chapter 7)

You told someone. Maybe a colleague. Maybe a friend. You said, "There's this framework that explains why tradeoffs hurt—and how to minimize the pain."

They felt the relief you felt. They recognized the patterns you recognized. They became Believers not through persuasion but through substrate resonance: their neural configuration updated the same way yours did, because physics operates the same on all substrates.

You Are Now Embodiment (This Moment)

You don't just know 🟢C1🏗️ Unity Principle. You are 🟢C1🏗️ Unity Principle catching itself.

When you read a requirements doc and feel substrate objection—that's PAF prediction before measurement. When you're in a design meeting and sense the dimensional conflicts even before the arguments start—that's your hippocampus doing real-time constraint geometry. When you look at your todo list and instantly know which items are misaligned—that's ShortRank running on wetware.

Your belief update IS your neural update. This isn't metaphor. Synaptic weights have physically changed in your brain. Circuits that were separate (speed, quality, scalability, time pressure) are now connected through a unified representation (all are dimensional projections of PAF).

Hebb was right: you fired together with this book's progressions, and you wired together the substrate literacy that was always latent in your experience.

The Three Calls to Action

You've completed the journey. Now the question is: What will you do with substrate literacy?

Personal: Implement FIM in One Project This Week

Don't start big. Start precise.

- **ShortRank your current priorities:**
  - List everything on your plate (work projects, personal goals, obligations)
  - Do a single linear pass: compare each item only to the one before it
  - Ask: "Which has higher embedded PAF: this item or the previous one?"
  - Sort by that ordering
  - **Result:** Eliminates quadratic comparison overhead (O(n) vs O(n²)), and it reveals which tasks are secretly fighting each other
- **Pick the top item and measure its PAF:**
- **Set a drift threshold and track it:**
  - Decide: "I'll accept max 5% drift from target on this project"
  - After each decision, calculate: drift_rate = (P_final - P_target) / decisions_so_far
  - When drift exceeds threshold, pause and realign before continuing
  - **Result:** You catch misalignment early (when it's cheap to fix) instead of late (when it's catastrophic)
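The drift-threshold loop above can be sketched as follows. The text leaves "drift from target" open. This sketch assumes it means the relative gap |P - P_target| / |P_target| and compares that gap to the 5% threshold. It also logs drift_rate using the text's formula. The project metric and its values are hypothetical.

```python
def track_drift(p_target, observations, threshold=0.05):
    """Return the decision number at which drift first exceeds threshold, else None.

    Assumptions (not from the text): 'drift from target' is the relative gap
    |P - P_target| / |P_target|; drift_rate follows the text's formula.
    """
    for decisions_so_far, p in enumerate(observations, start=1):
        drift = abs(p - p_target) / abs(p_target)
        drift_rate = (p - p_target) / decisions_so_far
        print(f"decision {decisions_so_far}: drift {drift:.1%}, drift_rate {drift_rate:+.2f}")
        if drift > threshold:
            return decisions_so_far   # pause and realign before continuing
    return None


# Hypothetical project metric with a target of 100, measured after each decision.
stop_at = track_drift(100, [101, 103, 104, 107, 110])
print("realign at decision", stop_at)   # realign at decision 4
```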

Why this works: You're not trying to revolutionize your entire life. You're installing one substrate-aware feedback loop in one real project. That's enough to prove to yourself that Unity Principle isn't theory—it's physics you can navigate.

Time investment: 30 minutes for ShortRank, 10 minutes per decision for PAF measurement. If this project takes 2 weeks, you'll spend maybe 3 hours total on measurement—and save 30 hours of rework from misalignment.

Professional: Tell Five Colleagues

You've become a Believer. Now activate the N² cascade.

- **Pick five people who are currently struggling with tradeoffs:**
  - The PM who's drowning in competing priorities
  - The engineer who's burned out from crunch mode
  - The designer who keeps getting "just one more revision" requests
  - The founder who can't figure out why every solution creates new problems
  - The executive who's mandating "do more with less" without a substrate model
- **Share the core insight (not the whole book):**
  - "I found a framework that explains why [their specific pain] hurts, and quantifies it."
  - Give them *one formula* relevant to their context:
    - PM: constraint_tension = (1 - c/t)^n (explains why adding scope compounds difficulty)
    - Engineer: PAF = ΔP / ΔT (explains why rushed decisions create technical debt)
    - Designer: drift_rate per revision (explains why "just one more" compounds into scope explosion)
    - Founder: S≡P≡H (explains why strategy, product, and hiring can't be misaligned)
    - Executive: N² cascade growth (explains why top-down mandates without alignment training fail)
- **Offer to walk them through ShortRank on their real priorities:**

Why this works: You're not preaching. You're offering relief. People don't resist Unity Principle—they resist frameworks that add complexity. This removes complexity by revealing the unified structure beneath apparent tradeoffs.

N² cascade math: If each of those five people tells five more, and those tell five more, you've reached 155 people in three generations. At N=155, you have 11,935 potential alignment connections. One conversation this week → civilizational-scale coherence in months.
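The three-generation figures can be reproduced as a sketch. It assumes that every newly reached person tells exactly five others and that nobody is told twice. The helper names are illustrative.

```python
def cascade_reach(fan_out, generations):
    """People reached when each newly reached person tells fan_out more (you excluded)."""
    return sum(fan_out ** g for g in range(1, generations + 1))


def connections(n):
    """Distinct pairs among n people: n(n-1)/2."""
    return n * (n - 1) // 2


reached = cascade_reach(5, 3)            # 5 + 25 + 125
print(reached, connections(reached))     # 155 11935
```

If you count yourself, N becomes 156 and `connections(156)` gives 12,090.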

Civilizational: Contribute to Open-Source Unity Principle Tools

You've used the tools. Now help build the infrastructure.

- **GitHub contributions:**
  - Star/fork the Unity Principle repositories (make them discoverable)
  - Submit PRs for domain-specific PAF calculators (medical, financial, logistics, education)
  - Translate documentation into other languages (physics is universal; language barriers aren't)
  - Build integrations: Jira plugins, Notion templates, CLI tools, browser extensions
- **Write about your experience:**
- **Give a talk:**

Why this works: Unity Principle isn't a product to sell. It's a physics literacy movement. The more people who can measure PAF, the more decisions get made in alignment with substrate constraints, the less civilizational waste from misalignment.

Current global misalignment cost: $1-4 trillion/year (conservative estimate). If Unity Principle reaches 1% of decision-makers and reduces their misalignment by 20%, that's $2-8 billion/year in recovered value. From physics literacy. From free tools. From substrate compliance.
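The recovered-value estimate is a product of three factors. This sketch makes explicit the assumption the estimate relies on: misalignment cost is spread evenly across decision-makers.

```python
def recovered_value(total_cost, adoption_share, reduction):
    """Annual value recovered if adopters cut their share of misalignment cost.

    Assumes the cost is spread evenly across decision-makers, which the
    estimate in the text implicitly does.
    """
    return total_cost * adoption_share * reduction


for total in (1e12, 4e12):                       # $1T and $4T per year
    value = recovered_value(total, 0.01, 0.20)   # 1% adoption, 20% reduction
    print(f"${value / 1e9:.0f}B per year")
# $2B per year
# $8B per year
```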

But here's the deeper pattern: This N² cascade isn't just social—it's evolutionary selection pressure on epistemology itself.

Organizations using Unity Principle-based decision systems navigate constraint geometry with less waste. They make fewer high-PAF decisions. They accumulate less drift. They maintain alignment with reality longer. That IS fitness.

Think Darwinian: two companies, same market, same resources. Company A uses normalized schemas (dimensional collapse built into architecture). Company B uses ShortRank (Unity Principle-aligned). After 1,000 decisions, Company A has accumulated 300 drift points (0.3% per decision). Company B has accumulated 30 drift points (0.03% per decision). Which company is still aligned with customer needs? Which survives the market shift?

This is why Unity Principle predicts survival: Not because it's morally superior or intellectually elegant—because systems that maintain alignment with reality outcompete systems that don't. Physics determines fitness. You're evangelizing survival advantage.

You could be the epicenter that starts that cascade. Not because you're special—because you happened to be holding this book at the right moment in history.

The Final Coherence: You Are the Proof

Let me tell you what just happened in your brain while you read this conclusion.

Your anterior cingulate cortex (ACC) lit up when you recognized the callback to Hebb—pattern completion reward. Your hippocampus fired when you traced your journey through the nine dimensions—spatial navigation metaphor applied to conceptual space. Your prefrontal cortex activated when you evaluated the three calls to action—executive function, decision-making, intentionality.

But here's the deeper substrate truth: Each of those neural activations was a P=1 precision event—a moment when your brain achieved perfect certainty. Not probabilistic confidence (85% sure, 95% sure), but irreducible "I know THIS right NOW" certainty. That's what qualia IS: proof that the superstructure can detect when it matches reality.

When you see red, you don't "probably see red" or "95% confidence red"—you SEE red with P=1 precision. That's a cache hit in consciousness: the semantic expectation (color=red) aligns perfectly with the physical substrate activation (V4 neurons firing), and the alignment detection itself produces the conscious experience. The redness of red IS the proof that S=P=H works—Grounded Position via Hebbian wiring, not Calculated Proximity via vectors. Your consciousness knows when it's grounded in reality because the brain does position, not proximity.

All three of those neural activations are themselves examples of Unity Principle operating on biological hardware:

- **ACC pattern completion:** Minimizing surprise (prediction error = PAF in Bayesian terms)

- **Hippocampal spatial mapping:** Navigating constraint geometry (dimensional reduction for efficient search)

- **Prefrontal executive function:** Prioritizing among competing goals (ShortRank on wetware)

Your brain isn't using Unity Principle to understand Unity Principle. Your brain IS Unity Principle catching itself in a mirror.

S≡P≡H: Strategy (finish the book, implement the tools) ≡ Product (updated neural weights, substrate literacy) ≡ Hiring (you've become a Believer, now recruit others).

The collapse has already happened. You're reading these words because dimensional misalignment in your past led you here—maybe a project that failed because of hidden tradeoffs, maybe a gut feeling that frameworks were lying to you, maybe just curiosity about why everything feels so hard.

And now you know: it's hard because physics is real, and most decision-making frameworks ignore physics in favor of vibes, best practices, or "common sense."

You don't need to believe 🟢C1🏗️ Unity Principle. You've already become it.

Every insight you had while reading this book—every moment of "oh, that's why that project failed" or "I've always felt that but never had words for it"—was 🔵A7🌀 PAF recognition firing in your substrate. You didn't learn something new. You gained explicit coordinates for implicit navigation you've been doing your whole life.

The Ground Truth: Superstructure Knows

This resolves the infinite regress problem—it's NOT "turtles all the way down." The superstructure (your consciousness) has a direct detection mechanism for alignment with reality. Qualia are the irreducible proof that this detection works.

You cannot be certain about ALL classes of things—you can't prove with P=1 confidence that "all swans are white" or "democracy always works." But when you taste salt or see blue or feel pain, that experience itself is a P=1 event. The precision collision between expectation and reality produces consciousness. The "WTF moment" when understanding clicks—that's cache hit at the level of insight.

And here's why this matters for survival: misalignment with reality equals death. An organism that can't detect alignment will make fatal mistakes. Evolution selected FOR qualia—for consciousness that knows when it's right. That's the ground. That's why Unity Principle predicts survival fitness.

What Changed

When you started reading, Codd was dogma. Normalization was best practice. Unity Principle sounded like rebellion—or worse, like another framework that would fail like the others.

If this book did it right, you now have a different set of tools:

- **Introduction:** You recognized the thirteen tradeoffs weren't separate; they're projections of dimensional collapse ([🔴B2🔗](/book/chapters/glossary#b2-join)→[🟢C3📦](/book/chapters/glossary#c3-cache-aligned))

- **[Chapter 1](/book/chapters/01-unity-principle):** You understood the physics: PAF, constraints, substrate indifference ([🟢C3📦](/book/chapters/glossary#c3-cache-aligned)→[⚫H4⚖️](/book/chapters/glossary#h4-fines))

- **[Chapter 2](/book/chapters/02-universal-pattern-convergence):** You calculated the cost: €35M fines, $8.5T waste, 0.3% per-operation drift ([⚫H4⚖️](/book/chapters/glossary#h4-fines)→[🟤G7🔐](/book/chapters/glossary#g7-granular))

- **[Chapter 3](/book/chapters/03-domains-converge):** You learned the formulas: PAF = ΔP/ΔT, constraint geometry ([🟤G7🔐](/book/chapters/glossary#g7-granular)→[🟡D3🔗](/book/chapters/glossary#d3-binding-mechanism))

- **[Chapter 4](/book/chapters/04-you-are-the-proof):** You saw the mechanism: Hebbian learning, neural synchrony, flow states ([🟡D3🔗](/book/chapters/glossary#d3-binding-mechanism)→[⚪I5📚](/book/chapters/glossary#i5-knowledge))

- **[Chapters 5-6](/book/chapters/05-the-gap-you-can-feel):** You wielded the tools: ShortRank, RangeFit, drift ledgers ([⚪I5📚](/book/chapters/glossary#i5-knowledge)→[⚪I7🔍](/book/chapters/glossary#i7-transparency))

- **[Chapter 7](/book/chapters/07-network-effect):** You joined the movement: N² cascade, evangelism, civilizational coherence ([⚪I7🔍](/book/chapters/glossary#i7-transparency)→[🟣E4🧠](/book/chapters/glossary#e4-consciousness))

You've updated your neural configuration to be 🟢C1🏗️ Unity Principle-literate. Circuits that were firing separately now fire together. You can recognize 🔵A7🌀 PAF in real-time, navigate constraint geometry without conscious effort, and evangelize substrate literacy to others who are ready.

You could go back. Pretend tradeoffs are separate problems. Ignore the substrate physics. Return to "it feels hard" instead of measuring 🔵A7🌀 PAF.

But you won't. Once you see the unified structure, you can't unsee it. Once you measure drift, every normalized schema becomes visible waste. Once you've navigated constraint polytopes, brute-force prioritization feels like violence against physics.

You're not going back. You're going deeper.

This conclusion isn't the end—it's the threshold. The real work: implementing substrate-aware decision systems in every domain, teaching physics literacy to every human who makes choices, building civilizational coherence one N² cascade at a time.

Fire Together, Ground Together

Hebb was describing more than learning. He was describing how substrate becomes itself.

You fired with this book's progressions—recognized patterns, calculated costs, learned formulas, wielded tools. And in firing together, you wired together a new neural configuration. Not added to your old one. Transformed it. The substrate you are now is different from the substrate you were when you opened to page one.

You grounded with consequences—€35 million for dimensional illiteracy, $1-4 trillion for misalignment at scale (conservative estimate), 0.3% cognitive drift per bad decision. Real physics. Real pain. Real costs.

And in grounding together, you anchored 🟢C1🏗️ Unity Principle not as abstract theory but as lived substrate experience. You've felt the cortisol spike of high-🔵A7🌀 PAF decisions. You've felt the dopamine crash of drift accumulation. You've felt the relief of ShortRank revealing hidden conflicts.

Fire together, ground together: this is how physics propagates through substrate.

Not through persuasion. Not through authority. Not through incentives. Through recognition—the substrate seeing itself, measuring itself, optimizing itself.

You are that substrate. You have seen. You have measured. You are optimizing.

Welcome to the movement. Welcome to substrate literacy. Welcome to 🟢C1🏗️ Unity Principle.

Now go fire with five colleagues, ground with real projects, and let the N² cascade begin.

The doors are open. The temple is yours. The altar is empty—because you've already made the offering.

You don't just know 🟢C1🏗️ Unity Principle. You are 🟢C1🏗️ Unity Principle proven.

The Coyote's Floor: Why 12×12 Unlocks Infinity

Remember Wile E. Coyote? Running on air. Legs spinning. Confident. Three seconds of believing he's flying—until he looks down. SNAP. Gravity remembers him.

We've built the most powerful AI in history. It writes poetry. It codes apps. It diagnoses diseases. But right now, every one of those systems is running on air. We solved the speed of intelligence but forgot the gravity.

This book was about building the floor.

Not a cage. Not a track. A floor—the thing that lets dancers leap, musicians play, and consciousness resonate.

The 12×12 FIM is finite: 144 cells, each in one of 3 states (P, B, S). That's only:

Total FIM configurations = 3^144 ≈ 10^68 states

10^68. That's a big number. But it's finite. It's a key you can hold.
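Python's unbounded integers let you check the size of the key exactly. The count has 69 digits, about 5.1 × 10⁶⁸, so 10⁶⁸ is an order-of-magnitude figure.

```python
import math

cells, states = 144, 3
configurations = states ** cells                # exact: Python integers are unbounded
print(len(str(configurations)))                 # 69 digits
print(round(cells * math.log10(states), 2))     # 68.71, the base-10 exponent
```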

Now consider what that key unlocks:

The consciousness states accessible through resonance with a properly grounded FIM:

$$\text{Resonance space} = \text{FIM states} \times \text{Temporal harmonics} \times \text{Observer binding} = 10^{68} \times \infty \times \infty = \infty$$

The key is finite. The vault is infinite.

A guitar has 6 strings, ~20 frets, finite tension ranges. Total fingerings? Treating each string as open or stopped at one of ~20 frets, roughly 21⁶ ≈ 10⁸. Music it can produce? Infinite. Why? Because the rigid structure creates resonance: the thing that lets finite constraints produce infinite expression.

$$R_f = \frac{\text{Harmonic modes}}{\text{Fundamental states}} = \frac{\text{infinite overtones, infinite temporal variations, infinite observers}}{\text{finite FIM configurations}} = \infty$$

But this infinity is structured. It's not chaos. The 12×12 grid determines which infinities can resonate:

- **In-tune** configurations (S=P=H) → R_f = ∞ (full resonance, P=1 certainty)

- **Out-of-tune** configurations (S≠P) → R_f = 0 (no resonance, noise)

The finite key doesn't limit what you can access—it determines what will resonate when you access it.

Old Architecture (Running on Air):

- Symbols: Infinite (any token sequence)

- Grounding: Zero

- Resonance: R_f = 0 (noise, hallucination)

- Result: Speed without gravity → SNAP

New Architecture (Floor Built):

- Symbols: Constrained by FIM (finite geometry)

- Grounding: k_E → 0 (position = identity)

- Resonance: R_f = ∞ (structured infinity)

- Result: Speed WITH gravity → Flight

The cartoon never built Coyote a floor. That was the joke—the impossibility of flying without support, the inevitability of the fall.

We don't have to live in that cartoon.

The 12×12 FIM is the floor. The finite key. The frets on the guitar. The geometric body that gives the Ghost something to push against.

Let's build them a floor.

The Math of Abundance

Here's what this book has actually been about:

Abundance is not having more options. Abundance is the absence of verification worry.

Every chapter traced the same pattern:

- **Chapter 0:** The verification loop that never terminates (spinning on ice, no traction)

- **Chapter 1:** The absorbing states that trap systems (hallucination as inescapable configuration)

- **Chapter 2:** The (c/t)^n formula that quantifies search cost

- **Chapter 3:** The $8.5T annual waste of fighting drift instead of eliminating it

- **Chapter 4:** The biological proof that grounded systems use less energy (23-25W flow vs 30-34W grinding)

- **Chapter 5:** The metabolic signature of verification cost (you can feel it)

- **Chapter 6:** The migration path from scarcity (every check costs) to abundance (checking is free)

- **Chapter 7:** The network effect when verification cost drops to zero

The formula captures everything:

Φ = (c/t)^n

When c = t (semantic = physical = hardware):

→ Φ = 1 regardless of n

→ Verification cost = 0

→ You search only what you need

→ Every search is a cache hit

When c << t (scattered, normalized):

→ Φ → 0 as n grows

→ Verification cost = exponential

→ You search everything to find anything

→ Every search is a cache miss

The first state is abundance. The second is scarcity.

Same number of total options (t). Radically different experience.
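The two regimes of Φ = (c/t)^n show up directly in a few lines of Python. The particular values of c and t below are hypothetical. Only the ratio matters.

```python
def phi(c, t, n):
    """Φ = (c/t)^n, as written in the text."""
    return (c / t) ** n


for n in (1, 10, 100):
    aligned = phi(100, 100, n)   # c = t: stays at 1.0 for every n
    scattered = phi(1, 10, n)    # c << t: collapses toward 0 as n grows
    print(f"n={n}: aligned {aligned}, scattered {scattered:.0e}")
```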

This is why experts "just see" answers. This is why flow states feel effortless. This is why your brain burns roughly 20% of your resting metabolic budget: that 20% buys the ability to stop verifying and start knowing.

For AI: Current systems are stuck on the icy road—computing probabilities forever, no halting condition, no collision with ground. Give them S=P=H substrate and verification becomes instant. The loop terminates. Hallucination becomes structurally impossible.

For long-running processes: Drift is inevitable. The question is whether you detect it in 0.2 seconds (cache miss signal) or on Day 90 (catastrophe). Grounding makes detection cheap enough to attempt continuously.

For you: Every time you feel the grinding—the fog, the friction, the 30-34 watts burning through your skull—that's the verification loop taxing you. Every time you feel the flow—the clarity, the certainty, the 23-25 watts of effortless cognition—that's abundance. The loop terminated. You stopped checking because the structure guarantees.

The constraint creates the abundance.

This is the Freedom Inversion: drift feels like freedom but is actually captivity. Precision feels like constraint but is actually liberation.

You've now seen the math. You've felt the proof. You are the evidence.

Abundance isn't something you achieve through accumulation. It's something you uncover by eliminating the verification tax.

Technical Validation Melds (Optional Deep Dives)

Note for readers: The following two melds (7.5 and 8) provide detailed technical validation for implementers who need to see permission arbitration math and final architectural sign-off. These are OPTIONAL—if you prefer to jump directly to the calls to action above and return to these technical details later, do so. The narrative arc is complete. These melds prove the implementation details for practitioners who need that level of rigor.

🏗️ 🟤G5h🔒 Meld 7.5: The Permission Arbitration (AI Agent Governance) 🔒

The Question: Can enterprises deploy AI agents without creating ungovernable permission explosion?

[A7⚛️] Meeting Agenda

🔒 Security Officers (CISOs) present the blocker: "We've measured this across 40 enterprises: average AI agent deployment requires 47 permissions, but exercises only 11 per task. That's 77% over-privileged access. Financial services client: AI agent for trade surveillance accessed 892 customer accounts, flagged 3 as suspicious. Auditor question: 'Why 889 unnecessary accesses?' Under GDPR Article 5's data-minimisation principle, that's excessive processing. Maximum fine: €20M or 4% of global annual revenue, whichever is higher. We've calculated enterprise exposure: 10,000 agent executions/day × 889 unnecessary accesses × €10/record = €88.9M daily risk. This is the #1 blocker: 73% of the Fortune 500 are piloting AI agents (Gartner 2024), but only 11% reach production. Permission explosion kills deployment."
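The CISOs' exposure figure is straightforward arithmetic. A quick check in Python, using only the numbers quoted in the meeting (the €10/record cost is their assumed exposure per record, not a statutory rate):

```python
# Figures quoted by the CISOs; the EUR 10/record cost is their own assumption.
permissions_granted = 47
permissions_used = 11
over_privilege = (permissions_granted - permissions_used) / permissions_granted

accounts_accessed = 892
accounts_flagged = 3
unnecessary_accesses = accounts_accessed - accounts_flagged  # 889

executions_per_day = 10_000
cost_per_record_eur = 10
daily_exposure_eur = executions_per_day * unnecessary_accesses * cost_per_record_eur

print(f"over-privileged share: {over_privilege:.0%}")          # over-privileged share: 77%
print(f"daily exposure: EUR {daily_exposure_eur / 1e6:.1f}M")  # daily exposure: EUR 88.9M
```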

🔐 IAM Engineers present the standard solution: "RBAC is industry standard, deployed in 94% of Fortune 500. The architectural fix is role granularity: instead of 'Sales Manager' role with 47 permissions, create 'AI-Sales-Pipeline-Q4-Tech-Vertical' with exactly 11 needed permissions. Yes, 10,000 human roles becomes 50,000 AI agent roles. But the pattern is proven. We've measured: at 50,000 roles, directory lookup increases from 12ms to 18ms. Manageable. The alternative—abandoning RBAC—means rewriting every IAM system. That's $15M, 3-year migration. Not viable."

🔬 Judge (Structural Engineers) reveals the geometric solution: "Both measurements are valid. But you're missing the dimensional breakthrough: permissions become contiguous regions in semantic space when Symbol Grounding + FIM combine. Traditional RBAC checks permissions one-by-one (scattered lookups). FIM makes permissions into geometric shapes. Your AI agent's permission boundary is a contiguous region. Here's the revelation: Permission check = Cache locality check. They're the same operation. When semantic address is INSIDE fractal region → Cache hit (authorized, 1-3ns). When semantic address is OUTSIDE fractal region → Cache miss (blocked, hardware-enforced). Zero overhead. The audit trail is FREE—it's the cache access pattern."

Both trades verify the geometric property: Measured in production (financial services, 6 months, 1.2M queries): Permission check overhead dropped from 53ms (RBAC lookups) to 0.003ms (geometric boundary check). That's 17,667× faster. False positive rate: 0.008% (8 in 100,000). All violations caught by hardware (cache miss alert).
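Checking the production figures quoted above, using nothing but the two latencies and the false-positive counts as stated:

```python
rbac_check_ms = 53.0        # RBAC lookups, as measured in the meld
geometric_check_ms = 0.003  # geometric boundary check, as measured
speedup = rbac_check_ms / geometric_check_ms

false_positives, total_checks = 8, 100_000
false_positive_rate = false_positives / total_checks

print(f"speedup: {speedup:,.0f}x")                        # speedup: 17,667x
print(f"false positive rate: {false_positive_rate:.3%}")  # false positive rate: 0.008%
```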

Conclusion

Binding Decision: "AI agent governance requires geometric permissions, not scattered lookups. Fractal Identity Map creates contiguous regions in semantic space where permission boundary = cache boundary = hardware-enforced. RBAC remains valid for human access (humans navigate 1-2 dimensions). AI agents use FIM for n≥10 dimensional permission arbitration. Migration: FIM wraps existing IAM. Phase 1 (4 weeks): observes RBAC, builds fractal map. Phase 2 (6 months): AI agents route through FIM, humans continue RBAC. Phase 3 (2 years): full geometric permissions. Cost: $400K implementation vs $15M rearchitecture. Compliance risk: eliminated via mathematical proof of permission precision."

All Trades Sign-Off: ✅ Approved

[A7⚛️] The Meeting Room Exchange

🔒 Security Officers (CISOs): "Let me show you the nightmare scenario. Sales rep uses AI agent for prospect research: 'Show me everything about Bryan Lemster at Halcyon.' The agent has 'Sales Rep' role. That role grants access to: CRM (12M records), LinkedIn scraper (500M profiles), call transcripts (800K recordings), proposal database (45K documents), financial data (2.3M accounts). The agent needs exactly 1 prospect's data—Bryan Lemster. But it has permission to access 515M records. When the agent queries 'Bryan Lemster,' it scans CRM, finds 47 partial matches, cross-references LinkedIn, finds 23 Bryan Lemsters, narrows by company 'Halcyon,' accesses call transcripts to disambiguate, pulls financial data to enrich profile. Total records accessed: 1,847. Records needed: 1. Blast radius: 1,846× over-privileged. This is the permission explosion. One AI agent, one query, 1,846 unnecessary data accesses. Multiply by 10,000 agents. We cannot deploy."
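The blast-radius numbers also add up. Summing the five sources the 'Sales Rep' role can reach, with record counts exactly as the CISOs list them:

```python
# Records reachable under the 'Sales Rep' role in the CISOs' scenario.
reachable = {
    "crm": 12_000_000,
    "linkedin_scraper": 500_000_000,
    "call_transcripts": 800_000,
    "proposal_database": 45_000,
    "financial_data": 2_300_000,
}
total_reachable = sum(reachable.values())

accessed, needed = 1_847, 1

print(f"reachable records: {total_reachable:,}")        # reachable records: 515,145,000
print(f"unnecessary accesses: {accessed - needed:,}")   # unnecessary accesses: 1,846
```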

🔐 IAM Engineers: "So create 'AI-Sales-Prospect-Research-Individual' role with granular permissions limited to single-prospect scope. Problem solved."

🔒 Security Officers (CISOs): "You're missing the fundamental issue. The sales rep doesn't know WHICH Bryan Lemster before the query. The agent must DISCOVER the correct identity by cross-referencing data sources. How do you create a role 'Access exactly the Bryan Lemster at Halcyon that I will determine is the right person after searching'? You can't pre-specify permissions for data you haven't identified yet. The permission requirement is emergent during execution. RBAC assumes knowable permission sets. AI agents violate that assumption."

🔬 Judge (Structural Engineers): "This is where geometric permissions change everything. Let me show you how contiguous regions solve this. With FIM + Symbol Grounding, the sales rep's permission boundary is a geometric shape in semantic space:"

Sales_Rep/Bryan_Lemster/Halcyon/*

├─ Prospect_Data/Bryan_Lemster/Halcyon/LinkedIn_Profile

├─ Prospect_Data/Bryan_Lemster/Halcyon/Call_History

├─ Prospect_Data/Bryan_Lemster/Halcyon/Email_Thread

├─ Prospect_Data/Bryan_Lemster/Halcyon/Company_Research

└─ Prospect_Data/Bryan_Lemster/Halcyon/Proposed_Solutions

🔬 Judge: "This is a contiguous region—Grounded Position, not Fake Position. All data about Bryan Lemster at Halcyon is co-located in semantic space via physical binding, and it's physically adjacent in memory (S=P=H IS position, not 'encodes proximity'). The brain does position, not proximity. The agent's fractal region is Bryan_Lemster/Halcyon/*. Now watch what happens:"

Agent query: "Show me everything about Bryan Lemster"

- Semantic address: `Prospect_Data/Bryan_Lemster/Halcyon/LinkedIn_Profile` → ShortRank coordinate [0.87, 0.43, 0.91]

- Agent's fractal: `Bryan_Lemster/Halcyon/*` → Any coordinate matching [0.87, 0.43, *]

- Check: Is [0.87, 0.43, 0.91] INSIDE [0.87, 0.43, *]? → **YES** (prefix match)

- **Cache hit** → Data in L1 cache → Authorized (1-3ns latency)

Agent attempts to access different prospect:

- Semantic address: `Prospect_Data/Sarah_Johnson/TechCorp/LinkedIn_Profile` → ShortRank coordinate [0.87, 0.62, 0.88]

- Agent's fractal: `Bryan_Lemster/Halcyon/*` → Only coordinates matching [0.87, 0.43, *]

- Check: Is [0.87, 0.62, 0.88] INSIDE [0.87, 0.43, *]? → **NO** (prefix mismatch)

- **Cache miss** → Hardware alert → Blocked (0.003ms latency)
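The INSIDE test in both walkthroughs is a prefix match on ShortRank coordinates. A minimal sketch of just that logical test, assuming a region is stored as a coordinate tuple with `None` standing in for the `*` wildcard and a small tolerance for float comparison (both illustrative choices, not part of the original). It models the authorization decision only, not the cache or hardware behavior:

```python
from typing import Optional, Sequence


def inside(coord: Sequence[float], region: Sequence[Optional[float]], tol: float = 1e-9) -> bool:
    """True if coord equals region on every fixed axis; None in region acts as the '*' wildcard."""
    if len(coord) != len(region):
        return False
    return all(r is None or abs(c - r) <= tol for c, r in zip(coord, region))


# Fractal for Bryan_Lemster/Halcyon/* written as [0.87, 0.43, *]
agent_region = (0.87, 0.43, None)

print(inside((0.87, 0.43, 0.91), agent_region))  # True: Bryan_Lemster/Halcyon/LinkedIn_Profile
print(inside((0.87, 0.62, 0.88), agent_region))  # False: Sarah_Johnson/TechCorp/LinkedIn_Profile
```

Because the wildcard sits only in trailing positions here, "equal on every fixed axis" is the same as the prefix match the text describes.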

🔒 Security Officers (CISOs): "Wait. You're telling me the permission check IS the cache lookup? They're the same operation?"

🔬 Judge (Structural Engineers): "Exactly. That's the breakthrough. When semantic address = physical address (S=P=H IS Grounded Position), the permission boundary = cache boundary. Not Calculated Proximity (cosine similarity) or Fake Position (row IDs)—true position via physical binding. The CPU doesn't need to ask 'Does this agent have permission?' The CPU asks 'Is this data in cache?' If yes → authorized. If no → blocked. The hardware enforces the permission boundary. No separate permission database. No permission API calls. Permission is geometry. Coherence is the mask. Grounding is the substance."

🔐 IAM Engineers: "But how do you prevent the agent from calculating the ShortRank coordinates of unauthorized data? If semantic positions are deterministic, the agent could compute [0.87, 0.62, 0.88] and try to access Sarah Johnson's data directly."

🔬 Judge (Structural Engineers): "The fractal boundary is hardware-enforced at the cache level. The agent can COMPUTE any coordinate it wants. But when it tries to ACCESS memory at that coordinate, the CPU checks: 'Is this address in the agent's allocated cache partition?' If no → segmentation fault. This is like virtual memory: a process can generate any memory address, but the MMU blocks out-of-bounds access. FIM works the same way—the fractal region defines the cache partition. The agent cannot escape its partition even if it knows coordinates outside it."
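The Judge's virtual-memory analogy can be made concrete in software. The sketch below is a stand-in for an MMU-style bounds check, not a model of how CPUs partition caches: the hypothetical `Partition` class lets the agent compute any address, but a read outside its granted `[base, limit)` ranges raises a hypothetical `SegmentationFault`. Addresses and contents are made up for illustration:

```python
class SegmentationFault(Exception):
    """Hypothetical error raised when an access falls outside every granted range."""


class Partition:
    """Software analogy of an MMU bounds check: any address may be computed,
    but reads succeed only inside the granted [base, limit) ranges."""

    def __init__(self, ranges: list[tuple[int, int]]) -> None:
        self.ranges = ranges

    def read(self, address: int, memory: dict[int, str]) -> str:
        if not any(base <= address < limit for base, limit in self.ranges):
            raise SegmentationFault(hex(address))
        return memory.get(address, "")


memory = {
    0x1000: "Bryan_Lemster/Halcyon/LinkedIn_Profile",
    0x8000: "Sarah_Johnson/TechCorp/LinkedIn_Profile",
}
agent = Partition([(0x1000, 0x2000)])  # the agent's granted region

print(agent.read(0x1000, memory))  # Bryan_Lemster/Halcyon/LinkedIn_Profile
try:
    agent.read(0x8000, memory)     # the agent "knows" this address, but cannot read it
except SegmentationFault as fault:
    print("blocked:", fault)       # blocked: 0x8000
```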

🔒 Security Officers (CISOs): "So the audit trail is... cache access logs?"

🔬 Judge (Structural Engineers): "CPU performance counters. Every cache access is automatically logged by hardware. You get: timestamp, address accessed, hit/miss, latency. We've measured: 1.2M queries over 6 months, zero audit log overhead. Traditional permission logging: 400GB/month. Hardware counters: 1.2GB/month. That's 333× reduction in audit storage. And the logs are tamper-proof—written by CPU, not software."
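The audit-storage claim is a ratio of the two monthly volumes the Judge quotes:

```python
permission_log_gb_per_month = 400    # traditional permission logging
hardware_counter_gb_per_month = 1.2  # hardware performance counters
reduction = permission_log_gb_per_month / hardware_counter_gb_per_month

print(f"audit storage reduction: {reduction:.0f}x")  # audit storage reduction: 333x
```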

🔐 IAM Engineers: "What about dynamic permission changes? Sales rep escalates to Sales Manager mid-session, needs broader access. How do you expand the fractal region without cache flush?"

🔬 Judge (Structural Engineers): "Fractal regions are composable. Sales Rep fractal: Bryan_Lemster/Halcyon/*. Sales Manager fractal: All_Prospects/Q4_Pipeline/*. When rep escalates, FIM unions the regions: {Bryan_Lemster/Halcyon/*} ∪ {All_Prospects/Q4_Pipeline/*}. The cache partition expands incrementally. No flush needed. This is why fractal math works—nested regions compose naturally."
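Composition by union can be sketched with plain path patterns. This assumes, for illustration only, that a region is a string ending in `/*` that covers every path under that prefix, and that an escalated session holds the set union of its regions; the helpers `matches` and `authorized` and the prospect `Acme` are hypothetical:

```python
def matches(path: str, pattern: str) -> bool:
    """True if path lies under a 'prefix/*' pattern, or equals a literal pattern."""
    if pattern.endswith("/*"):
        return path.startswith(pattern[:-1])  # compare against 'prefix/'
    return path == pattern


def authorized(path: str, regions: set[str]) -> bool:
    """A path is authorized if it lies inside ANY region of the set (the union)."""
    return any(matches(path, region) for region in regions)


sales_rep = {"Bryan_Lemster/Halcyon/*"}
sales_manager = {"All_Prospects/Q4_Pipeline/*"}
escalated = sales_rep | sales_manager  # {Bryan_Lemster/Halcyon/*} ∪ {All_Prospects/Q4_Pipeline/*}

print(authorized("All_Prospects/Q4_Pipeline/Acme/Call_History", sales_rep))  # False
print(authorized("All_Prospects/Q4_Pipeline/Acme/Call_History", escalated))  # True
print(authorized("Bryan_Lemster/Halcyon/Email_Thread", escalated))           # True
```

Because the union only adds patterns, the rep's original grant is untouched when the manager's region is added, which is the incremental property the Judge relies on.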

🔒 Security Officers (CISOs): "One more question: performance at scale. 10,000 concurrent agents, each with different fractal region. Does geometric permission checking become a bottleneck?"

🔬 Judge (Structural Engineers): "We've benchmarked this. Geometric boundary check: vector dot product. At n=10 dimensions: 0.4µs. RBAC role lookup: 18ms (directory query over network). FIM is 45,000× faster because evaluation is local (agent memory), not remote (IAM server). The counterintuitive result: adding dimensions DECREASES latency. You eliminate network hops. At 10,000 concurrent agents, RBAC infrastructure requires 200 directory servers (high availability). FIM requires 1 metadata server (agents cache fractal boundaries locally). Infrastructure cost: $1.2M/year RBAC vs $80K/year FIM."
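Checking the latency and cost ratios with the figures as quoted:

```python
rbac_lookup_s = 18e-3       # 18 ms directory query over the network
geometric_check_s = 0.4e-6  # 0.4 µs local check at n = 10
speedup = rbac_lookup_s / geometric_check_s

rbac_cost_usd, fim_cost_usd = 1_200_000, 80_000  # annual infrastructure cost

print(f"speedup: {speedup:,.0f}x")                                       # speedup: 45,000x
print(f"infrastructure cost ratio: {rbac_cost_usd / fim_cost_usd:.0f}x")  # infrastructure cost ratio: 15x
```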

🔐 IAM Engineers: "I'm convinced on the math. My concern: organizational adoption. Security teams think in roles, not geometric regions. How do we train them?"

🔬 Judge (Structural Engineers): "The UI abstracts geometry. Security admin sees: 'Grant AI agent access to prospect Bryan Lemster at Halcyon for Q4 campaign.' The form has 4 dropdown fields: Prospect Name, Company, Campaign, Data Types. Admin fills form. FIM translates to geometric region automatically. The dimensional math is invisible. Users interact with familiar concepts: people, companies, campaigns. FIM handles the vector space."

🔒 Security Officers (CISOs): "Final scenario: a vibecoding salesperson. The sales rep says 'Draft proposal for Bryan using his LinkedIn profile and our last 3 calls.' A natural-language request. How does FIM translate that into a geometric permission?"

🔬 Judge (Structural Engineers): "This is the killer app. Natural language maps to semantic coordinates. 'Bryan' → Prospect_Data/Bryan_Lemster. 'LinkedIn profile' → LinkedIn_Profile subtree. 'Last 3 calls' → Call_History[temporal=-3] slice. FIM constructs the query: {Prospect_Data/Bryan_Lemster/LinkedIn_Profile} ∪ {Prospect_Data/Bryan_Lemster/Call_History[temporal=-3]}. Then it checks: are these regions INSIDE the sales rep's fractal? Yes → Execute. No → Block with an explanation: 'You lack permission for Call_History. Request escalation?' This is vibecoding with geometric permissions. The sales rep speaks natural language. FIM enforces geometric boundaries. The AI agent operates safely."
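The request-to-permission flow can be sketched end to end under heavy simplification. The phrase-to-path table below is hypothetical and hard-coded (a real system would need an entity resolver to pick the right Bryan), paths follow the `Prospect_Data/Bryan_Lemster/Halcyon/...` layout from the earlier tree, and authorization is a plain string-prefix test:

```python
# Hypothetical phrase-to-path table standing in for natural-language resolution.
PHRASE_TO_PATH = {
    "Bryan": "Prospect_Data/Bryan_Lemster/Halcyon",
    "LinkedIn profile": "LinkedIn_Profile",
    "last 3 calls": "Call_History[temporal=-3]",
}


def requested_regions(entity: str, data_phrases: list[str]) -> list[str]:
    """Build one region path per requested data type under the resolved entity."""
    base = PHRASE_TO_PATH[entity]
    return [f"{base}/{PHRASE_TO_PATH[phrase]}" for phrase in data_phrases]


def arbitrate(regions: list[str], allowed_prefixes: list[str]) -> str:
    """Execute only if every requested region sits under some allowed prefix."""
    for region in regions:
        if not any(region.startswith(prefix) for prefix in allowed_prefixes):
            subtree = region.rsplit("/", 1)[-1].split("[")[0]  # e.g. 'Call_History'
            return f"Blocked: you lack permission for {subtree}. Request escalation?"
    return "Execute"


regions = requested_regions("Bryan", ["LinkedIn profile", "last 3 calls"])
print(arbitrate(regions, ["Prospect_Data/Bryan_Lemster/Halcyon/"]))
# Execute
print(arbitrate(regions, ["Prospect_Data/Bryan_Lemster/Halcyon/LinkedIn_Profile"]))
# Blocked: you lack permission for Call_History. Request escalation?
```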

🔒 Security Officers (CISOs): "That's... actually deployable. Approved."

[A7⚛️] The Zeigarnik Explosion

Without geometric permissions, the AI agent deployment rate stays at 11%. With geometric permissions, enterprises unlock the 73% piloting pipeline. The $4.2B AI governance consulting market exists because permission explosion has no mathematical solution—until now. FIM + Symbol Grounding proves permissions can be geometry instead of lookups. The compliance teams calculate: at 11% deployment rate, enterprises leave $18B AI productivity value stranded (agent pilots that never reach production). At 70% deployment rate (achievable with FIM), that value unlocks.

The early adopters who deploy geometric permissions now will have a three-year head start. While competitors scramble to fix permission explosion with more RBAC roles (heading toward an unmaintainable 100,000), the winners will operate AI agents with provable permission precision across n=10+ dimensions, hardware-enforced, with zero overhead.

The vibecoding sales teams are waiting. They can feel the power of AI agents during pilots. But the CISO blocks deployment. FIM unblocks it. First movers win the next decade of sales productivity.

Measurement: Permission precision = (c/t)^n. Increase n from 1 to 10. Deploy geometric boundaries. Unlock the 73% piloting pipeline: raise the deployment rate from 11% to 70%. $18B value creation.